AWS Pi Day, celebrated annually on March 14 (3.14), highlights the latest innovations in data management, analytics, and artificial intelligence (AI) from Amazon Web Services (AWS). What began in 2021 to mark the 15th anniversary of Amazon Simple Storage Service (Amazon S3) has evolved into a major event. It showcases how cloud technologies are reshaping the landscape of data and AI.

This year’s AWS Pi Day emphasizes how to accelerate analytics and AI innovation by establishing a unified data foundation on AWS. With the ongoing transformation of the data landscape, AI is now a critical component of most enterprise strategies, and analytics and AI workloads are increasingly converging around the same sets of data and workflows. Organizations need a simple way to access all their data and use their preferred analytics and AI tools within a single, integrated experience. AWS Pi Day 2025 introduces new capabilities designed to help you develop integrated data experiences.

The Next Generation of Amazon SageMaker: The Data and AI Hub

At re:Invent 2024, AWS unveiled the next generation of Amazon SageMaker, designed to be the central hub for all your data, analytics, and AI. SageMaker provides the components you need for data exploration, preparation, and integration; big data processing; fast SQL analytics; machine learning (ML) model development and training; and generative AI application development.

In this new generation of Amazon SageMaker, SageMaker Lakehouse provides unified access to your data, and SageMaker Catalog helps you meet your governance and security requirements. For details, see the corresponding launch blog post.

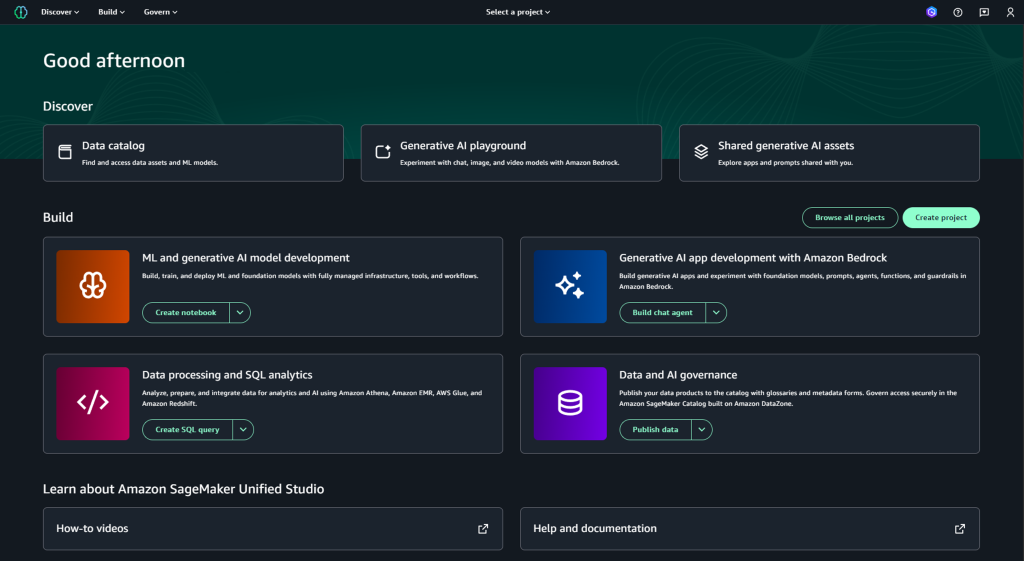

At the core of the next generation of Amazon SageMaker is SageMaker Unified Studio, a single data and AI development environment where you can use your data and tools for both analytics and AI. SageMaker Unified Studio is now generally available. It helps data scientists, analysts, engineers, and developers collaborate across data, analytics, AI workflows, and application development, and it brings tools from AWS analytics, AI, and ML services, including data processing, SQL analytics, ML model development, and generative AI application development, into a single, cohesive user experience. Analytics leaders at AWS describe the benefits of SageMaker Unified Studio in more depth in their blog post, “Accelerate analytics and AI innovation with the next generation of Amazon SageMaker.”

SageMaker Unified Studio also includes selected capabilities from Amazon Bedrock. You can quickly prototype, customize, and share generative AI applications using foundation models (FMs) and advanced features such as Amazon Bedrock Knowledge Bases, Amazon Bedrock Guardrails, Amazon Bedrock Agents, and Amazon Bedrock Flows. This lets you build solutions tailored to your requirements and responsible AI guidelines, all within the SageMaker environment.

In addition, Amazon Q Developer is now generally available in SageMaker Unified Studio. Amazon Q Developer provides generative AI-powered assistance for data and AI development, assisting with tasks such as writing SQL queries, building extract, transform, and load (ETL) jobs, and troubleshooting. It is available in both the Free and Pro tiers for existing subscribers. Further details regarding the general availability of SageMaker Unified Studio can be found in this recent blog post.

During re:Invent 2024, AWS also announced Amazon SageMaker Lakehouse as part of the next generation of SageMaker. SageMaker Lakehouse unifies your data across Amazon S3 data lakes, Amazon Redshift data warehouses, and third-party and federated data sources, so you can build analytics and AI/ML applications on a single copy of your data. With SageMaker Lakehouse, you can access and query data in place using Apache Iceberg-compatible tools and engines. In addition, zero-ETL integrations automate bringing data into SageMaker Lakehouse from AWS data sources such as Amazon Aurora and Amazon DynamoDB, and from applications such as Salesforce, Facebook Ads, Instagram Ads, ServiceNow, SAP, Zendesk, and Zoho CRM. The complete list of available integrations is in the SageMaker Lakehouse FAQ.

Building a Data Foundation with Amazon S3

A solid data foundation is essential for accelerating analytics and AI workloads, because it lets organizations manage, find, and use their data assets efficiently at any scale. As the world’s leading platform for building data lakes, Amazon S3 provides that foundation. Amazon S3 currently holds over 400 trillion objects and exabytes of data, and it processes 150 million requests per second. A decade ago, very few customers stored more than a petabyte of data on S3; today, thousands of customers have passed that threshold.

To reduce the operational burden of managing tabular data in S3 buckets, AWS announced Amazon S3 Tables at AWS re:Invent 2024. S3 Tables deliver the first cloud object store with native Apache Iceberg support. They are purpose-built for analytics workloads and provide up to three times faster query throughput and up to ten times more transactions per second than self-managed tables.

Today, AWS is announcing the general availability of Amazon S3 Tables integration with Amazon SageMaker Lakehouse. With this integration, you can easily access S3 Tables from various AWS analytics services such as Amazon Redshift, Amazon Athena, Amazon EMR, and AWS Glue, as well as Apache Iceberg–compatible engines like Apache Spark and PyIceberg. SageMaker Lakehouse also provides centralized management of fine-grained data access permissions for S3 Tables and other data sources, ensuring consistency across all engines.
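As a rough illustration of engine access through SageMaker Lakehouse, the following Python sketch builds the catalog properties a PyIceberg client might use. It assumes the AWS Glue Iceberg REST endpoint (`https://glue.<region>.amazonaws.com/iceberg`), a warehouse string of the form `<account-id>:s3tablescatalog/<table-bucket>`, and SigV4 signing for the `glue` service. These values reflect our understanding of the S3 Tables documentation and should be verified for your Region; the function name is hypothetical.

```python
def lakehouse_catalog_properties(region: str, account_id: str,
                                 table_bucket: str) -> dict:
    """Return PyIceberg REST-catalog properties for an S3 table bucket
    exposed through SageMaker Lakehouse.

    Assumption: the Lakehouse catalog is served by the AWS Glue Iceberg
    REST endpoint, and integrated table buckets appear under the
    "s3tablescatalog" catalog. Verify against current AWS documentation.
    """
    return {
        "type": "rest",
        # Assumed AWS Glue Iceberg REST endpoint for the Region.
        "uri": f"https://glue.{region}.amazonaws.com/iceberg",
        # Assumed warehouse format: <account-id>:s3tablescatalog/<bucket>.
        "warehouse": f"{account_id}:s3tablescatalog/{table_bucket}",
        # Sign every catalog request with SigV4 for the "glue" service.
        "rest.sigv4-enabled": "true",
        "rest.signing-name": "glue",
        "rest.signing-region": region,
    }
```

With PyIceberg installed, you could pass the result to `pyiceberg.catalog.load_catalog("lakehouse", **props)` and call `list_namespaces()` on the returned catalog. Keeping the endpoint details in a plain dictionary makes them easy to review and test without AWS credentials.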

If you use a third-party catalog, have a custom catalog implementation, or only need basic read and write access to tabular data in a single table bucket, AWS has introduced new APIs that are compatible with the Iceberg REST Catalog standard. With these APIs, any Iceberg-compatible application can create, update, list, and delete tables in an S3 table bucket. For unified data management, data governance, and fine-grained access control across all your tabular data, you can integrate S3 Tables with SageMaker Lakehouse.
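The sketch below shows what Iceberg REST Catalog compatibility means for a client. It maps the four table operations (create, update, list, delete) to the routes defined in the Apache Iceberg REST Catalog specification, and it builds PyIceberg properties for the S3 Tables endpoint. The endpoint URL (`https://s3tables.<region>.amazonaws.com/iceberg`), the table bucket ARN as warehouse, and the `s3tables` signing name are assumptions to verify against the documentation. The test treats the ARN as the path prefix purely as an assumption; in practice, a client such as PyIceberg learns the prefix from the catalog's `GET /v1/config` response and signs requests for you. The helpers are hypothetical and perform no network I/O.

```python
from typing import Optional, Tuple
from urllib.parse import quote

# Routes from the Apache Iceberg REST Catalog specification for the four
# table operations. {prefix} is the catalog prefix a client normally learns
# from the GET /v1/config response.
TABLE_ROUTES = {
    "list": ("GET", "/v1/{prefix}/namespaces/{namespace}/tables"),
    "create": ("POST", "/v1/{prefix}/namespaces/{namespace}/tables"),
    "update": ("POST", "/v1/{prefix}/namespaces/{namespace}/tables/{table}"),
    "delete": ("DELETE", "/v1/{prefix}/namespaces/{namespace}/tables/{table}"),
}


def s3tables_catalog_properties(region: str, table_bucket_arn: str) -> dict:
    """PyIceberg REST-catalog properties for the S3 Tables endpoint.

    Assumed values: endpoint URL, ARN as warehouse, "s3tables" signing name.
    """
    return {
        "type": "rest",
        "uri": f"https://s3tables.{region}.amazonaws.com/iceberg",
        "warehouse": table_bucket_arn,
        "rest.sigv4-enabled": "true",
        "rest.signing-name": "s3tables",
        "rest.signing-region": region,
    }


def table_request(base_uri: str, prefix: str, operation: str,
                  namespace: str, table: Optional[str] = None) -> Tuple[str, str]:
    """Return (HTTP method, URL) for one table operation; no I/O is done.

    Handles a single-level namespace only, for simplicity.
    """
    method, template = TABLE_ROUTES[operation]
    if "{table}" in template and not table:
        raise ValueError(f"operation {operation!r} requires a table name")
    # Percent-encode every path segment: ARNs contain ':' and '/'.
    path = template.format(
        prefix=quote(prefix, safe=""),
        namespace=quote(namespace, safe=""),
        table=quote(table or "", safe=""),
    )
    return method, base_uri.rstrip("/") + path
```

Separating route construction from HTTP transport keeps the mapping to the specification easy to inspect; a real client would add request bodies (for example, the table schema on `create`) and SigV4 signing.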

To facilitate easy access to S3 Tables, AWS has launched updates in the AWS Management Console. Users can now create a table, populate it with data, and query it directly from the S3 console using Amazon Athena, streamlining the process of getting started and analyzing data in S3 table buckets.

SageMaker Unified Studio provides a unified environment for data and AI development.

Create and query tables directly from the S3 console using Amazon Athena.
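For teams that want to script the same create, insert, and query flow that the S3 console offers, here is a hedged Python sketch that builds arguments for boto3's Athena `start_query_execution` call. The catalog name `s3tablescatalog/<table-bucket>`, the namespace used as the Athena database, the results location, and the `daily_sales` table with its columns are assumptions or hypothetical placeholders; the SQL is illustrative and is not what the console generates.

```python
# Hypothetical statements mirroring the console's create/insert/query flow.
STATEMENTS = [
    "CREATE TABLE daily_sales (sale_date date, category string, amount double) "
    "TBLPROPERTIES ('table_type' = 'iceberg')",
    "INSERT INTO daily_sales VALUES (DATE '2025-03-14', 'pie', 3.14)",
    "SELECT category, sum(amount) AS total FROM daily_sales GROUP BY category",
]


def athena_query_request(sql: str, table_bucket: str, namespace: str,
                         output_location: str) -> dict:
    """Keyword arguments for boto3's Athena start_query_execution.

    Assumption: Athena sees the integrated table bucket as the catalog
    "s3tablescatalog/<table_bucket>" and the namespace as the database.
    """
    return {
        "QueryString": sql,
        "QueryExecutionContext": {
            "Catalog": f"s3tablescatalog/{table_bucket}",
            "Database": namespace,
        },
        # Athena writes query results to this S3 location.
        "ResultConfiguration": {"OutputLocation": output_location},
    }
```

You could then run each statement with `boto3.client("athena").start_query_execution(**athena_query_request(sql, ...))` and poll the returned `QueryExecutionId` with `get_query_execution` until it completes. The statements must run in order, because the `INSERT` and `SELECT` depend on the table that `CREATE TABLE` creates.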

Since re:Invent 2024, AWS has continued to add capabilities to S3 Tables. For example, the CreateTable API now supports schema definition, and you can now create up to 10,000 tables in a single S3 table bucket. S3 Tables have also launched in eight additional AWS Regions, most recently Asia Pacific (Seoul, Singapore, Sydney) on March 4, with more Regions to follow. See the S3 Tables AWS Regions page of the documentation for the current list of the eleven Regions where S3 Tables are available.
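To illustrate schema definition in `CreateTable`, the sketch below builds a request body for the S3 Tables `CreateTable` operation with an Iceberg schema nested under `metadata.iceberg.schema.fields`. That nesting reflects our understanding of the API and should be checked against the current API reference. The helper name, bucket ARN, namespace, table, and column names are hypothetical, and the duplicate-name check is a local sanity check added here, not a documented service rule.

```python
def create_table_request(table_bucket_arn: str, namespace: str, name: str,
                         fields: list) -> dict:
    """Build keyword arguments for an S3 Tables CreateTable call that
    includes an Iceberg schema definition.

    fields: list of (column_name, iceberg_type, required) tuples.
    """
    names = [col for col, _, _ in fields]
    # Local sanity check: Iceberg column names in one schema must be unique.
    if len(set(names)) != len(names):
        raise ValueError("duplicate column names in schema")
    return {
        "tableBucketARN": table_bucket_arn,
        "namespace": namespace,
        "name": name,
        "format": "ICEBERG",
        # Assumed nesting for the schema definition.
        "metadata": {
            "iceberg": {
                "schema": {
                    "fields": [
                        {"name": col, "type": typ, "required": req}
                        for col, typ, req in fields
                    ]
                }
            }
        },
    }
```

With a boto3 release that includes schema support, the dictionary could be passed as `boto3.client("s3tables").create_table(**req)`. Defining the schema at creation time means the table is queryable immediately, without a separate engine step to declare columns.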

Amazon S3 Metadata, announced during re:Invent 2024, has been generally available since January 27. It is the fastest and easiest way to explore and understand your S3 data, through automated, easily queried metadata that updates in near real time. S3 Metadata works with S3 object tags, which let you logically group data for purposes such as applying IAM policies, specifying tag-based filters, and selectively replicating data to other Regions. In Regions where S3 Metadata is available, you can capture and query custom metadata stored as object tags. To help reduce the cost of using object tags with S3 Metadata, Amazon S3 has lowered the price of S3 object tagging by 35 percent in all Regions.
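To make the idea of queryable, tag-aware metadata concrete, the following sketch answers "which objects currently carry a given tag?" from a change journal: keep the newest record per key, drop deletions, and filter on `object_tags`. The record fields (`key`, `sequence_number`, `record_type`, `object_tags`) are modeled loosely on the S3 Metadata journal table. In-memory records and integer sequence numbers are simplifying assumptions; against a real metadata table you would express the same logic in SQL, for example in Athena. The function name is hypothetical.

```python
from typing import Iterable, List


def current_tagged_keys(records: Iterable[dict], tag: str, value: str) -> List[str]:
    """Reduce a change journal to the latest record per object key, then
    return live keys whose object_tags map has tag == value, sorted.

    Assumed record shape (simplified):
      {"key": str, "sequence_number": int,
       "record_type": "CREATE" | "UPDATE_METADATA" | "DELETE",
       "object_tags": dict}
    """
    latest = {}
    for rec in records:
        prev = latest.get(rec["key"])
        # Keep only the newest change for each key.
        if prev is None or rec["sequence_number"] > prev["sequence_number"]:
            latest[rec["key"]] = rec
    return sorted(
        key for key, rec in latest.items()
        if rec["record_type"] != "DELETE"
        and (rec.get("object_tags") or {}).get(tag) == value
    )
```

Usage: for a journal where `a.csv` was created with tag `project=pi`, `b.csv` was created with that tag and later deleted, and `c.csv` had the tag added by a metadata update, `current_tagged_keys(journal, "project", "pi")` returns `["a.csv", "c.csv"]`.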

AWS Pi Day 2025

Over the years, AWS Pi Day has highlighted key advancements in cloud storage and data analytics. The virtual AWS Pi Day event will offer a wide range of topics designed for developers, technical decision-makers, data engineers, AI/ML practitioners, and IT leaders. Key features include deep dives, live demos, and expert sessions on all of the services and capabilities discussed in this post. By attending the event, you can learn how to accelerate your analytics and AI innovation.

You will discover how to use S3 Tables, with native Apache Iceberg support, and S3 Metadata to build scalable data lakes that serve both traditional analytics and emerging AI/ML workloads. You will also learn how the next generation of Amazon SageMaker, the central hub for all your data, analytics, and AI, helps your teams collaborate and build faster from a unified studio with access to all your data, whether it is stored in data lakes, data warehouses, or third-party or federated data sources.

AWS Pi Day 2025 is a key event for those looking to stay informed about the latest cloud trends. Whether you are building data lakehouses, training AI models, developing generative AI applications, or optimizing analytics workloads, the insights offered can assist you in maximizing the value of your data.

Tune in to explore the latest innovations in cloud data, and don't miss the chance to engage with AWS experts, partners, and customers who are shaping the future of data, analytics, and AI. If you missed the virtual event on March 14, you can visit the event page at any time; all content is available on demand.