OpenAI Expands Voice AI Offerings with New Models

OpenAI is continuing its push into voice AI with the release of three new models designed for developers: gpt-4o-transcribe, gpt-4o-mini-transcribe, and gpt-4o-mini-tts. These models, built upon the existing GPT-4o technology, aim to provide improved transcription accuracy and customizable speech synthesis, enhancing the tools available for third-party developers. The company has also launched a demo site, OpenAI.fm, showcasing various voice customizations.

These new models arrive after OpenAI faced public scrutiny over its voice AI offerings, including criticism of a voice that sounded similar to actress Scarlett Johansson. The company clarified that users will be able to control how their AI voices sound, including accent, pitch, tone, and emotion.

Enhanced Transcription and Speech Capabilities

The new models are based on the GPT-4o model that powers the ChatGPT text and voice experience, but have been post-trained with additional data to excel at transcription and speech.

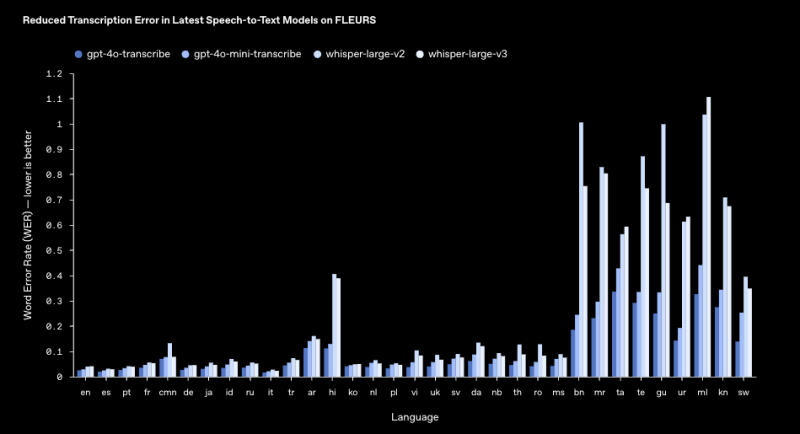

Jeff Harris, a member of OpenAI’s technical staff, showed in a demo how the voice can be customized using text prompts, allowing it to sound like a cackling mad scientist or a calm yoga instructor.

On the transcription side, the models use noise cancellation and semantic voice activity detection to improve accuracy. Performance has been refined for noisy environments, and the models recognize diverse accents and varying speech speeds across more than 100 languages. This builds upon the capabilities of the company’s open-source Whisper speech-to-text model. According to the company’s website, error rates for the new gpt-4o-transcribe models have fallen significantly when identifying words across 33 languages. In English, for example, the error rate is just 2.46%.
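To make the prompt-driven voice customization from Harris's demo more concrete, here is a minimal Python sketch that only builds a request body for gpt-4o-mini-tts and never sends it. The endpoint URL, the field names (`model`, `voice`, `input`, `instructions`), and the `coral` voice name are assumptions based on how OpenAI's speech API is commonly described, and `build_speech_request` is a hypothetical helper, not part of any SDK.

```python
import json

# Assumed REST endpoint for speech synthesis; shown for context only, never called here.
SPEECH_ENDPOINT = "https://api.openai.com/v1/audio/speech"


def build_speech_request(text, style, voice="coral"):
    """Build a JSON-serializable body for a speech request (hypothetical helper).

    `style` is a free-text description of how the voice should sound,
    e.g. "calm yoga instructor". Field names are assumptions.
    """
    if not text.strip():
        raise ValueError("text must not be empty")
    return {
        "model": "gpt-4o-mini-tts",
        "voice": voice,          # assumed preset voice name
        "input": text,           # the words to be spoken
        "instructions": style,   # assumed field carrying the style prompt
    }


if __name__ == "__main__":
    body = build_speech_request(
        "Breathe in slowly, and let your shoulders relax.",
        "Speak like a calm yoga instructor: slow, warm, and soothing.",
    )
    print(json.dumps(body, indent=2))
```

Swapping the style string for something like "Sound like a cackling mad scientist" would change only the `instructions` field; the spoken text stays the same.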

Applications and Developer Tools

The models are designed to work well in settings such as customer call centers, meeting note transcription, and AI-powered assistants. The Agents SDK, launched last week, makes it easy for developers to add voice interactions to text-based language models like GPT-4o.

According to Harris, these models introduce streaming speech-to-text, allowing developers to receive a real-time text stream, making conversations feel more natural. For low-latency, real-time AI voice experiences, OpenAI recommends using its speech-to-speech models in the Realtime API.
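The idea of a real-time text stream can be illustrated with a small Python sketch that assembles partial transcripts from a sequence of streaming events. It makes no network calls: the events are simulated, and the event type names (`transcript.text.delta`, `transcript.text.done`) and their `delta` and `text` fields are assumptions about the event shape rather than a confirmed description of OpenAI's API. `partial_transcripts` is a hypothetical helper.

```python
from typing import Iterable, Iterator


def partial_transcripts(events: Iterable[dict]) -> Iterator[str]:
    """Yield the transcript-so-far after each streaming event (hypothetical helper)."""
    text = ""
    for event in events:
        kind = event.get("type")
        if kind == "transcript.text.delta":
            # Incremental chunk: append it and surface the running transcript.
            text += event.get("delta", "")
            yield text
        elif kind == "transcript.text.done":
            # Final event: prefer its full text if present, then stop.
            text = event.get("text", text)
            yield text
            return


# Simulated events standing in for a live stream (assumed shapes).
simulated = [
    {"type": "transcript.text.delta", "delta": "Hello"},
    {"type": "transcript.text.delta", "delta": ", world"},
    {"type": "transcript.text.done", "text": "Hello, world."},
]

if __name__ == "__main__":
    for partial in partial_transcripts(simulated):
        print(partial)
```

An application could render each partial transcript as it arrives, so users see text appear while they are still speaking instead of waiting for the full result.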

Pricing and Competition

The new models are accessible immediately via OpenAI’s API with the following pricing structure:

- gpt-4o-transcribe: $6.00 per 1M audio input tokens (~$0.006 per minute)

- gpt-4o-mini-transcribe: $3.00 per 1M audio input tokens (~$0.003 per minute)

- gpt-4o-mini-tts: $0.60 per 1M text input tokens, $12.00 per 1M audio output tokens (~$0.015 per minute)
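The per-token and per-minute figures above can be related with some simple arithmetic. The following Python sketch uses only the prices listed in this article. The per-minute values are the approximations quoted above, the helper names are hypothetical, and the text-to-speech estimate ignores the separate $0.60 per 1M text input token charge.

```python
# Prices copied from the list above (USD). Per-minute values are approximate.
PRICES = {
    "gpt-4o-transcribe": {"per_million_audio_tokens": 6.00, "approx_per_minute": 0.006},
    "gpt-4o-mini-transcribe": {"per_million_audio_tokens": 3.00, "approx_per_minute": 0.003},
    "gpt-4o-mini-tts": {"per_million_audio_tokens": 12.00, "approx_per_minute": 0.015},
}


def implied_tokens_per_minute(model):
    """Audio tokens per minute implied by the two quoted prices (rounded)."""
    p = PRICES[model]
    price_per_token = p["per_million_audio_tokens"] / 1_000_000
    return round(p["approx_per_minute"] / price_per_token)


def estimate_cost(model, minutes):
    """Rough audio cost in USD for `minutes` of audio, using the per-minute figure."""
    if minutes < 0:
        raise ValueError("minutes must be non-negative")
    return round(minutes * PRICES[model]["approx_per_minute"], 4)


if __name__ == "__main__":
    for name in PRICES:
        print(name, implied_tokens_per_minute(name), estimate_cost(name, 60))
```

By these figures, the quoted rates imply roughly 1,000 audio tokens per minute for the two transcription models and about 1,250 per minute for speech output. A 60-minute meeting would cost about $0.36 to transcribe with gpt-4o-transcribe and about $0.18 with gpt-4o-mini-transcribe.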

The AI transcription and speech space is seeing increased competition, with companies like ElevenLabs and Hume AI offering distinct solutions.

Early Industry Adoption

OpenAI has shared testimonials from companies that have integrated the new audio models. EliseAI, which focuses on property management automation, noted that the text-to-speech model enabled more natural and emotionally rich interactions with tenants. Decagon, which builds AI-powered voice experiences, saw a 30% improvement in transcription accuracy by using OpenAI’s speech recognition model.

Reactions and Future Plans

While the new models have largely been well-received, not all reactions have been positive. Ben Hylak, co-founder of Dawn AI, suggested that the announcement “feels like a retreat from real-time voice.” The launch was also preceded by an early leak on X (formerly Twitter).

Looking ahead, OpenAI will continue refining its audio models, exploring custom voice capabilities, and investing in multimodal AI, including video.