Introduction of SWE-PolyBench

Amazon has introduced SWE-PolyBench, a multilingual benchmark for evaluating AI coding agents across several programming languages and real-world tasks. It addresses limitations of existing evaluation frameworks by covering more languages, more repositories, and a wider range of task types.

Limitations of Previous Benchmarks

Previous benchmarks such as SWE-Bench have been instrumental in advancing AI coding agents, but they have clear limitations. SWE-Bench is restricted to Python repositories, most of its tasks are bug fixes, and the Django repository is heavily over-represented. The result is a narrow evaluation scope that does not reflect the complexity of real-world software development.

Key Features of SWE-PolyBench

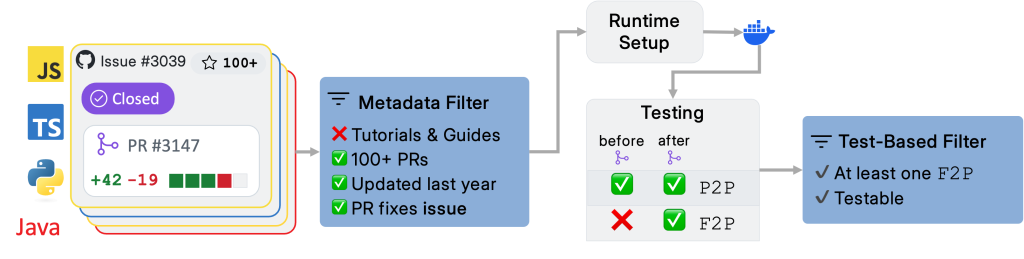

SWE-PolyBench overcomes these limitations by introducing several key features:

- Multi-Language Support: The benchmark includes tasks in four major programming languages: Java (165 tasks), JavaScript (1017 tasks), TypeScript (729 tasks), and Python (199 tasks).

- Extensive Dataset: With 2110 instances drawn from 21 diverse repositories, SWE-PolyBench matches the scale of SWE-Bench while offering broader repository coverage.

- Task Variety: The benchmark includes a mix of task categories such as bug fixes, feature requests, and code refactoring, providing a more comprehensive evaluation of AI coding agents’ capabilities.

- Rapid Experimentation: SWE-PolyBench500, a stratified subset of 500 issues, enables efficient experimentation and testing.

- Comprehensive Leaderboard: A detailed leaderboard with multiple metrics allows for transparent benchmarking and comparison of different AI coding assistants.
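To make the idea of a stratified subset concrete, here is a minimal sketch of stratified sampling by language. It is not the procedure used to build SWE-PolyBench500, which the article does not describe. The equal quota of 125 per language, the `stratified_sample` helper, and the synthetic instance records are all assumptions for illustration. Only the per-language task counts come from the list above.

```python
import random
from collections import defaultdict


def stratified_sample(instances, key, per_stratum, seed=0):
    """Draw up to `per_stratum` items from each group defined by `key`."""
    rng = random.Random(seed)  # fixed seed keeps the subset reproducible
    groups = defaultdict(list)
    for item in instances:
        groups[key(item)].append(item)
    sample = []
    for name in sorted(groups):  # sorted so the output order is deterministic
        members = groups[name]
        k = min(per_stratum, len(members))  # never ask for more than exists
        sample.extend(rng.sample(members, k))  # sampling without replacement
    return sample


# Synthetic stand-in for the dataset; only these counts come from the article.
LANGUAGE_COUNTS = {"Java": 165, "JavaScript": 1017, "TypeScript": 729, "Python": 199}
instances = [
    {"id": f"{lang}-{i}", "language": lang}
    for lang, n in LANGUAGE_COUNTS.items()
    for i in range(n)
]

# Assumed allocation: an equal quota per language (4 x 125 = 500).
subset = stratified_sample(instances, key=lambda x: x["language"], per_stratum=125)
print(len(instances), len(subset))
```

An equal quota keeps the smaller languages (Java, Python) from being swamped by JavaScript and TypeScript. A proportional quota would instead preserve the full dataset's language mix. The same helper can stratify on another field, such as task category, by changing `key`.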

Enhanced Evaluation Metrics

SWE-PolyBench evaluates AI coding agents with several metrics that go beyond a single pass/fail score:

- Pass Rate: Measures the proportion of tasks successfully solved by the AI coding agent.

- File-Level Localization: Evaluates the agent’s ability to identify the correct files that need modification.

- CST Node-Level Retrieval: Assesses the agent’s capability to pinpoint specific code structures requiring changes using Concrete Syntax Tree (CST) node analysis.

These metrics provide a more nuanced understanding of AI coding assistants’ strengths and weaknesses in navigating complex codebases.
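The three metrics above can be sketched in a few lines. This is an illustrative reading, not the benchmark's evaluation harness. It assumes localization is scored as set overlap (recall and precision) between what the agent changed and what the reference patch changed. The function names, file paths, and the `(file, node kind, name)` tuples standing in for CST nodes are hypothetical.

```python
def pass_rate(results):
    """Fraction of tasks solved; `results` holds one boolean per task."""
    return sum(results) / len(results) if results else 0.0


def retrieval_scores(predicted, expected):
    """Return (recall, precision) of a predicted set against a reference set."""
    predicted, expected = set(predicted), set(expected)
    hits = len(predicted & expected)  # items the agent got right
    recall = hits / len(expected) if expected else 0.0
    precision = hits / len(predicted) if predicted else 0.0
    return recall, precision


# Pass rate: three of four hypothetical tasks solved.
print(pass_rate([True, False, True, True]))

# File-level localization: files touched by the agent vs. the reference patch.
agent_files = {"src/a.ts", "src/b.ts"}
reference_files = {"src/a.ts", "src/c.ts", "src/d.ts"}
print(retrieval_scores(agent_files, reference_files))

# Node-level retrieval: the same overlap, computed on finer-grained units.
# Each tuple is an assumed stand-in for a CST node: (file, node kind, name).
agent_nodes = {("src/a.ts", "function", "parse"), ("src/a.ts", "class", "Lexer")}
reference_nodes = {("src/a.ts", "function", "parse")}
print(retrieval_scores(agent_nodes, reference_nodes))
```

Scoring the same overlap at two granularities separates an agent that finds the right file but edits the wrong function from one that never reaches the right file.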

Performance Insights

The evaluation of open-source coding agents on SWE-PolyBench revealed several key findings:

- Language Proficiency: All agents performed best in Python, highlighting the current bias towards Python in training data and existing benchmarks.

- Complexity Challenges: Performance degraded significantly as task complexity increased, particularly for tasks requiring modifications to multiple files or complex code structures.

- Task Specialization: Different agents showed varying strengths across different task categories, indicating the need for specialized improvements.

- Context Importance: The informativeness of problem statements had a significant impact on success rates across all agents.

Community Engagement and Future Directions

SWE-PolyBench and its evaluation framework are publicly available, inviting the global developer community to contribute to and build upon this work. As AI coding assistants continue to evolve, benchmarks like SWE-PolyBench will play a crucial role in ensuring these tools meet the diverse needs of real-world software development across multiple programming languages and task types.

Researchers and developers can access the SWE-PolyBench dataset on Hugging Face, the research paper on arXiv, and the evaluation harness on the project’s GitHub repository. A dedicated leaderboard tracks the performance of various AI coding agents, fostering healthy competition and driving innovation in the field.