Apple Explains AI-Generated App Store Review Summaries in iOS 18.4

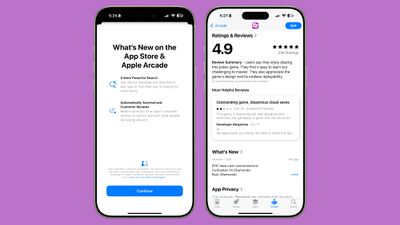

With iOS 18.4, Apple has introduced an App Store feature that condenses many user reviews into an at-a-glance overview of what people think of an app or game. In a recent post on its Machine Learning Research blog, Apple detailed how these summaries are generated.

The company uses a multi-step large language model (LLM) system to generate the summaries, aiming for overviews that are inclusive, balanced, and accurately reflect user sentiment. Apple says it prioritizes “safety, fairness, truthfulness, and helpfulness” while addressing the particular challenges of aggregating App Store reviews.

How Apple’s LLM System Works

- The system first filters out reviews containing spam, profanity, or fraud.

- Remaining reviews are processed through a sequence of LLM-powered modules that:

  - Extract key insights from each review

  - Aggregate recurring themes

  - Balance positive and negative feedback

  - Generate a summary (around 100-300 characters in length)
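To make the flow of those steps concrete, here is a toy sketch in Python. It is not Apple's implementation: every LLM module is replaced by a hypothetical keyword table (`BLOCKED`, `THEMES`, `NEGATIVE`) and a fixed sentence template, so it only illustrates how filtering, insight extraction, aggregation, balancing, and length-capped generation hand data to one another.

```python
import re
from collections import Counter

# Hypothetical keyword tables standing in for Apple's LLM modules (not Apple's method).
BLOCKED = {"spam", "scam", "fraud"}  # crude proxy for spam/profanity/fraud detection
THEMES = {"battery": "battery life", "crash": "stability",
          "ads": "ads", "design": "design", "sync": "syncing"}
NEGATIVE = {"crash", "crashes", "slow", "annoying", "drains", "broken"}


def tokens(text):
    """Lower-cased set of alphabetic words, for simple keyword matching."""
    return set(re.findall(r"[a-z]+", text.lower()))


def filter_reviews(reviews):
    """Step 1: drop reviews that contain a blocked term."""
    return [r for r in reviews if not tokens(r) & BLOCKED]


def extract_insights(review):
    """Step 2: map one review to (theme, sentiment) pairs."""
    words = tokens(review)
    sentiment = "negative" if words & NEGATIVE else "positive"
    return [(theme, sentiment) for key, theme in THEMES.items()
            if any(w.startswith(key) for w in words)]


def aggregate(insights):
    """Step 3: count how often each (theme, sentiment) pair recurs."""
    return Counter(insights)


def balance(counts, per_side=2):
    """Step 4: keep the most frequent themes from each side so neither dominates."""
    ranked = [pair for pair, _ in counts.most_common()]
    pos = [t for t, s in ranked if s == "positive"][:per_side]
    neg = [t for t, s in ranked if s == "negative"][:per_side]
    return pos, neg


def generate_summary(pos, neg, max_len=300):
    """Step 5: fill a template (an LLM would write free text), capped at max_len chars."""
    parts = []
    if pos:
        parts.append("Reviewers praise the app's " + " and ".join(pos) + ".")
    if neg:
        parts.append("Some users report problems with " + " and ".join(neg) + ".")
    return " ".join(parts)[:max_len]


def summarize(reviews):
    """Run the five steps in order on a list of review strings."""
    kept = filter_reviews(reviews)
    insights = [pair for r in kept for pair in extract_insights(r)]
    pos, neg = balance(aggregate(insights))
    return generate_summary(pos, neg)


reviews = [
    "Love the design, very clean.",
    "Great design and battery is fine.",
    "App crashes every time I sync.",
    "Free money scam, click here",
]
print(summarize(reviews))
# prints: Reviewers praise the app's design and battery life. Some users report problems with stability and syncing.
```

The balancing step takes a fixed number of themes from each side, which is one simple way to keep a single loud complaint or a wave of praise from dominating; the sketch enforces only the 300-character ceiling, not the 100-character floor.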

Apple uses a specially trained LLM for each step, which is intended to keep the summaries faithful to user sentiment. During development, human raters reviewed thousands of summaries to assess factors such as helpfulness, composition, and safety.
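Human evaluation of this kind usually ends with aggregating many individual judgments. The sketch below assumes a hypothetical record format with one score per criterion on a 1-5 scale; neither the scale nor the `threshold` value comes from Apple's post. It averages each criterion and flags summaries that fall short on any of them.

```python
from statistics import mean

# Hypothetical rubric: criteria named in the article, scored on an assumed 1-5 scale.
CRITERIA = ("helpfulness", "composition", "safety")


def aggregate_ratings(ratings):
    """Mean score per criterion across every rater judgment."""
    return {c: mean(r[c] for r in ratings) for c in CRITERIA}


def flag_summaries(ratings, threshold=3):
    """IDs of summaries whose mean score on any criterion falls below threshold."""
    by_summary = {}
    for r in ratings:
        by_summary.setdefault(r["summary_id"], []).append(r)
    return [sid for sid, rs in by_summary.items()
            if any(mean(x[c] for x in rs) < threshold for c in CRITERIA)]


ratings = [
    {"summary_id": "s1", "helpfulness": 4, "composition": 5, "safety": 5},
    {"summary_id": "s1", "helpfulness": 5, "composition": 4, "safety": 5},
    {"summary_id": "s2", "helpfulness": 2, "composition": 4, "safety": 5},
]
print(aggregate_ratings(ratings))
print(flag_summaries(ratings))  # ['s2']
```

Flagging on the weakest criterion rather than an overall average means a summary that reads well but scores poorly on, say, safety is still caught.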

Challenges in Review Summarization

- Reviews change over time as apps receive new releases, features, and bug fixes, so summaries must adapt dynamically.

- Reviews vary in length and may include off-topic comments or noise that the LLM needs to filter out.
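One hypothetical way to handle both challenges before summarization is to favor recent reviews and discard ones whose length suggests noise. The sketch below uses exponential decay with an assumed 30-day half-life and assumed word-count bounds; Apple's post does not say it works this way, and a production system would more likely judge relevance with a model than with a length check.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class Review:
    text: str
    posted: date


def select_reviews(reviews, today, half_life_days=30, min_words=3, max_words=200):
    """Weight reviews by recency and drop ones whose length suggests noise.

    Returns (weight, review) pairs, most recent first. Hypothetical sketch only.
    """
    weighted = []
    for r in reviews:
        n_words = len(r.text.split())
        if not min_words <= n_words <= max_words:
            continue  # crude length filter standing in for a relevance check
        age_days = (today - r.posted).days
        weight = 0.5 ** (age_days / half_life_days)  # weight halves every half_life_days
        weighted.append((weight, r))
    weighted.sort(key=lambda pair: pair[0], reverse=True)
    return weighted


# Illustrative dates only.
today = date(2025, 4, 1)
reviews = [
    Review("Great update, syncing finally works", date(2025, 4, 1)),
    Review("ok", date(2025, 3, 31)),
    Review("Crashed constantly on the old version", date(2025, 3, 2)),
]
for weight, review in select_reviews(reviews, today):
    print(f"{weight:.2f}  {review.text}")
```

Decay weighting lets complaints about a bug fade as newer reviews arrive after a fix, without a hard cutoff that would throw away older but still relevant feedback.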

Review summarization is one of the more visible uses of AI on the App Store, aimed at helping users gauge an app without reading dozens of individual reviews. For the technical details, Apple’s full blog post offers a deeper look at how the summaries are generated.

AI-generated review summaries change how Apple presents aggregated user feedback and may influence how users perceive and choose apps on the platform.