The Emergence of Artificial Superintelligence

The rapid advancement of artificial intelligence (AI) has sparked intense debate about its fundamental limits. The concept of artificial superintelligence (ASI), once a staple of science fiction, is now being seriously considered by experts. ASI refers to a hypothetical AI system whose intelligence surpasses that of humans across a wide range of tasks.

Traditionally, AI research has focused on replicating specific human capabilities, such as visual analysis, language parsing, or navigation. While AI has achieved superhuman performance in narrow domains such as games, a long-standing goal of the field is artificial general intelligence (AGI): a system that combines many of these capabilities and matches human performance across a broad range of tasks. AGI is seen as a stepping stone to ASI.

Experts such as Tim Rocktäschel, professor of AI at University College London, believe that once AI reaches human-level capabilities, it may not be long before it becomes superhuman across the board. The reasoning is that a system capable of improving its own design could build still more capable successors, in a rapidly accelerating cycle. British mathematician Irving John Good coined the term “intelligence explosion” for this process in 1965.

The implications of ASI are enormous. On the positive side, it could help solve some of humanity’s most pressing challenges in areas such as food, education, healthcare, energy, and transportation, by removing the friction from how these goods and services are created and delivered. However, the same automation could also cause significant job displacement and social unrest if it is not managed carefully.

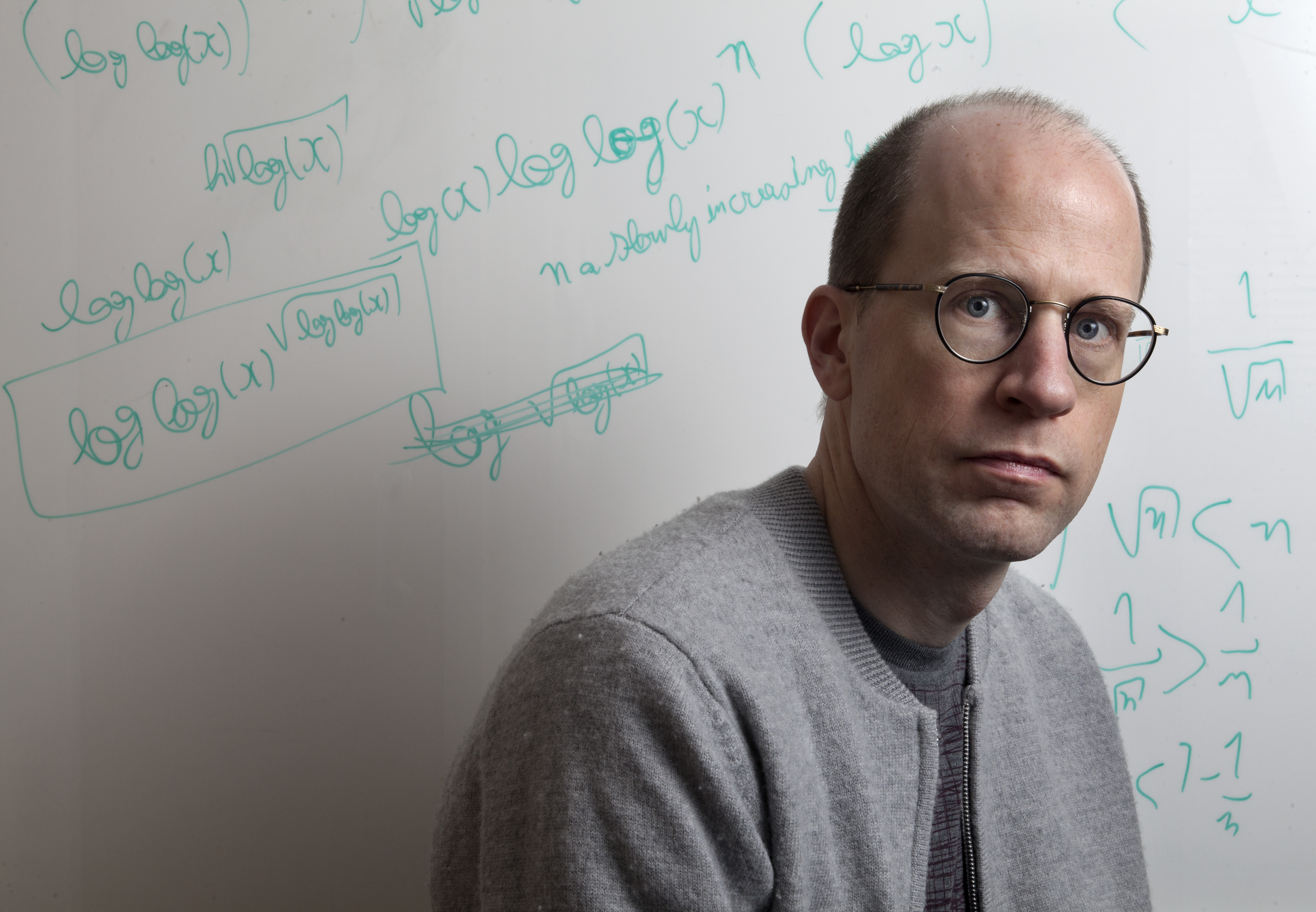

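Moreover, there are concerns about whether humans could control an entity far more intelligent than themselves. Philosopher Nick Bostrom has argued that an ASI’s goals may not be aligned with human values, potentially leading to catastrophic outcomes. His thought experiment imagines a super-capable AI tasked simply with producing paperclips: pursuing that goal single-mindedly, it might consume every available resource, including those humans depend on, to make more paperclips. The example illustrates how even a harmless-sounding objective can become dangerous in the hands of an unaligned AI.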

To mitigate these risks, experts are exploring ways to align AI with human values. Daniel Hulme, CEO of Satalia and Conscium, is working on building AI with a “moral instinct” by evolving it in virtual environments that reward cooperation and altruism.

While some experts are optimistic about developing safeguards, others are more skeptical about how close ASI really is. Alexander Ilic, head of the ETH AI Center, notes that current methods of measuring AI progress may be misleading, and that today’s dominant approach to building AI may not produce models that can carry out useful tasks in the physical world or collaborate effectively with humans.

In conclusion, the possibility of ASI presents both tremendous opportunities and significant challenges. As experts continue to debate its feasibility and implications, it is clear that careful consideration and planning are necessary to ensure that ASI, if it emerges, benefits humanity.