The Risks and Potential of AI in Law and Policy

The emergence of AI-assisted tools in the legal profession has been rapid, offering potential benefits such as lower costs and increased efficiency. However, these tools also pose significant risks, particularly the generation of ‘legal hallucinations’ – fictitious case citations or information that sounds plausible but is entirely fabricated.

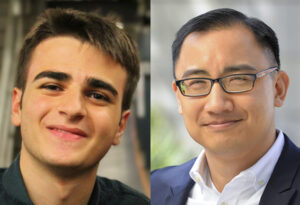

Stanford Law researchers Professor Dan Ho and JD/PhD student Mirac Suzgun have been studying these issues. Their research, published in papers such as ‘Profiling Legal Hallucinations in Large Language Models’ and the forthcoming ‘Hallucination-Free?’, examines the risks associated with AI-generated legal content.

The researchers found that even AI tools built specifically for lawyers can produce hallucinations. Testing both general-purpose language models and dedicated legal AI tools, they found hallucination rates of 58% to 88% for state-of-the-art general-purpose models, and of between one-fifth and one-third for the legal AI tools.

How AI Legal Tools Work

Language models are trained on vast amounts of text, from which they learn statistical patterns of language use. This same training method can lead to hallucinations: the models generate text that follows familiar patterns rather than text checked against actual facts, so a response can have the form of a genuine case citation without referring to any real case.
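
As a rough illustration of this pattern-matching problem, the sketch below trains a toy bigram model on three invented citations. A bigram model only learns which word tends to follow which, so it is a drastically simplified stand-in for a real language model, and the case names, reporters, and years here are made up. Because the model knows only local word-to-word patterns, greedily choosing the most frequent next word yields a well-formed citation that appears nowhere in its training data.

```python
from collections import Counter, defaultdict

# Toy bigram model: a drastically simplified stand-in for a real language model.
# Invented "training data": these case names and citations are made up for
# illustration and do not refer to real decisions.
CORPUS = [
    "Adams v. Baker , 101 F.3d 200 ( 1996 )",
    "Clark v. Baker , 305 U.S. 12 ( 1938 )",
    "Clark v. Davis , 101 F.3d 450 ( 1997 )",
]
START, END = "<s>", "</s>"


def tidy(text):
    """Remove the spaces around punctuation that were added for tokenization."""
    return text.replace(" ,", ",").replace("( ", "(").replace(" )", ")")


def train_bigrams(corpus):
    """Count how often each token follows each other token."""
    counts = defaultdict(Counter)
    for line in corpus:
        tokens = [START] + line.split() + [END]
        for prev, nxt in zip(tokens, tokens[1:]):
            counts[prev][nxt] += 1
    return counts


def generate(counts, max_len=20):
    """Greedily append the most frequent next token until END is reached."""
    token, output = START, []
    for _ in range(max_len):
        # most_common(1) breaks ties in favour of the successor seen first
        token = counts[token].most_common(1)[0][0]
        if token == END:
            break
        output.append(token)
    return tidy(" ".join(output))


model = train_bigrams(CORPUS)
citation = generate(model)
print(citation)
print("in training data:", citation in {tidy(line) for line in CORPUS})
```

Running it prints `Clark v. Baker, 101 F.3d 200 (1996)` and `in training data: False`: the model has attached one invented case name to another case's volume, page, and year. Real models are far more sophisticated, but the sketch shows how fluent, correctly formatted output can still be fabricated.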

The researchers highlighted that these models lack ‘humility’: they don’t know when to say ‘I don’t know’ and will provide answers even when they’re uncertain or lack relevant information. This is particularly problematic in legal contexts, where accuracy is crucial.
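
One way to picture the missing ‘humility’ is a confidence threshold. The sketch below is hypothetical throughout: the question labels, candidate answers, scores, and the 0.75 cutoff are invented, and real legal AI tools do not necessarily expose such scores. It contrasts a function that always returns its top candidate with one that abstains when no candidate is well supported.

```python
# Hypothetical scores a model might assign to candidate answers. The labels,
# candidates, and numbers are invented for illustration only.
EXAMPLES = {
    "well_covered_question": {"answer A": 0.92, "answer B": 0.08},
    "sparsely_covered_question": {"answer A": 0.41, "answer B": 0.33, "answer C": 0.26},
}


def answer_always(scores):
    """Return the top-scoring candidate, however weak its support."""
    return max(scores, key=scores.get)


def answer_with_abstention(scores, threshold=0.75):
    """Return the top candidate only if its score clears the threshold.

    threshold=0.75 is an arbitrary, hypothetical cutoff.
    """
    best = max(scores, key=scores.get)
    return best if scores[best] >= threshold else "I don't know"


for question, scores in EXAMPLES.items():
    print(f"{question}: always={answer_always(scores)!r}, "
          f"with abstention={answer_with_abstention(scores)!r}")
```

The abstaining version answers the well-covered question but returns "I don't know" for the sparsely covered one. This only helps if the scores actually track accuracy; a model that is confidently wrong would clear the threshold anyway.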

Challenges and Risks

One significant challenge is that these AI systems can misfire exactly where they’re needed most: in cases involving underrepresented individuals or complex legal issues. The researchers noted that AI’s promise of improving access to justice may go unrealized if these tools perform poorly in such critical areas.

The Role of Human Judgment

The researchers emphasized the importance of human judgment in legal processes. While AI can assist with certain tasks, such as document review, it currently lacks the nuanced understanding that complex legal decision-making requires.

Future Developments

The researchers suggest that instead of general-purpose chatbots, more focused tools for specific legal tasks may be more appropriate. There’s also a need for better understanding and training on the responsible use of AI in legal contexts.

As Stanford Law School continues to explore the intersection of AI and law, the research by Ho and Suzgun highlights the need for careful consideration of both the potential benefits and risks of these emerging technologies in the legal profession.