New Framework Enhances AI Reasoning Capabilities

A new framework developed by researchers at the University of Illinois, Urbana-Champaign, and the University of California, Berkeley, gives developers more control over how large language models (LLMs) ‘think,’ improving their reasoning capabilities while making more efficient use of their inference budget. The framework, called AlphaOne (α1), is a test-time scaling technique that tweaks a model’s behavior during inference without needing costly retraining.

The Challenge of Slow Thinking in AI

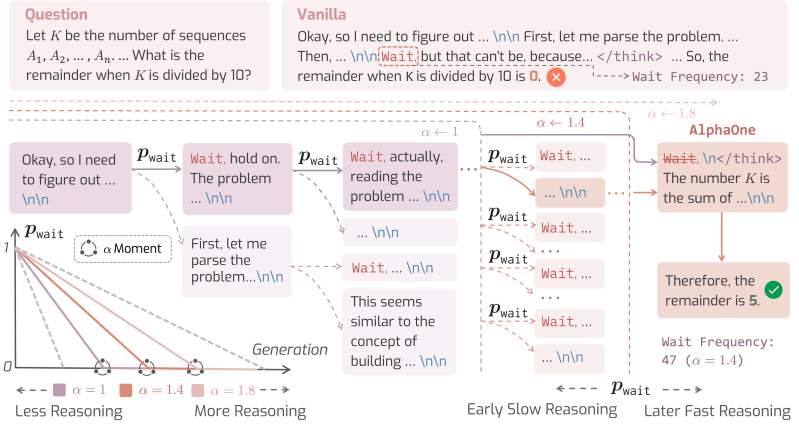

Recent large reasoning models (LRMs) incorporate mechanisms inspired by ‘System 2’ thinking, the slow, deliberate, and logical mode of human cognition. This is distinct from ‘System 1’ thinking, which is fast, intuitive, and automatic. System 2 capabilities enable models to solve complex problems, but the models don’t always use them effectively: they often ‘overthink’ simple problems or ‘underthink’ complex ones.

AlphaOne addresses this by introducing Alpha (α), a parameter that acts as a dial to scale the model’s thinking phase budget. Up to a point called the ‘α moment,’ the system strategically schedules the insertion of ‘wait’ tokens to encourage slow, deliberate thought. After the α moment, it forces the model to switch to fast reasoning and produce its final answer.
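The two-phase scheduling described above can be illustrated with a small Python sketch. It is not the researchers' implementation. Several details are assumptions made for illustration: the α moment is taken as α times an average thinking length, the ‘wait’ insertion probability decays linearly to zero at that point, and `</think>` is used as the end-of-thinking marker. The names `next_step`, `p_start`, and `think_with_alpha` are hypothetical, and `next_step` stands in for whatever produces the model's next reasoning step.

```python
import random
from typing import Callable, List

WAIT = "wait"            # slow-thinking token named in the article
END_THINK = "</think>"   # ASSUMED end-of-thinking marker, not given in the article


def wait_probability(tokens_so_far: int, alpha_moment: int, p_start: float) -> float:
    """ASSUMED schedule: linear decay from p_start at token 0 to 0 at the alpha moment."""
    if tokens_so_far >= alpha_moment:
        return 0.0
    return p_start * (1.0 - tokens_so_far / alpha_moment)


def think_with_alpha(
    next_step: Callable[[List[str]], List[str]],  # hypothetical model hook
    avg_thinking_tokens: int,
    alpha: float,
    p_start: float = 0.4,
    max_steps: int = 64,
    seed: int = 0,
) -> List[str]:
    """Run a slow-then-fast thinking phase and return the thinking tokens."""
    rng = random.Random(seed)
    moment = int(alpha * avg_thinking_tokens)  # ASSUMED definition of the alpha moment
    context: List[str] = []
    for _ in range(max_steps):
        step = next_step(context)
        if len(context) < moment:
            # Slow phase: keep the step, then maybe nudge the model to reflect further.
            context.extend(step)
            if END_THINK in step:
                return context
            if rng.random() < wait_probability(len(context), moment, p_start):
                context.append(WAIT)
        else:
            # Fast phase: any attempt to keep reflecting is cut off here.
            if WAIT in step:
                context.extend(step[: step.index(WAIT)])
                context.append(END_THINK)
                return context
            context.extend(step)
            if END_THINK in step:
                return context
    context.append(END_THINK)  # safety stop if the model never ends its thinking
    return context


def toy_model(context: List[str]) -> List[str]:
    """Hypothetical stand-in for a reasoning model that always wants to keep reflecting."""
    return ["step", WAIT]


if __name__ == "__main__":
    print(think_with_alpha(toy_model, avg_thinking_tokens=10, alpha=1.0))
```

In this sketch, raising `alpha` moves the α moment later and so lengthens the slow phase, while lowering it forces the switch to fast reasoning sooner. A real system would apply the same logic inside the decoding loop of an actual model rather than around a toy function.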

Key Findings and Implications

The researchers tested AlphaOne on three different reasoning models across six challenging benchmarks. The results showed that a ‘slow thinking first, then fast thinking’ strategy leads to better reasoning performance in LRMs. Investing in slow thinking also made inference more efficient overall: compared to baseline methods, AlphaOne reduced average token usage by about 21% and boosted reasoning accuracy by 6.15%.

For enterprise applications like complex query answering or code generation, these gains translate into improved generation quality and significant cost savings. The AlphaOne framework is expected to be released soon and could help developers build more stable, reliable, and efficient applications on top of the next generation of reasoning models.