Brain’s ‘Summaries’ vs. AI’s Full-Text Processing

Published February 27, 2025, this article examines how the human brain processes information differently from artificial intelligence. In recent years, Large Language Models (LLMs) like ChatGPT and Bard have shown a remarkable ability to generate text, translate languages, and analyze sentiment. However, a new study from the Technion-Israel Institute of Technology reveals critical differences between how the brain handles text and how these AI models do.

Unlike artificial language models, which process entire texts at once, the human brain creates “summaries” while reading, helping it understand what comes next. This ability allows us to process vast amounts of information over time, whether in a lecture, a book, or a podcast.

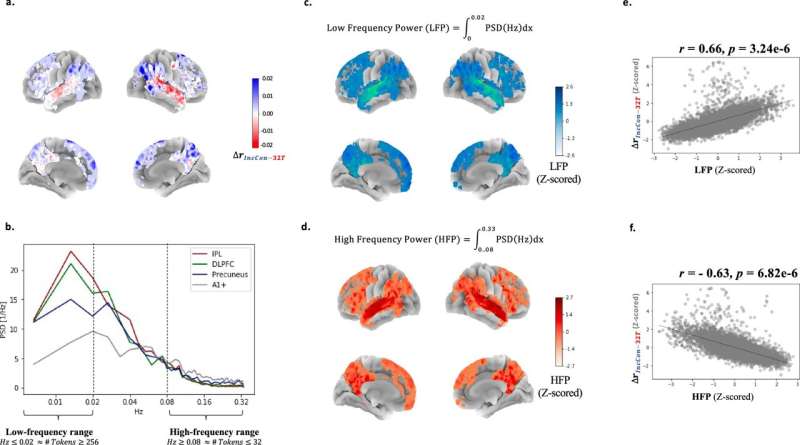

The complementary spectral analysis. Credit: Nature Communications (2025). DOI: 10.1038/s41467-025-56162-9

The research, published in Nature Communications, was led by Professor Roi Reichart and Dr. Refael Tikochinski from the Faculty of Data and Decision Sciences. It involved analyzing fMRI brain scans of 219 participants while they listened to stories. Researchers compared the brain’s activity to predictions made by existing LLMs.

They found that AI models accurately predicted brain activity for short texts (a few dozen words). For longer texts, however, the models' predictions were no longer accurate.

Contextual processing in the brain vs. large language models. Credit: Nature Communications (2025). DOI: 10.1038/s41467-025-56162-9

The reason for this discrepancy? Both the brain and LLMs can process short texts in parallel, analyzing all the words at once, but the brain uses a different strategy when faced with longer texts. Since the brain cannot process every single word simultaneously, it stores a contextual summary, a kind of “knowledge reservoir”, that it uses to interpret upcoming words. In contrast, AI models process all previously heard text at the same time, so they do not need this summarization mechanism.

To test their theory, the researchers developed an improved AI model that mimics the brain’s summarization process. Instead of processing the full text, this model created dynamic summaries and used them to interpret the text that followed. With this change, the model's predictions of brain activity improved significantly, supporting the idea that the human brain constantly summarizes past information so it can make sense of new input.
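As a rough illustration of the running-summary idea, and not the researchers' actual model, the sketch below walks through a text in fixed-size chunks and pairs each chunk with a short summary of everything that came before it. All names here (`toy_summarize`, `contexts_with_running_summary`, `window`, `summary_size`) are hypothetical, and the "summarizer" is a deliberately crude stand-in that keeps the most recent longer words; a real system would use a learned summarization step.

```python
from typing import Callable, List, Tuple

Summarizer = Callable[[List[str], int], List[str]]


def toy_summarize(words: List[str], max_words: int) -> List[str]:
    """Hypothetical stand-in for a real summarizer.

    Keeps only words longer than three characters (a crude proxy for
    content words) and returns the most recent `max_words` of them.
    """
    if max_words <= 0:
        return []
    content = [w for w in words if len(w) > 3]
    return content[-max_words:]


def contexts_with_running_summary(
    words: List[str],
    window: int,
    summary_size: int,
    summarize: Summarizer = toy_summarize,
) -> List[Tuple[List[str], List[str]]]:
    """Pair each chunk of text with a summary of the text before it.

    Returns a list of (summary_so_far, current_chunk) tuples. The context
    for any chunk is bounded by summary_size + window words, however long
    the full text is.
    """
    if window <= 0:
        raise ValueError("window must be positive")
    summary: List[str] = []
    results: List[Tuple[List[str], List[str]]] = []
    for start in range(0, len(words), window):
        chunk = words[start:start + window]
        # The chunk is interpreted using only the summary of earlier text.
        results.append((list(summary), chunk))
        # Fold the chunk into the summary before moving on.
        summary = summarize(summary + chunk, summary_size)
    return results


if __name__ == "__main__":
    story = "the old sailor told the children about a storm near the island".split()
    for summary, chunk in contexts_with_running_summary(story, window=4, summary_size=3):
        print("summary:", summary, "| chunk:", chunk)
```

The design point is the bounded context: a full-text approach would hand the model every earlier word at each step, so its input grows with the length of the story, while this loop never passes more than `summary_size + window` words.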

Further analysis mapped the specific brain regions involved in short-term and long-term text processing and identified the areas responsible for context accumulation, the process that enables us to understand ongoing narratives.