Introduction

We articulate a vision of artificial intelligence (AI) as normal technology, in contrast to both utopian and dystopian views that treat it as a highly autonomous entity. Viewing AI as normal technology does not understate its impact; it places AI alongside other transformative technologies such as electricity and the internet.

The Normal Technology Frame

This perspective sees AI as a tool over which we can and should retain control. It rejects technological determinism and draws on lessons from past technological revolutions. The normal technology frame emphasizes the slow and uncertain nature of technology adoption and diffusion, and the role of institutions in shaping AI’s trajectory.

The Speed of Progress

AI’s impact materializes not when methods improve, but when those improvements are translated into applications and diffused through productive sectors. There are speed limits at each stage, including safety considerations that limit how quickly new technologies can scale.

Safety-Critical Applications

AI diffusion in safety-critical areas is slow due to regulatory requirements and the need for interpretability. Examples include medical devices and high-frequency trading systems.

Organizational and Institutional Change

Adoption is limited by the speed of human, organizational, and institutional change. The diffusion of general-purpose technologies occurs over decades, not years.

Risks and Mitigation

We consider five types of risks: accidents, arms races, misuse, misalignment, and systemic risks. Our view is that deployers and developers should have primary responsibility for mitigating accidents, with market forces and safety regulation playing crucial roles.

Arms Races and Misuse

Arms races are sector-specific and should be addressed through sector-specific regulations. The primary defenses against misuse must sit downstream of models, building on existing protections against non-AI threats and adapting them to AI-enabled attacks.

Misalignment and Systemic Risks

We consider catastrophic misalignment a speculative risk. Normal AI may introduce many kinds of systemic risks, including bias, job losses, and inequality, which on this view matter more than catastrophic risks.

Policy Implications

Policymakers should center resilience, taking actions that improve our ability to deal with unexpected developments. Reducing uncertainty through research funding, monitoring of AI use, and guidance on evidence is also crucial.

Resilience Approach

Resilience combines elements of ex ante and ex post approaches, managing change to protect core values. It includes societal resilience, prerequisites for effective technical defenses, and interventions that help regardless of AI’s future.

Avoiding Nonproliferation

Nonproliferation policies are infeasible to enforce and lead to single points of failure. They decrease competition and increase market concentration, making the system brittle against shocks.

Realizing AI Benefits

Progress is not automatic; there are roadblocks to AI diffusion. Policy can mitigate these by promoting AI literacy, workforce training, and diffusion-enabling regulation.

Regulation and Diffusion

Regulation versus diffusion is a false tradeoff. Nuanced regulation can promote beneficial AI adoption while addressing risks.

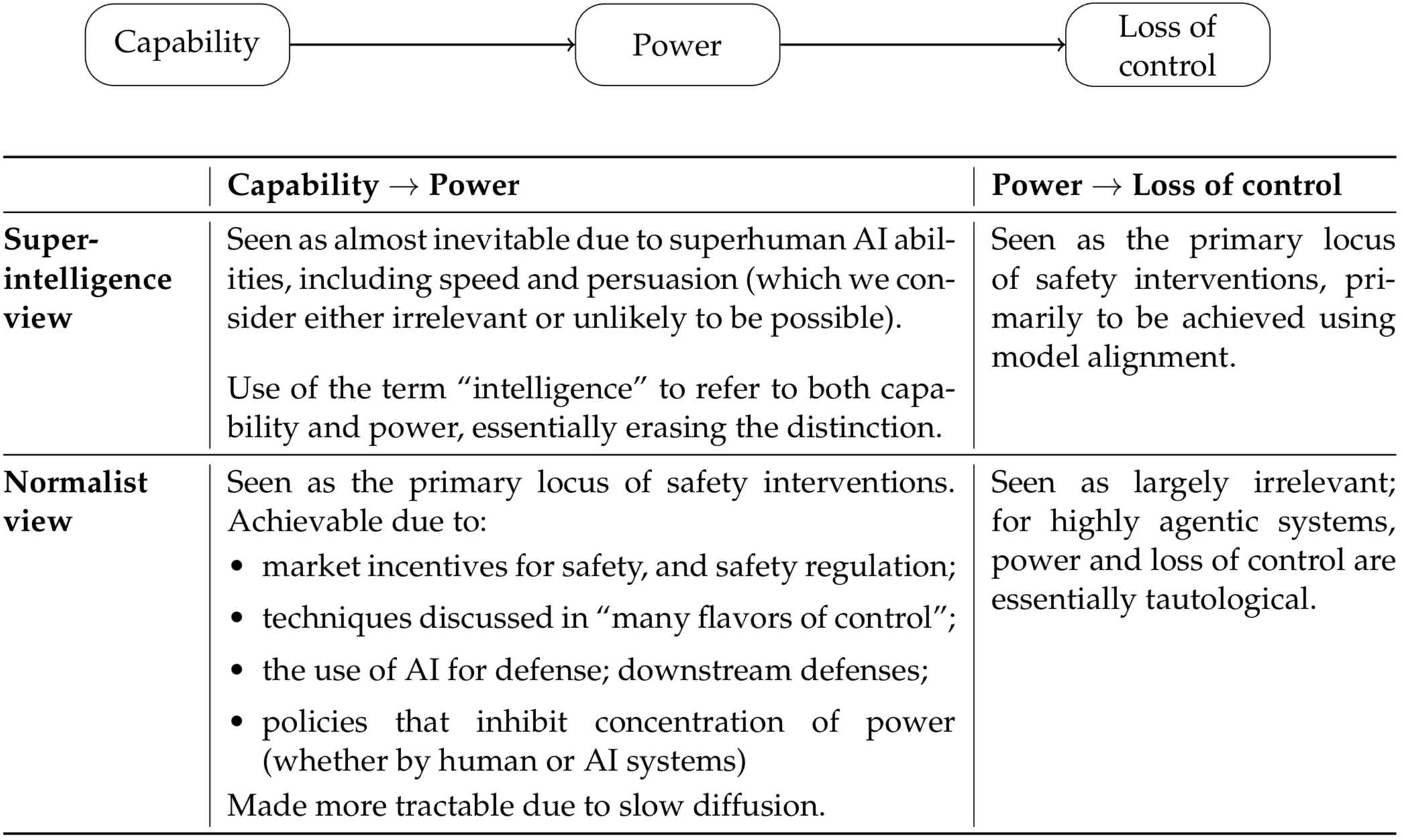

Conclusion

The normal technology view stands in contrast to the superintelligence view. Making these worldviews explicit can foster mutual understanding among stakeholders who disagree about AI progress, risks, and policy.