Introduction

The integration of artificial intelligence (AI) into healthcare, particularly for predicting adverse events, holds significant potential but also presents substantial challenges. This review focuses on the critical aspects of AI implementation in clinical decision support (CDS) systems, emphasizing data quality, model interpretability, and ethical considerations.

Challenges in AI Implementation

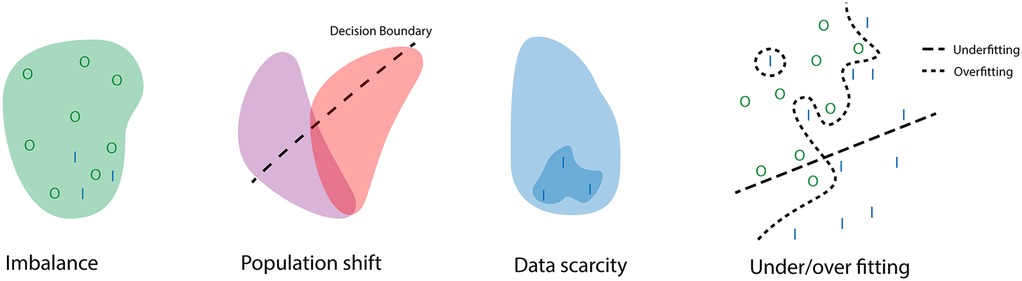

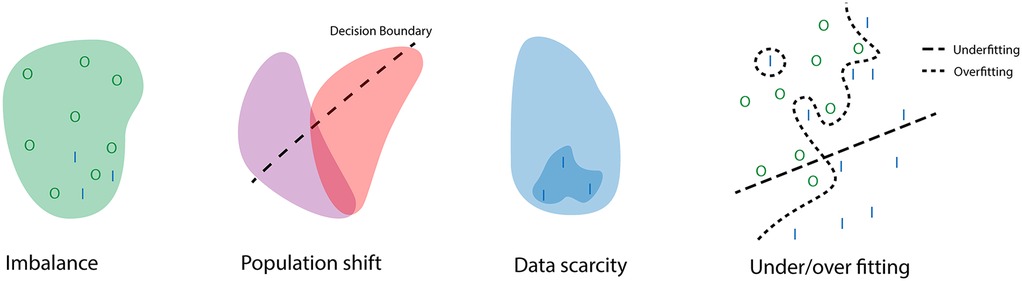

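One of the primary challenges in AI-driven CDS is data acquisition and preparation. Biases in data collection can lead a model to learn patterns that reflect how the data were gathered rather than the underlying clinical reality. Techniques such as resampling and data augmentation can help correct imbalances in the training data, but they cannot make up for populations that were never sampled. External validation on independent cohorts is therefore necessary to detect, and ultimately mitigate, population bias.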
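The review does not prescribe a particular resampling scheme. As a minimal sketch, assuming a binary adverse-event label and simple random oversampling (duplicating randomly chosen minority-class rows until the classes are balanced), the idea looks like this. The function name `random_oversample` and the toy cohort are illustrative only.

```python
import random
from collections import Counter

def random_oversample(rows, labels, seed=0):
    """Duplicate randomly chosen rows of each smaller class until every
    class has as many examples as the largest one (illustrative helper)."""
    rng = random.Random(seed)
    counts = Counter(labels)
    target = max(counts.values())
    out_rows, out_labels = list(rows), list(labels)
    for cls, n in counts.items():
        members = [r for r, y in zip(rows, labels) if y == cls]
        # Sample with replacement from the existing members of this class.
        for _ in range(target - n):
            out_rows.append(rng.choice(members))
            out_labels.append(cls)
    return out_rows, out_labels

# Hypothetical toy training split: 8 patients without the event, 2 with it.
X_train = [[i] for i in range(10)]
y_train = [0] * 8 + [1] * 2
X_bal, y_bal = random_oversample(X_train, y_train)
print(sorted(Counter(y_bal).items()))  # [(0, 8), (1, 8)]
```

Oversampling should be applied only to the training split, after the train/test split, so that duplicated patients do not leak into evaluation. It also only rebalances the classes already present; it does nothing for patient groups missing from the data, which is why external validation remains necessary.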

Data Acquisition and Preparation

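Data scarcity is another significant issue, particularly for rare adverse events, where positive cases may be too few for a model to learn from. It can be partly mitigated through data augmentation or synthetic data generation. Handling missing data is also critical: the assumed mechanism of missingness, whether missing completely at random (MCAR), missing at random (MAR, where missingness depends only on observed variables), or missing not at random (MNAR, where it depends on the unobserved values themselves), determines which imputation strategies are appropriate.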
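To make the distinction between missingness mechanisms concrete, the sketch below simulates a hypothetical cohort with an always-observed ICU flag and a lactate-like lab value, then removes values under MCAR, MAR, and MNAR rules. The cohort, probabilities, and helper names (`simulate`, `mask`, `group_mean_impute`) are assumptions made for illustration, and group-mean imputation stands in for the more principled methods (such as multiple imputation) used in practice.

```python
import random
import statistics

def simulate(n=20000, seed=1):
    """Hypothetical cohort: an ICU flag (always observed) and a lab value
    that is higher, on average, in ICU patients."""
    rng = random.Random(seed)
    icu = [rng.random() < 0.3 for _ in range(n)]
    value = [rng.gauss(4.0, 1.0) if u else rng.gauss(1.5, 0.5) for u in icu]
    return icu, value

def mask(icu, value, mechanism, seed=2):
    """Return the values with None wherever the value is treated as missing."""
    rng = random.Random(seed)
    out = []
    for u, v in zip(icu, value):
        if mechanism == "MCAR":    # unrelated to any variable
            p = 0.3
        elif mechanism == "MAR":   # depends only on the observed ICU flag
            p = 0.1 if u else 0.6
        elif mechanism == "MNAR":  # depends on the unobserved value itself
            p = 0.6 if v < 2.0 else 0.1
        else:
            raise ValueError(mechanism)
        out.append(None if rng.random() < p else v)
    return out

def complete_case_mean(values):
    """Mean of the observed values only, ignoring missing entries."""
    return statistics.fmean(v for v in values if v is not None)

def group_mean_impute(icu, values):
    """Fill each gap with the observed mean of the patient's ICU group."""
    means = {
        g: statistics.fmean(v for u, v in zip(icu, values) if u == g and v is not None)
        for g in (True, False)
    }
    return [means[u] if v is None else v for u, v in zip(icu, values)]

icu, value = simulate()
true_mean = statistics.fmean(value)
for mech in ("MCAR", "MAR", "MNAR"):
    observed = mask(icu, value, mech)
    cc = complete_case_mean(observed)
    gi = statistics.fmean(group_mean_impute(icu, observed))
    print(f"{mech}: true={true_mean:.2f} complete-case={cc:.2f} group-imputed={gi:.2f}")
```

Under MCAR, both estimates should land close to the true mean. Under MAR, the complete-case mean is pulled toward the ICU values, and conditioning on the observed ICU flag removes most of that bias. Under MNAR, conditioning on observed variables is not enough, because the probability of a value being missing depends on the value itself.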

Interpretability and Trust

Many AI models lack interpretability, which raises trust and transparency concerns. Global interpretation methods, which describe a model's overall behavior, and local methods, which explain individual predictions, are being developed to address this. However, interpretability does not replace rigorous testing on the specific hospital population before implementation.
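The review does not name specific interpretation methods. As one illustrative pairing, the sketch below computes permutation importance (a global method: how much the log-loss worsens when one feature is shuffled) and a per-feature log-odds decomposition for a single patient (a local method, exact for a linear logit). The model, feature names, weights, and cohort are all hypothetical; the model is treated as already fitted.

```python
import math
import random

# Hypothetical, already-fitted logistic model; names and weights are illustrative.
FEATURES = ["age_z", "lactate_z", "noise"]
WEIGHTS = [0.5, 1.5, 0.0]
INTERCEPT = -1.0

def logit(x):
    return INTERCEPT + sum(w * v for w, v in zip(WEIGHTS, x))

def predict(x):
    return 1.0 / (1.0 + math.exp(-logit(x)))

def log_loss(X, y, eps=1e-12):
    total = 0.0
    for x, t in zip(X, y):
        p = min(max(predict(x), eps), 1 - eps)  # clip to avoid log(0)
        total -= t * math.log(p) + (1 - t) * math.log(1 - p)
    return total / len(y)

def permutation_importance(X, y, seed=0):
    """Global view: increase in log-loss when one feature column is shuffled."""
    rng = random.Random(seed)
    base = log_loss(X, y)
    scores = {}
    for j, name in enumerate(FEATURES):
        col = [row[j] for row in X]
        rng.shuffle(col)
        X_perm = [row[:j] + [c] + row[j + 1:] for row, c in zip(X, col)]
        scores[name] = log_loss(X_perm, y) - base
    return scores

def local_contributions(x, reference):
    """Local view: each feature's contribution to this patient's log-odds
    relative to a reference patient (exact for a linear logit)."""
    return {n: w * (v - r) for n, w, v, r in zip(FEATURES, WEIGHTS, x, reference)}

# Hypothetical cohort; labels drawn from the same model for illustration.
rng = random.Random(42)
X = [[rng.gauss(0, 1) for _ in FEATURES] for _ in range(5000)]
y = [1 if rng.random() < predict(x) else 0 for x in X]

print(permutation_importance(X, y))
reference = [sum(col) / len(X) for col in zip(*X)]  # cohort-average patient
patient = [1.2, 2.0, -0.3]
print(local_contributions(patient, reference))
```

The local contributions sum to `logit(patient) - logit(reference)`, so they explain exactly how far this patient's predicted log-odds sit from the average patient. For non-linear models this exactness no longer holds, and approximate local methods are needed. Neither view says whether the model is accurate on a given hospital's population; that still requires local testing.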

Clinical Translation

Successful implementation of AI in healthcare requires more than technical functionality. It involves thoughtful integration into existing clinical workflows, training for healthcare professionals, and consideration of ethical and societal implications. Trust in AI algorithms can be fostered through transparency and rigorous testing, much as drugs whose mechanism of action is not fully understood are adopted after thorough clinical investigation.

Limitations and Future Directions

This review highlights the need for meticulous development, validation, and responsible implementation of AI in CDS systems. Although it addresses significant challenges, it has limitations, notably its brief treatment of technical and ethical nuances.

Conclusion

Implementing AI for predicting adverse events in healthcare demands careful attention to data quality, preprocessing, model training, interpretability, and ethical considerations. Addressing these challenges is crucial for realizing AI’s transformative potential while ensuring its trustworthy integration into clinical decision-making processes.