AWS and DXC Revolutionize Multilingual Customer Support with Real-Time Voice Translation in Amazon Connect

Global businesses often face operational challenges when providing effective multilingual customer support. To address this, AWS and DXC Technology collaborated on a scalable voice-to-voice (V2V) translation prototype that changes how contact centers handle customer interactions across languages. This post describes how AWS and DXC used Amazon Connect and other AWS AI services to deliver near real-time V2V translation.

The Challenge: Supporting Customers in Multiple Languages

In the third quarter of 2024, DXC Technology presented AWS with a significant business challenge. Their global contact centers needed to serve customers in multiple languages without the high costs associated with hiring language-specific agents, especially for languages with lower call volumes. Existing alternatives presented limitations, including communication constraints and infrastructure requirements that affected reliability, scalability, and operational costs.

To overcome these hurdles, DXC and AWS held a focused hackathon, during which AWS Solutions Architects and DXC experts collaborated to:

- Define essential requirements for real-time translation.

- Establish latency and accuracy benchmarks.

- Design seamless integration paths with existing systems.

- Develop a phased implementation strategy.

- Prepare and test an initial proof of concept.

Business Impact

For DXC, this prototype became a key enabler, helping the company make better use of its technical talent, transform operations, and reduce costs through:

- Optimized Technical Expertise: Hiring and matching agents based on technical skills rather than language proficiency, ensuring customers receive top-quality technical support regardless of language.

- Enhanced Global Flexibility: Removing geographical and language constraints in hiring, placement, and support delivery while maintaining consistent service quality across all languages.

- Cost Reduction: Eliminating premiums for multilingual expertise, specialized language training, and infrastructure costs by using a pay-per-use translation model.

- Native-Speaker Experience: Maintaining a natural conversation flow with near real-time translation and audio feedback, offering premium technical support in the customer’s preferred language.

Solution Overview

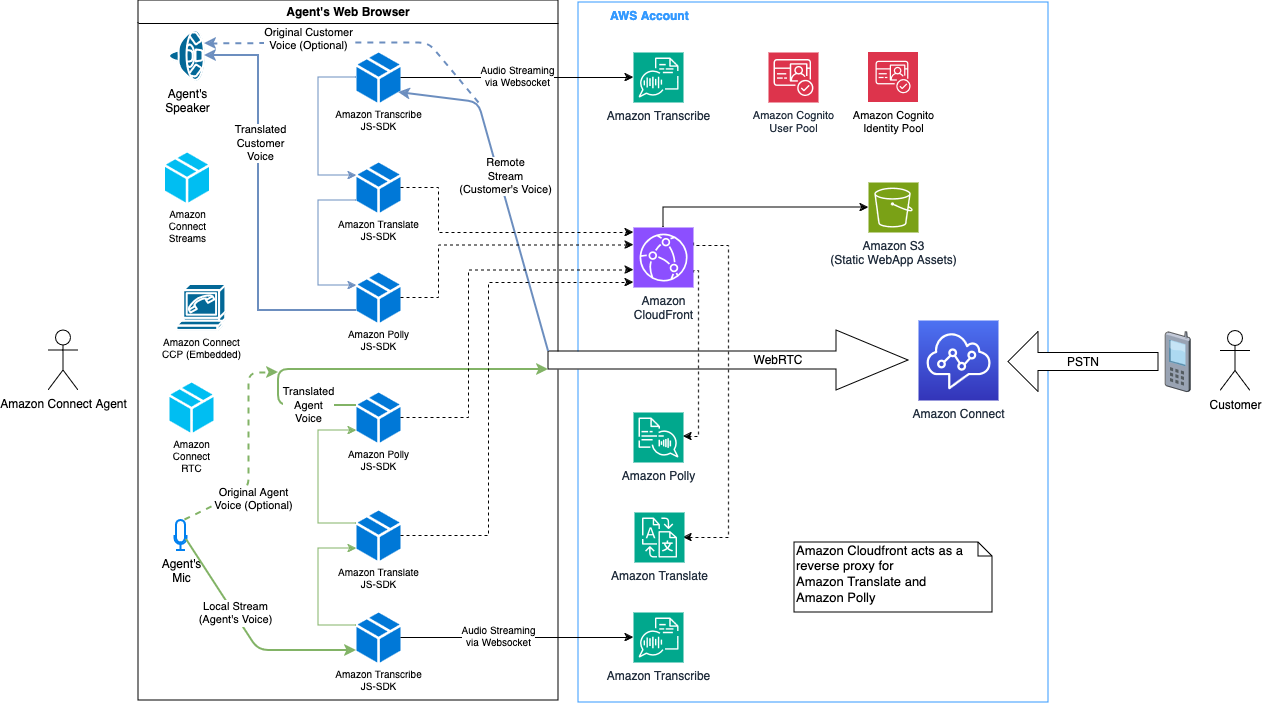

The Amazon Connect V2V translation prototype combines speech recognition, machine translation, and speech synthesis to translate conversations between agents and customers in near real time, so each party can speak and listen in their preferred language. The system comprises the following key components:

- Speech Recognition: Amazon Transcribe captures and converts the customer’s spoken language into text. This text then feeds into the machine translation engine.

- Machine Translation: Amazon Translate translates the customer’s text into the agent’s preferred language in near real time.

- Speech Synthesis: Amazon Polly converts the translated text into speech for the agent.

- Bidirectional Translation: The process is reversed for the agent’s response, translating their speech into the customer’s language and delivering the translated audio.

- Seamless Integration: The V2V translation project integrates with Amazon Connect through the Amazon Connect Streams JS and Amazon Connect RTC JS libraries, so agents can handle multilingual customer interactions without additional training or effort. The prototype is open source and can be customized to fit your specific needs or extended with other AWS AI services to further tailor the translation capabilities.

The diagram at the start of this section illustrates the solution architecture.
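
The transcribe, translate, and synthesize flow described above can be sketched for a single utterance. This is a minimal Python sketch, not code from the sample project: it assumes Amazon Transcribe streaming has already produced a finalized transcript segment, and it takes boto3-style Amazon Translate and Amazon Polly clients as parameters so they can be swapped for other engines or test doubles. The names `translate_turn` and `TranslatedTurn` are hypothetical.

```python
from dataclasses import dataclass
from typing import Any


@dataclass
class TranslatedTurn:
    """Result of translating one utterance for the other party (hypothetical type)."""
    source_text: str
    translated_text: str
    audio_pcm: bytes  # signed 16-bit mono little-endian PCM returned by Amazon Polly


def translate_turn(
    transcript: str,
    source_lang: str,
    target_lang: str,
    voice_id: str,
    translate: Any,  # e.g. boto3.client("translate")
    polly: Any,      # e.g. boto3.client("polly")
) -> TranslatedTurn:
    """Translate a finalized transcript segment and synthesize it as speech.

    The same function serves both directions: swap the language codes and
    choose a voice that speaks the listener's language.
    """
    if not transcript.strip():
        # Nothing to translate (for example, an empty or silent segment).
        return TranslatedTurn(transcript, "", b"")

    translated = translate.translate_text(
        Text=transcript,
        SourceLanguageCode=source_lang,
        TargetLanguageCode=target_lang,
    )["TranslatedText"]

    speech = polly.synthesize_speech(
        Text=translated,
        OutputFormat="pcm",
        SampleRate="16000",  # Polly accepts "8000" or "16000" for PCM output
        VoiceId=voice_id,
    )
    audio = speech["AudioStream"].read()
    return TranslatedTurn(transcript, translated, audio)
```

For the customer-to-agent direction you might call `translate_turn(text, "es", "en", "Joanna", translate, polly)`; the agent-to-customer direction swaps the language codes and uses a voice for the customer's language, such as `"Lucia"` for Spanish. Passing the clients in, rather than creating them inside the function, keeps the engines replaceable.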

The screenshot illustrates a sample agent web application.

The user interface includes three key sections:

- Contact Control Panel: A softphone client using Amazon Connect.

- Customer Controls: Controls for customer-to-agent interaction, including Transcribe Customer Voice, Translate Customer Voice, and Synthesize Customer Voice.

- Agent Controls: Controls for agent-to-customer interaction, including Transcribe Agent Voice, Translate Agent Voice, and Synthesize Agent Voice.
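
Each direction therefore has three toggles. One hypothetical way to model them is shown below; the class and method names are ours, not the sample project's, and the sketch assumes each stage consumes the previous stage's output (translation needs a transcript, synthesis needs translated text), so a stage is skipped whenever an earlier one is switched off.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DirectionControls:
    """Hypothetical toggles for one direction (customer->agent or agent->customer)."""
    transcribe: bool = False
    translate: bool = False
    synthesize: bool = False

    def active_stages(self) -> list[str]:
        """Return the stages that can actually run, in pipeline order.

        Assumption: a stage only runs if every earlier stage is enabled,
        because it depends on the earlier stage's output.
        """
        stages = []
        for name, enabled in (
            ("transcribe", self.transcribe),
            ("translate", self.translate),
            ("synthesize", self.synthesize),
        ):
            if not enabled:
                break
            stages.append(name)
        return stages
```

For example, enabling Transcribe and Synthesize but not Translate yields only the transcription stage under this assumption.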

Addressing the Challenges of Near Real-Time Voice Translation

The Amazon Connect V2V sample project was designed to minimize audio processing time. Even so, the experience doesn’t fully match a natural conversation between two people who speak the same language, because the customer hears only the agent’s translated speech, and vice versa, with silence while the other party speaks and while audio is processed.

This diagram illustrates the workflow without audio streaming add-ons.

Consider the following example workflow:

- The customer speaks in their native language for 10 seconds.

- The agent hears 10 seconds of silence.

- Audio processing takes 1–2 seconds; both parties hear silence.

- Translated speech is streamed to the agent. During this time, the customer hears silence.

- The agent speaks for 10 seconds.

- The customer hears 10 seconds of silence.

- Audio processing takes 1–2 seconds; both parties hear silence.

- The agent’s translated speech is streamed to the customer. During this time, the agent hears silence.

In this scenario, the customer hears approximately 22–24 seconds of silence in a single exchange (assuming each translated utterance lasts about as long as the original), which can create a suboptimal experience. The customer might wonder whether the agent can hear them or suspect a technical issue.
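
The 22–24 second figure follows from adding up the steps listed above. The sketch below encodes one exchange as a timeline and sums the segments in which each party hears nothing; it assumes, as the figure implies, that translated speech lasts as long as the original utterance. The `Segment` type and function names are illustrative only.

```python
from typing import NamedTuple


class Segment(NamedTuple):
    label: str
    seconds: float
    customer_silent: bool  # True if the customer hears nothing during this segment
    agent_silent: bool     # True if the agent hears nothing during this segment


def exchange_without_addons(customer_speech: float, agent_speech: float,
                            processing: float) -> list[Segment]:
    """One customer/agent exchange without audio streaming add-ons.

    Assumes each translated utterance lasts as long as the original one.
    """
    return [
        Segment("customer speaks", customer_speech, False, True),
        Segment("process customer audio", processing, True, True),
        Segment("translated speech to agent", customer_speech, True, False),
        Segment("agent speaks", agent_speech, True, False),
        Segment("process agent audio", processing, True, True),
        Segment("translated speech to customer", agent_speech, False, True),
    ]


def silence(segments: list[Segment], who: str) -> float:
    """Total time during which `who` ("customer" or "agent") hears silence."""
    attr = "customer_silent" if who == "customer" else "agent_silent"
    return sum(s.seconds for s in segments if getattr(s, attr))
```

With 10 seconds of speech on each side, `silence(exchange_without_addons(10, 10, 1), "customer")` returns 22 and a 2-second processing time gives 24. Under these assumptions the agent's silence comes out the same, since the timeline is symmetric.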

Audio Streaming Add-ons: Enhancing the Conversation Experience

To provide a more natural conversation experience, the Amazon Connect V2V sample project implements audio streaming add-ons. The aim is to mimic the experience of a conversation where a translator is present.

This diagram depicts the workflow using audio streaming add-ons.

The workflow with audio streaming add-ons includes these steps:

- The customer speaks for 10 seconds.

- The agent hears the customer’s original voice (at a lower volume).

- Audio processing takes 1–2 seconds. Both the agent and the customer receive subtle audio feedback, such as background noise, at low volume (“Audio Feedback” enabled).

- The translated speech is streamed to the agent, and the customer hears their own translated speech at a lower volume.

- The agent speaks for 10 seconds; the customer hears the agent’s original voice (at a lower volume).

- Audio processing takes 1–2 seconds; both the agent and the customer receive subtle audio feedback.

- The translated speech is streamed to the customer, and the agent hears their own translated speech at a lower volume.

This approach replaces a long period of silence with shorter blocks of audio feedback, which is more similar to a conversation with a real-time translator.
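
The add-ons rely on two basic audio operations: playing a track at reduced volume and filling processing gaps with quiet noise instead of silence. The sketch below shows both on 16-bit PCM samples in plain Python. It is an illustration of the technique under our own assumptions, not the sample project's implementation, and the gain and amplitude values are hypothetical.

```python
import random
from array import array
from typing import Optional

INT16_MIN, INT16_MAX = -32768, 32767


def _clip(value: int) -> int:
    """Clamp a sample to the signed 16-bit range to avoid wrap-around distortion."""
    return max(INT16_MIN, min(INT16_MAX, value))


def scale(samples: array, gain: float) -> array:
    """Return a copy of 16-bit PCM samples scaled by gain (e.g. 0.2 for 'lower volume')."""
    return array("h", (_clip(round(s * gain)) for s in samples))


def mix(*tracks: array) -> array:
    """Sum 16-bit PCM tracks sample by sample, treating shorter tracks as silence."""
    length = max((len(t) for t in tracks), default=0)
    out = array("h", [0]) * length
    for i in range(length):
        out[i] = _clip(sum(t[i] for t in tracks if i < len(t)))
    return out


def feedback_noise(n: int, amplitude: int = 300, seed: Optional[int] = None) -> array:
    """Generate n samples of low-level random noise to play while audio is processed."""
    rng = random.Random(seed)
    return array("h", (rng.randint(-amplitude, amplitude) for _ in range(n)))
```

For instance, the agent's output during the customer's turn could be `scale(customer_original, 0.2)`, and `mix` could layer `feedback_noise(...)` under other audio while processing runs. Clipping after each operation keeps loud passages from overflowing the 16-bit range.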

The audio streaming add-ons contribute additional benefits:

- Voice Characteristics: Because the agent hears the customer’s original voice at a lower volume, they can pick up cues about the customer’s tone, speed, and emotional state.

- Quality Assurance: Call recordings include the customer’s and agent’s original voices, in addition to the translated speech, which enables QA teams to assess conversations more effectively.

- Transcription and Translation Accuracy: Listening to the original and translated speech within the recordings makes it easier to refine both transcription (using custom vocabularies in Amazon Transcribe) and translation accuracy (using custom terminologies in Amazon Translate).
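
On the translation side, custom terminologies are how recurring terms (product names, for example) get a fixed translation. The sketch below builds a terminology file in Amazon Translate's CSV format (a header row of language codes, then one row per term) and shows the boto3 `import_terminology` and `translate_text` calls that upload and apply it. The helper names, the terminology name, and the example terms are hypothetical; Transcribe custom vocabularies are managed separately and are not shown.

```python
import csv
import io
from typing import Any, Mapping


def terminology_csv(source_lang: str, target_lang: str,
                    terms: Mapping[str, str]) -> bytes:
    """Build an Amazon Translate custom terminology file in CSV format.

    The header row holds the language codes; each following row maps a
    source term to the translation that should always be used.
    """
    buf = io.StringIO()
    writer = csv.writer(buf, lineterminator="\n")
    writer.writerow([source_lang, target_lang])
    for source_term, target_term in terms.items():
        writer.writerow([source_term, target_term])
    return buf.getvalue().encode("utf-8")


def upload_terminology(translate: Any, name: str, data: bytes) -> None:
    """Create or replace a terminology (translate is e.g. boto3.client("translate"))."""
    translate.import_terminology(
        Name=name,
        MergeStrategy="OVERWRITE",
        TerminologyData={"File": data, "Format": "CSV"},
    )


def translate_with_terms(translate: Any, text: str, source_lang: str,
                         target_lang: str, name: str) -> str:
    """Translate text while applying the named custom terminology."""
    return translate.translate_text(
        Text=text,
        SourceLanguageCode=source_lang,
        TargetLanguageCode=target_lang,
        TerminologyNames=[name],
    )["TranslatedText"]
```

Mapping a term to itself (for example `{"Amazon Connect": "Amazon Connect"}`) is a simple way to keep a product name untranslated; recordings that contain both original and translated speech help you spot which terms need entries.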

Getting Started with Amazon Connect V2V

Ready to transform your contact center’s multilingual communication? The Amazon Connect V2V sample project is available on GitHub, and we encourage you to explore and experiment with this powerful prototype.

You can build innovative multilingual communication solutions in your contact center by following these steps:

- Clone the GitHub repository.

- Test different configurations for the audio streaming add-ons.

- Review the sample project’s limitations in the README documentation.

- Develop custom implementation strategies:

  - Implement organization-specific security and compliance controls.

  - Collaborate with your customer experience team to establish use case requirements.

  - Balance automation with the agent’s manual controls (for example, use an Amazon Connect contact flow to set contact attributes for preferred languages and audio streaming add-ons).

  - Use your preferred transcription, translation, and speech synthesis engines, based on specific language support needs and business, legal, and regional preferences.

  - Plan a phased rollout, starting with a pilot group, and then iteratively optimize transcription custom vocabularies and translation custom terminologies.
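
To illustrate the contact flow approach in the list above, the sketch below turns contact attributes into per-call translation settings. Contact attribute values are strings, so flags and numbers are parsed explicitly and malformed values fall back to defaults. Every attribute name (`v2v_customer_language` and so on), default, and field here is hypothetical; use whatever names your own contact flow sets.

```python
import math
from dataclasses import dataclass
from typing import Mapping


@dataclass(frozen=True)
class TranslationSettings:
    """Hypothetical per-contact settings for the V2V add-ons."""
    customer_language: str = "en"
    agent_language: str = "en"
    audio_feedback: bool = True
    original_voice_gain: float = 0.2


def settings_from_attributes(attrs: Mapping[str, str]) -> TranslationSettings:
    """Map string contact attributes (hypothetical names) to translation settings."""
    defaults = TranslationSettings()

    def flag(key: str, default: bool) -> bool:
        value = attrs.get(key)
        if value is None:
            return default
        return value.strip().lower() in {"true", "yes", "1", "on"}

    def gain(key: str, default: float) -> float:
        try:
            value = float(attrs[key])
        except (KeyError, ValueError):
            return default
        if not math.isfinite(value):
            return default
        return min(max(value, 0.0), 1.0)  # keep the volume factor within [0, 1]

    return TranslationSettings(
        customer_language=attrs.get("v2v_customer_language", defaults.customer_language),
        agent_language=attrs.get("v2v_agent_language", defaults.agent_language),
        audio_feedback=flag("v2v_audio_feedback", defaults.audio_feedback),
        original_voice_gain=gain("v2v_original_voice_gain", defaults.original_voice_gain),
    )
```

Keeping the defaults in one place means a contact flow only needs to set the attributes it wants to override, while the agent's manual controls can still change the settings during the call.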

Conclusion

The Amazon Connect V2V sample project effectively demonstrates how Amazon Connect and advanced AWS AI services can reduce language barriers, improve operational flexibility, and lower support costs. Get started now and revolutionize how your contact center communicates across different languages!

About the Authors:

EJ Ferrell is a Senior Solutions Architect at AWS.