Building a Robust RAG Architecture on AWS

In the age of generative AI, data isn’t just important; it’s the foundation upon which powerful applications are built. This article examines the technical intricacies of a Retrieval-Augmented Generation (RAG) implementation, showcasing a well-rounded architecture designed for environments with strict security and compliance requirements, such as those found in government and highly regulated sectors.

The success of any RAG implementation hinges on the quality, accessibility, and organization of its underlying data. Building a robust data infrastructure using AWS services like Amazon Bedrock, Amazon SageMaker AI, Amazon Kendra, and Amazon DynamoDB in AWS GovCloud (US) creates the essential backbone for effective information retrieval and generation. This deep dive explores how organizations such as Anduril can architect their RAG implementations to harness the full potential of their data assets while maintaining stringent security and compliance standards.

AWS GovCloud (US) Foundation

At the core of the architecture described in this article is AWS GovCloud (US), a cloud environment designed to handle sensitive data and meet the stringent compliance demands of government agencies. It supports compliance with:

- Federal Risk and Authorization Management Program (FedRAMP) High

- Department of Defense (DoD) Cloud Computing (CC) Security Requirements Guide (SRG) Impact Level 5

- United States International Traffic in Arms Regulations (ITAR)

AWS GovCloud (US) also features physical separation from commercial AWS Regions, US person-only access controls, and enhanced security monitoring. This foundation enables secure operation while retaining cloud benefits such as agility and scalability, so organizations can adopt RAG technology without compromising data security.

RAG Architecture Components

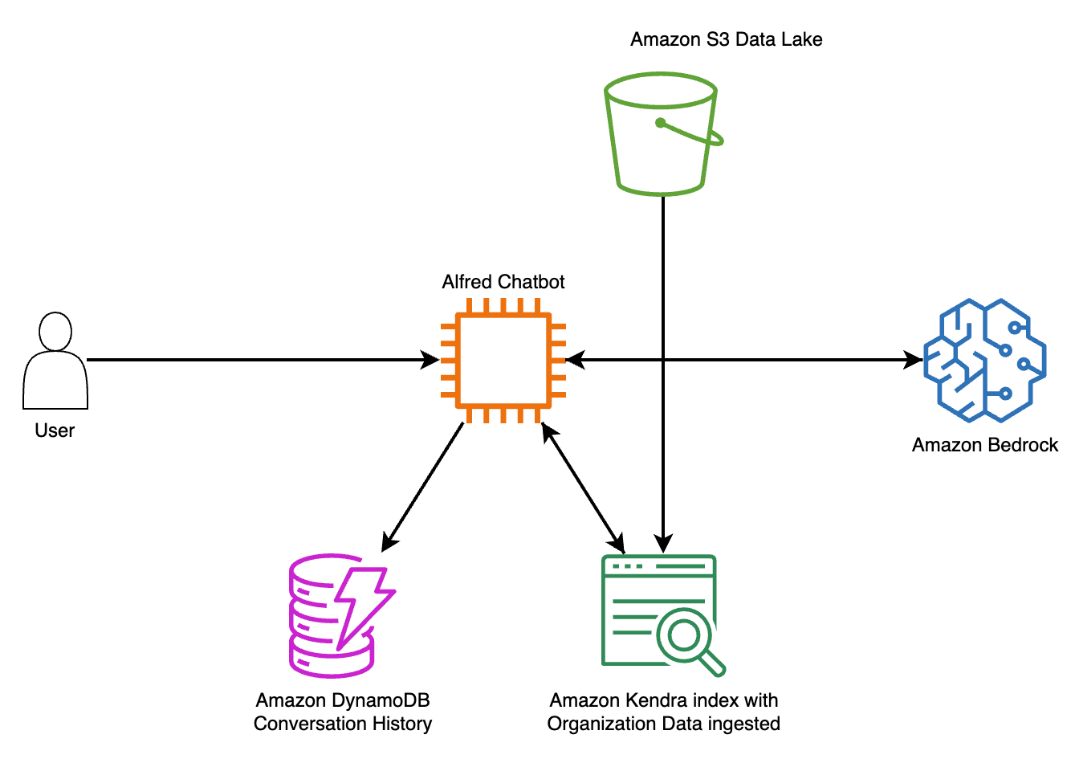

The RAG implementation detailed in this article is designed to deliver accurate and contextually relevant responses. It consists of two key components that work in tandem:

Retrieval Component:

- This component uses Amazon Kendra as its intelligent search service. Kendra offers natural language processing (NLP) capabilities and machine learning (ML)-powered relevance ranking, and it supports a variety of data sources and formats for effective information retrieval.

- Amazon DynamoDB provides millisecond response times for data retrieval and automatic scaling to handle varying workloads. This ensures quick access to the data needed for accurate responses.
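To make the retrieval step concrete, here is a minimal sketch that calls the Amazon Kendra Retrieve API and turns the results into numbered context passages. It is an illustration under stated assumptions, not the implementation behind this architecture: the function names, the index ID, and the `top_k` default are hypothetical, and the client is passed in so the logic can be exercised without AWS credentials. DynamoDB is left out because the article does not specify what is stored there.

```python
from typing import Any, Dict, List


def retrieve_passages(kendra_client: Any, index_id: str, question: str,
                      top_k: int = 5) -> List[Dict[str, str]]:
    """Call the Kendra Retrieve API and return simplified passage records."""
    response = kendra_client.retrieve(
        IndexId=index_id,      # hypothetical index ID supplied by the caller
        QueryText=question,
        PageSize=top_k,        # number of passages to return per page
    )
    passages = []
    for item in response.get("ResultItems", []):
        passages.append({
            "title": item.get("DocumentTitle", ""),
            "uri": item.get("DocumentURI", ""),
            "text": item.get("Content", ""),
        })
    return passages


def format_context(passages: List[Dict[str, str]]) -> str:
    """Number the passages so a model can cite them as [1], [2], and so on."""
    blocks = []
    for number, passage in enumerate(passages, start=1):
        blocks.append(f"[{number}] {passage['title']}\n{passage['text']}")
    return "\n\n".join(blocks)


# Example wiring (requires boto3 and AWS credentials; not executed here):
#   import boto3
#   kendra = boto3.client("kendra", region_name="us-gov-west-1")
#   context = format_context(retrieve_passages(kendra, "example-index-id", "..."))
```

Numbering the passages is a design choice that makes it easier to ask the model for citations and to trace an answer back to its source document.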

Generation Component:

The generation component of this architecture is built on Amazon Bedrock, which hosts and manages the large language models that provide its language processing capabilities. Available models include:

- Claude 3.5 Sonnet v2

- Llama 3.3 70B

- Mixtral 8x7B

This RAG implementation is designed for future flexibility, capable of accommodating additional models as they become available in AWS GovCloud (US).
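The generation step can be sketched with the Amazon Bedrock Converse API, which uses one request shape across models and so fits the goal of swapping models later. This is a hedged example rather than the actual implementation: the prompt wording, function names, and `example-model-id` are assumptions, and the instructions are placed in the user turn because support for a separate system prompt can vary by model.

```python
from typing import Any, Dict

# Hypothetical instruction text; tune it for the real use case.
INSTRUCTIONS = (
    "Answer using only the numbered context passages. "
    "If the context does not contain the answer, say so."
)


def build_converse_request(model_id: str, question: str, context: str,
                           max_tokens: int = 512) -> Dict[str, Any]:
    """Build keyword arguments for the bedrock-runtime converse() call."""
    prompt = f"{INSTRUCTIONS}\n\nContext:\n{context}\n\nQuestion: {question}"
    return {
        "modelId": model_id,
        "messages": [{"role": "user", "content": [{"text": prompt}]}],
        "inferenceConfig": {"maxTokens": max_tokens, "temperature": 0.0},
    }


def generate_answer(bedrock_runtime: Any, model_id: str, question: str,
                    context: str) -> str:
    """Send the grounded prompt and join the text parts of the reply."""
    request = build_converse_request(model_id, question, context)
    response = bedrock_runtime.converse(**request)
    parts = response["output"]["message"]["content"]
    return "".join(part.get("text", "") for part in parts)


# Example wiring (requires boto3 and AWS credentials; not executed here):
#   import boto3
#   runtime = boto3.client("bedrock-runtime", region_name="us-gov-west-1")
#   answer = generate_answer(runtime, "example-model-id", "question", "context")
```

Because the model ID is a plain parameter, moving to a newly available model should mostly be a configuration change, provided the new model accepts the same request fields.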

Robust Data Processing Pipeline

A sophisticated data processing pipeline helps to maintain a current and accurate knowledge base. This pipeline includes:

- Amazon S3 acts as a central data lake for raw documents with versioning and lifecycle management capabilities.

- Amazon Transcribe enables accurate transcription of audio and video content.

- AWS Lambda functions handle document ingestion, preprocessing, and text extraction.

- The indexing capabilities of Amazon Kendra provide automatic content classification, entity recognition, and semantic understanding.
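As an illustration of how an ingestion Lambda function might route new uploads, the sketch below reads an S3 event notification and decides whether each object should go to transcription or text extraction. The extension lists, route names, and handler behavior are assumptions made for this example; a real handler would go on to start an Amazon Transcribe job or run a text extractor instead of only returning the routing decision.

```python
import os
from typing import Any, Dict, List, Tuple
from urllib.parse import unquote_plus

# Hypothetical extension lists; adjust to the formats the pipeline accepts.
AUDIO_VIDEO_EXTENSIONS = {".mp3", ".mp4", ".wav", ".flac", ".m4a"}
TEXT_EXTENSIONS = {".pdf", ".docx", ".txt", ".html"}


def objects_from_s3_event(event: Dict[str, Any]) -> List[Tuple[str, str]]:
    """Extract (bucket, key) pairs from an S3 event notification."""
    objects = []
    for record in event.get("Records", []):
        bucket = record["s3"]["bucket"]["name"]
        # Object keys arrive URL-encoded in S3 events, so decode them.
        key = unquote_plus(record["s3"]["object"]["key"])
        objects.append((bucket, key))
    return objects


def choose_route(key: str) -> str:
    """Pick a processing route from the file extension."""
    extension = os.path.splitext(key)[1].lower()
    if extension in AUDIO_VIDEO_EXTENSIONS:
        return "transcribe"
    if extension in TEXT_EXTENSIONS:
        return "extract_text"
    return "skip"


def lambda_handler(event: Dict[str, Any], context: Any) -> List[Dict[str, str]]:
    """Return one routing decision per uploaded object."""
    return [
        {"bucket": bucket, "key": key, "route": choose_route(key)}
        for bucket, key in objects_from_s3_event(event)
    ]
```

Keeping the routing decision in a small pure function makes it easy to unit test and to extend when new content types are added to the data lake.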

This comprehensive strategy ensures that the knowledge base stays current, accurate, and readily accessible, which is essential to the application’s usefulness.

Monitoring, Security, and Compliance

Comprehensive monitoring is provided through Amazon CloudWatch, which offers real-time performance metrics, custom dashboards, and automated alerts. Security is implemented in multiple layers, including AWS Identity and Access Management (IAM) for role-based access control and AWS Key Management Service (AWS KMS) for centralized encryption key management.
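To show what role-based access control could look like for the query path, here is a sketch that builds a least-privilege IAM policy document for a role that retrieves from Kendra, invokes a Bedrock model, and decrypts with a KMS key. The statement IDs, the choice of actions, and every identifier are assumptions for illustration. One detail worth noting is that ARNs in AWS GovCloud (US) use the `aws-us-gov` partition rather than `aws`.

```python
import json
from typing import Any, Dict


def build_rag_policy(region: str, account_id: str, index_id: str,
                     model_id: str, kms_key_id: str,
                     partition: str = "aws-us-gov") -> Dict[str, Any]:
    """Build a least-privilege policy document (all identifiers hypothetical)."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "RetrieveFromKendraIndex",
                "Effect": "Allow",
                "Action": ["kendra:Retrieve"],
                "Resource": f"arn:{partition}:kendra:{region}:{account_id}:index/{index_id}",
            },
            {
                "Sid": "InvokeFoundationModel",
                "Effect": "Allow",
                "Action": ["bedrock:InvokeModel"],
                # Foundation model ARNs have an empty account field.
                "Resource": f"arn:{partition}:bedrock:{region}::foundation-model/{model_id}",
            },
            {
                "Sid": "DecryptWithKmsKey",
                "Effect": "Allow",
                "Action": ["kms:Decrypt"],
                "Resource": f"arn:{partition}:kms:{region}:{account_id}:key/{kms_key_id}",
            },
        ],
    }


def policy_to_json(policy: Dict[str, Any]) -> str:
    """Serialize the policy for use with IAM tooling."""
    return json.dumps(policy, indent=2)
```

Scoping each statement to a single resource ARN, instead of using a wildcard, keeps the role limited to the one index, model, and key it needs.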

Responsible AI

The AWS approach to responsible AI is a framework built on eight pillars designed to foster ethical and trustworthy AI development. The framework emphasizes safety and fairness through tools such as Amazon SageMaker Clarify, which organizations use to detect and mitigate potential biases in machine learning models. A key component of this framework is Amazon Bedrock Guardrails, which helps block harmful content and filter out responses that are not grounded in the source data.
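A guardrail can be attached to a model call through the `guardrailConfig` field of the Converse API. The sketch below is one hedged way to do that and to detect when the guardrail stepped in; the function name, guardrail ID, and version are hypothetical, and the guardrail itself is assumed to have been created and configured separately.

```python
from typing import Any, Dict


def converse_with_guardrail(bedrock_runtime: Any, model_id: str, prompt: str,
                            guardrail_id: str, guardrail_version: str) -> Dict[str, Any]:
    """Call converse() with a guardrail attached and report whether it intervened."""
    response = bedrock_runtime.converse(
        modelId=model_id,
        messages=[{"role": "user", "content": [{"text": prompt}]}],
        guardrailConfig={
            "guardrailIdentifier": guardrail_id,   # hypothetical guardrail ID
            "guardrailVersion": guardrail_version,
        },
    )
    # The API reports this stop reason when the guardrail blocks or alters content.
    blocked = response.get("stopReason") == "guardrail_intervened"
    parts = response["output"]["message"]["content"]
    text = "".join(part.get("text", "") for part in parts)
    return {"blocked": blocked, "text": text}
```

Returning the `blocked` flag alongside the text lets the calling application log interventions or route them to human review instead of silently showing the guardrail's replacement message.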

Other aspects of the responsible AI framework include:

- Privacy and security implemented through encryption, access controls, and the AWS shared responsibility model.

- Transparency and explainability, promoting user understanding of decision-making with features such as chain-of-thought reasoning.

- Strong governance measures through tools such as SageMaker Role Manager and SageMaker Model Cards.

- Veracity through RAG and human-in-the-loop capabilities.

- Controllability to make sure that organizations maintain oversight with comprehensive monitoring and auditing tools.

Best Practices for Implementation

Organizations looking to implement a RAG solution should follow these practices:

- Define a Clear Use Case: Begin with a clearly defined use case, specifying business objectives and target users.

- Prioritize Data Quality: Focus on data quality through robust validation and consistent formatting.

- Establish Security Assessments: Conduct regular security assessments and maintain ongoing compliance monitoring.

- Continuously Optimize: Maintain continuous performance tracking and optimization.
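As one possible reading of the data quality practice above, the sketch below normalizes whitespace, rejects documents that are too short to be useful, and drops exact duplicates before indexing. The length threshold, the record shape with `id` and `text` keys, and the rejection labels are all assumptions chosen for this example.

```python
import hashlib
import re
from typing import Any, Dict, List, Tuple

MIN_CHARACTERS = 50  # hypothetical threshold; tune for the real corpus


def normalize_text(raw: str) -> str:
    """Collapse runs of whitespace and trim the ends for consistent formatting."""
    return re.sub(r"\s+", " ", raw).strip()


def validate_documents(
    documents: List[Dict[str, Any]],
) -> Tuple[List[Dict[str, Any]], List[Tuple[Any, str]]]:
    """Split documents into accepted records and (id, reason) rejections."""
    accepted: List[Dict[str, Any]] = []
    rejected: List[Tuple[Any, str]] = []
    seen_digests = set()
    for doc in documents:
        text = normalize_text(doc.get("text", ""))
        digest = hashlib.sha256(text.encode("utf-8")).hexdigest()
        if len(text) < MIN_CHARACTERS:
            rejected.append((doc.get("id"), "too_short"))
        elif digest in seen_digests:
            rejected.append((doc.get("id"), "duplicate"))
        else:
            seen_digests.add(digest)
            accepted.append({"id": doc.get("id"), "text": text})
    return accepted, rejected
```

Recording a reason for each rejection gives the continuous optimization practice something to measure, for example the share of uploads rejected as duplicates over time.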

Conclusion

The successful implementation of RAG on AWS GovCloud (US) demonstrates how this architecture can deliver powerful AI capabilities while meeting the highest standards of security and compliance. As AI technology continues to evolve, organizations must balance innovation with responsible deployment. By following these practices, organizations can create AI solutions that not only meet their immediate needs but also position them for continued success.