In a significant development for the artificial intelligence (AI) sector, DeepSeek, an AI start-up company based in Hangzhou, China, has made waves with its innovative approach to model development.

DeepSeek’s Models: An Efficiency Revolution

DeepSeek’s recent achievements include the release of its large language model DeepSeek-V3 in December 2024. According to a technical report released by the DeepSeek team, this model outperformed OpenAI’s much-hyped large language model GPT-4o, released in May 2024, on many benchmarks. Even more striking is the reported cost of training DeepSeek-V3: it was trained on only 2,048 Nvidia H800 chips, at an estimated cost of about US$5.6 million. By the team’s own account, that figure covers only the final training run and excludes earlier research and ablation experiments.
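The US$5.6 million figure is a rental-price estimate rather than a measured expenditure: GPU-hours multiplied by an assumed hourly price. The short sketch below reproduces that arithmetic. The per-stage GPU-hour breakdown and the assumed rate of US$2 per H800 GPU-hour are taken from DeepSeek’s technical report as we read it; treat them as the report’s assumptions, not independently verified costs.

```python
# Reproduce the headline DeepSeek-V3 training-cost estimate from GPU-hours.
# Assumption: stage breakdown and US$2/GPU-hour rental rate as stated in
# DeepSeek's technical report (our reading); these are not measured costs.
RENTAL_USD_PER_GPU_HOUR = 2.0
N_GPUS = 2048

gpu_hours = {
    "pre-training": 2_664_000,
    "context extension": 119_000,
    "post-training": 5_000,
}

total_hours = sum(gpu_hours.values())
total_cost = total_hours * RENTAL_USD_PER_GPU_HOUR
# Wall-clock time if all 2,048 GPUs were busy in parallel the whole time.
wall_clock_days = total_hours / N_GPUS / 24

for stage, hours in gpu_hours.items():
    cost_m = hours * RENTAL_USD_PER_GPU_HOUR / 1e6
    print(f"{stage:>17}: {hours:>9,} GPU-h -> US${cost_m:.3f}M")
print(f"{'total':>17}: {total_hours:>9,} GPU-h -> US${total_cost / 1e6:.3f}M")
print(f"about {wall_clock_days:.0f} days on {N_GPUS:,} GPUs")
```

The wall-clock estimate assumes perfect utilization, so it is a lower bound on how long the run could have taken.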

This contrasts sharply with industry trends. For example, Meta’s Llama 3.1 405B model, launched in July 2024, was trained on more than 16,000 of the more advanced Nvidia H100 chips and is estimated to have cost over US$60 million to train. (The H800 graphics processing unit [GPU] is a modified version of the H100 GPU, tweaked by the US chipmaker Nvidia so that it could legally be exported to China.) This highlights a potential shift in the AI landscape, in which algorithmic innovation may matter as much as, or even more than, raw computing power.
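Taking the reported figures at face value, the gap can be expressed as two simple ratios. Because the Llama 3.1 numbers are given as lower bounds (“over”), both ratios are lower bounds too. The chip ratio also ignores that the H100 is the more advanced part, and the two cost figures were estimated in different ways, so this is only a rough comparison.

```latex
\frac{16{,}000\ \text{H100 chips}}{2{,}048\ \text{H800 chips}} \approx 7.8,
\qquad
\frac{\text{US\$60 million}}{\text{US\$5.6 million}} \approx 10.7
```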

Following DeepSeek-V3, the company released DeepSeek-R1, a reasoning model that offers performance comparable to the o1 models released by OpenAI in September 2024. These announcements, and the fact that such capabilities are apparently achievable at a fraction of the cost and computing power of other leading large language models, stunned many. For an industry increasingly focused on advancing AI by building more data centers (with more Nvidia chips), it was a reminder of the value of algorithmic innovation and of doing more with less. The news even sent technology stocks tumbling: Nvidia’s shares fell 17% in a single day, wiping approximately US$600 billion off its market value.
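As a rough consistency check, the two market figures together imply Nvidia’s market value just before the fall, written here as V_before (a label introduced only for this calculation). Both inputs are rounded, so the result is approximate.

```latex
V_{\text{before}} \approx \frac{\text{US\$600 billion}}{0.17} \approx \text{US\$3.5 trillion}
```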

These developments come as the AI industry grapples with the energy consumption of advanced AI models. Some have suggested that these new models could help; what is clear is that more efficient machine-learning models are needed, alongside greater transparency about energy use and carbon footprints. Ultimately, though, new energy-efficient hardware will also be required to sustain the development of AI.

The Promise of Memristors

One promising avenue for achieving greater energy efficiency is the development of memristive devices (or memristors). These devices can perform both information processing and memory functions, enabling the creation of analogue compute-in-memory systems. Such systems can be more energy-efficient than conventional digital systems for neural network computations, largely because they avoid repeatedly shuttling data between separate memory and processing units.
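The central idea behind analogue compute-in-memory is that a crossbar of memristors performs a matrix-vector multiplication where the weights are stored. Each device passes a current equal to its conductance times the applied voltage (Ohm’s law), and the currents on each column add up (Kirchhoff’s current law). The sketch below simulates an idealized crossbar in NumPy. The conductance window, the differential-pair encoding of signed weights and the function names are illustrative assumptions; real arrays add device noise, wire resistance and converter overheads that this model ignores.

```python
import numpy as np

# Assumed (illustrative) conductance window of each memristor, in siemens.
G_MIN, G_MAX = 1e-6, 1e-4

def weights_to_conductances(w):
    """Map signed weights onto a differential pair of non-negative conductance arrays."""
    scale = (G_MAX - G_MIN) / np.max(np.abs(w))
    g_pos = G_MIN + scale * np.clip(w, 0, None)   # positive parts
    g_neg = G_MIN + scale * np.clip(-w, 0, None)  # magnitudes of negative parts
    return g_pos, g_neg, scale

def crossbar_mvm(g_pos, g_neg, v):
    """Ideal crossbar read: I_j = sum_i G[i, j] * V[i] on each column, then subtract the pair."""
    i_pos = g_pos.T @ v
    i_neg = g_neg.T @ v
    return i_pos - i_neg  # G_MIN offsets cancel in the difference

rng = np.random.default_rng(0)
w = rng.normal(size=(4, 3))          # 4 input rows (word lines) x 3 output columns (bit lines)
v = rng.normal(scale=0.1, size=4)    # read voltages applied to the rows
g_pos, g_neg, scale = weights_to_conductances(w)
y = crossbar_mvm(g_pos, g_neg, v) / scale  # convert column currents back to weight units
print(np.allclose(y, w.T @ v))  # True
```

The multiply-accumulate happens in a single analogue read of all columns at once; no weight ever leaves the array, which is where the energy savings come from.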

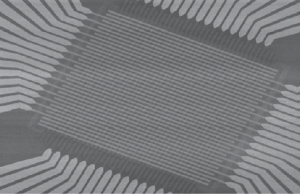

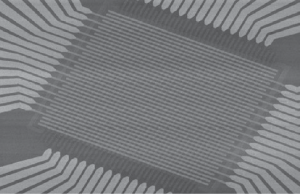

Scanning electron microscope image of a memristor array.

Recent research, as highlighted in Nature Electronics, showcases the potential of memristors. One article, for example, described an analogue system built on a memristor array that could be used for AI edge-computing applications. Researchers from several institutes in the Republic of Korea constructed the arrays from interfacial-type titanium oxide memristors, which exhibit high reliability and linearity. The system proved effective at real-time video processing: it separated video foreground from background without requiring prior training or compensation algorithms.
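“Linearity” here refers to how evenly a memristor’s conductance changes under a train of identical programming pulses. A linear device moves by the same step on every pulse, so a desired weight change maps directly to a pulse count. A nonlinear device takes large steps at first and small ones near saturation, which is one reason systems built on such devices often need compensation. The sketch below contrasts two hypothetical device models. The saturating-exponential form, the nonlinearity factor `a`, the conductance window and the pulse count are assumptions for illustration, not parameters of the titanium oxide devices in the study.

```python
import numpy as np

G_MIN, G_MAX = 1e-6, 1e-4  # assumed conductance window (siemens)
N_PULSES = 64              # assumed number of identical potentiation pulses

def linear_response(n):
    """Hypothetical ideal device: equal conductance step per pulse."""
    return G_MIN + (G_MAX - G_MIN) * n / N_PULSES

def nonlinear_response(n, a=3.0):
    """Hypothetical saturating device; `a` is an assumed nonlinearity factor."""
    return G_MIN + (G_MAX - G_MIN) * (1 - np.exp(-a * n / N_PULSES)) / (1 - np.exp(-a))

n = np.arange(N_PULSES + 1)
step_lin = np.diff(linear_response(n))
step_nl = np.diff(nonlinear_response(n))

# Ratio of largest to smallest per-pulse step: 1 means perfectly uniform updates.
print("linear step ratio:   ", step_lin.max() / step_lin.min())
print("nonlinear step ratio:", step_nl.max() / step_nl.min())

# The same 8 pulses from different starting states give equal or unequal changes.
for start in (0, 28, 56):
    d_lin = linear_response(start + 8) - linear_response(start)
    d_nl = nonlinear_response(start + 8) - nonlinear_response(start)
    print(start, d_lin, d_nl)
```

With the linear model every 8-pulse update moves the conductance by the same amount; with the nonlinear model the change depends on where the device starts, so an update rule must track device state or correct for it.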

In related work, researchers from several institutes in China used memristor arrays to build a hybrid analogue-digital compute-in-memory architecture for general neural network inference. The architecture provided native support for floating-point-based complex regression tasks and offered better accuracy and energy efficiency than purely analogue compute-in-memory systems.
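Purely analogue arrays struggle with high-precision arithmetic because each device holds only a few reliably distinguishable conductance levels. One common general strategy, not necessarily the one used in the reported architecture, is bit slicing: high-precision weights are split across several low-precision analogue arrays, and the partial results are recombined digitally by shifting and adding. The sketch below assumes 2-bit devices and 8-bit weight magnitudes, models each analogue read as an ideal matrix-vector product, and uses hypothetical function names throughout.

```python
import numpy as np

BITS_PER_SLICE = 2  # assumed: each device reliably stores 4 conductance levels
N_SLICES = 4        # 4 slices x 2 bits = 8-bit weight magnitudes

def quantize(w):
    """Symmetric quantization of float weights to integers in [-255, 255] plus a float scale."""
    scale = np.max(np.abs(w)) / 255
    q = np.round(w / scale).astype(np.int64)
    return q, scale

def bit_slices(mag):
    """Split non-negative integers into 2-bit slices, least significant slice first."""
    mask = (1 << BITS_PER_SLICE) - 1
    return [(mag >> (BITS_PER_SLICE * s)) & mask for s in range(N_SLICES)]

def analog_mvm(g, x):
    """Stand-in for one analogue crossbar read: an ideal, noise-free matrix-vector product."""
    return g @ x

def hybrid_mvm(w, x):
    """Analogue partial products on low-precision slices, recombined by digital shift-and-add."""
    q, scale = quantize(w)
    y = np.zeros(w.shape[0])
    # Signed weights: positive and negative magnitudes are held in separate arrays.
    for sign, mag in ((1, np.clip(q, 0, None)), (-1, np.clip(-q, 0, None))):
        for s, g in enumerate(bit_slices(mag)):
            y += sign * (1 << (BITS_PER_SLICE * s)) * analog_mvm(g, x)
    return y * scale

rng = np.random.default_rng(1)
w = rng.normal(size=(3, 5))
x = rng.normal(size=5)
q, scale = quantize(w)
y = hybrid_mvm(w, x)
print(np.allclose(y, (q @ x) * scale))  # True: slicing and recombination are exact
print(np.max(np.abs(y - w @ x)))        # small residual from 8-bit quantization
```

The digital side only performs shifts and additions, while the expensive multiply-accumulate work stays in the analogue arrays; the remaining error comes from quantization, not from the slicing itself.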

DeepSeek’s success, coupled with the advancements in memristor technology, suggests a future where AI development prioritizes both algorithmic ingenuity and energy efficiency. This shift could redefine the standards by which AI systems are measured, placing a greater emphasis on performance and efficiency than on sheer computational power.