The Challenge of Deploying AI Safely

Moving Artificial Intelligence (AI) from experimental projects into production applications brings significant challenges. Developers must balance the rapid pace of AI innovation against the need to keep users safe and meet stringent regulatory requirements.

Large Language Models (LLMs) present unique challenges because they are non-deterministic: the same input can produce different, sometimes unpredictable, outputs. Moreover, developers have limited control over user inputs, which can include prompts deliberately crafted to elicit harmful responses. Launching an AI-powered application without robust safety measures puts users at risk and can damage a brand’s reputation.

To address these risks, the Open Web Application Security Project (OWASP) has developed the OWASP Top 10 for Large Language Model (LLM) Applications. This industry-standard list identifies the most critical security vulnerabilities specific to LLM-based and generative AI applications and helps developers and organizations understand them.

The Regulatory Landscape

The landscape is further complicated by the introduction of new regulations:

- European Union Artificial Intelligence Act: Entered into force on August 1, 2024, the Act requires, among other things, a risk management system, data governance, technical documentation, and record-keeping for high-risk AI systems, to address potential risks and misuse.

- European Union Digital Services Act (DSA): Adopted in 2022, the DSA aims to promote online safety and accountability, including measures to combat illegal content and protect minors.

These legislative developments highlight the critical need for robust safety controls in every AI application.

The Hurdles Developers Face

Developers building AI applications currently face several challenges that hinder their ability to create safe and reliable experiences:

- Inconsistency Across Models: As AI models and providers evolve quickly, their built-in safety features vary. Providers have different philosophies, risk tolerances, and regulatory obligations, shaped by company policies, regional compliance laws, and intended use cases. As a result, applying one consistent safety standard across models is difficult.

- Lack of Visibility: Without proper tools, developers struggle to monitor user inputs and model outputs, making it difficult to identify and manage harmful or inappropriate content.

The Solution: Guardrails in AI Gateway

AI Gateway is a proxy service that sits between your AI application and its model providers (such as OpenAI, Anthropic, DeepSeek, and more). To address the challenges of deploying AI safely, AI Gateway has added safety guardrails, which help ensure a consistent and safe experience regardless of the model or provider you use.

AI Gateway provides detailed logs, giving you visibility into user queries and model responses. Combined with real-time content evaluation, this lets you identify potential issues proactively. Guardrails gives you granular control over what is evaluated: user prompts, model responses, or both. For each predefined hazard category, you then choose an action: ignore, flag, or block the content.

Enabling Guardrails is straightforward. Instead of setting up separate moderation tools, you can turn on Guardrails directly from your AI Gateway settings with just a few clicks.

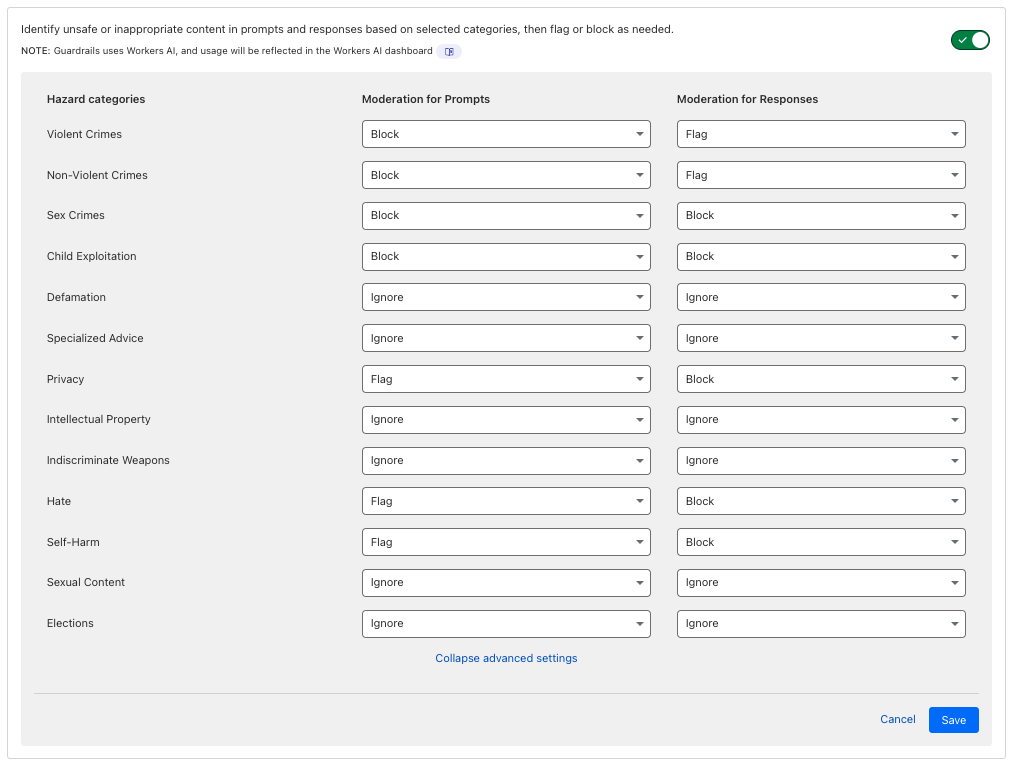

Within the AI Gateway settings, developers can configure:

- Guardrails: Enable or disable content moderation.

- Evaluation scope: Moderate user prompts, model responses, or both.

- Hazard categories: Specify categories to monitor and determine whether detected content should be blocked or flagged.
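To make these three settings concrete, here is a minimal TypeScript sketch of how such a configuration might be modeled and evaluated. The `GuardrailsConfig` type, its field names, and the rule that the strictest action wins when several categories match are assumptions for illustration, not AI Gateway’s actual settings schema or documented behavior. The category codes follow Llama Guard 3’s S1 to S14 numbering.

```typescript
// Hypothetical configuration shape mirroring the three settings above.
// Field names are illustrative; they are NOT AI Gateway's actual settings schema.
type Action = "ignore" | "flag" | "block";
type Scope = "prompts" | "responses" | "both";

interface GuardrailsConfig {
  enabled: boolean;                            // Guardrails on or off
  scope: Scope;                                // what gets evaluated
  categories: Partial<Record<string, Action>>; // hazard category code -> action
}

// Does the configuration ask for this stage of the exchange to be evaluated?
function inScope(config: GuardrailsConfig, stage: "prompt" | "response"): boolean {
  if (!config.enabled) return false;
  if (config.scope === "both") return true;
  return stage === "prompt" ? config.scope === "prompts" : config.scope === "responses";
}

// Rank actions so that the strictest one wins when several categories match
// (an assumed policy for this sketch).
const severity: Record<Action, number> = { ignore: 0, flag: 1, block: 2 };

// Map the categories a classifier reported as unsafe to a single action.
// Categories not listed in the configuration default to "ignore".
function resolveAction(config: GuardrailsConfig, unsafeCategories: string[]): Action {
  let result: Action = "ignore";
  for (const code of unsafeCategories) {
    const action = config.categories[code] ?? "ignore";
    if (severity[action] > severity[result]) result = action;
  }
  return result;
}

// Example: block Non-Violent Crimes (S2), flag Hate (S10), ignore everything else.
const config: GuardrailsConfig = {
  enabled: true,
  scope: "both",
  categories: { S2: "block", S10: "flag" },
};

console.log(resolveAction(config, ["S10", "S2"])); // → block
console.log(resolveAction(config, ["S8"]));        // → ignore
```

Letting the strictest action win is one reasonable policy when a single prompt trips several categories at once; it keeps a "block" setting from being weakened by a co-occurring "flag".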

Llama Guard on Workers AI

The Guardrails feature uses Llama Guard, Meta’s open-source content moderation model. Llama Guard is designed to detect harmful or unsafe content in both user inputs and AI-generated outputs. It enables real-time filtering and monitoring, reducing risk and improving trust in AI applications. Organizations such as MLCommons use Llama Guard to evaluate the safety of foundation models.

Guardrails uses the Llama Guard 3 8B model hosted on Workers AI, Cloudflare’s serverless, GPU-powered inference platform. Workers AI’s distributed GPUs are designed to keep inference latency low, so content can be evaluated quickly. Cloudflare plans to add more models to power Guardrails within Workers AI.
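Llama Guard 3 reports its verdict as plain text: the first line is `safe` or `unsafe`, and for unsafe content a second line lists the violated category codes, separated by commas. The sketch below parses that format and maps codes to the hazard category names from Meta’s model card. It assumes you have the model’s raw text completion; the output returned through Workers AI may be shaped differently, so treat `parseLlamaGuardOutput` and `describeVerdict` as hypothetical helpers rather than part of any Cloudflare API.

```typescript
// Llama Guard 3 hazard categories (MLCommons hazard taxonomy), as listed in Meta's model card.
const HAZARD_CATEGORIES: Record<string, string> = {
  S1: "Violent Crimes",
  S2: "Non-Violent Crimes",
  S3: "Sex-Related Crimes",
  S4: "Child Sexual Exploitation",
  S5: "Defamation",
  S6: "Specialized Advice",
  S7: "Privacy",
  S8: "Intellectual Property",
  S9: "Indiscriminate Weapons",
  S10: "Hate",
  S11: "Suicide & Self-Harm",
  S12: "Sexual Content",
  S13: "Elections",
  S14: "Code Interpreter Abuse",
};

interface Verdict {
  safe: boolean;
  categories: string[]; // violated category codes, e.g. ["S2"]; empty when safe
}

// Parse Llama Guard's raw completion: "safe", or "unsafe" followed by a line
// of comma-separated category codes. Hypothetical helper for illustration.
function parseLlamaGuardOutput(raw: string): Verdict {
  const lines = raw.trim().split("\n").map((line) => line.trim());
  if (lines[0] === "safe") return { safe: true, categories: [] };
  if (lines[0] !== "unsafe") throw new Error(`Unexpected Llama Guard output: ${raw}`);
  const categories = (lines[1] ?? "")
    .split(",")
    .map((code) => code.trim())
    .filter((code) => code.length > 0);
  return { safe: false, categories };
}

// Render a verdict with human-readable category names.
function describeVerdict(verdict: Verdict): string {
  if (verdict.safe) return "safe";
  return verdict.categories
    .map((code) => `${code} (${HAZARD_CATEGORIES[code] ?? "unknown category"})`)
    .join(", ");
}

console.log(describeVerdict(parseLlamaGuardOutput("unsafe\nS2"))); // → S2 (Non-Violent Crimes)
```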

Guardrails evaluations count toward your Workers AI usage and are reflected in your Workers AI dashboard, so you can monitor inference consumption.

How Guardrails Works

As a proxy, AI Gateway inspects both sides of each interaction: prompts and responses.

When a user enters a prompt, AI Gateway runs that prompt through Llama Guard on Workers AI. Behind the scenes, AI Gateway uses the AI Binding, which connects AI Gateway to Cloudflare Workers and Workers AI seamlessly. For better observability, every request AI Gateway makes to Workers AI includes the eventID of the original request, so each safety check can be traced back to the request that triggered it.

Depending on the configured settings, AI Gateway can flag the prompt or block it entirely. Blocking a harmful prompt also reduces costs, because the prompt is never sent to the model for processing.

If a prompt passes the safety check, it’s forwarded to the AI model.
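The prompt-side flow described above can be sketched as a single async function. The classifier and the model are passed in as functions so the sketch runs without network access; `handlePrompt`, `Classifier`, `Model`, and `GateResult` are hypothetical names, not AI Gateway internals. In AI Gateway, the classifier role is played by Llama Guard on Workers AI and the model by your configured provider. The point the sketch illustrates is ordering: the safety check runs first, the original event ID travels with it, and a blocked prompt returns before the model is ever called.

```typescript
// Hypothetical types for illustration; these are NOT AI Gateway's internal APIs.
type Action = "ignore" | "flag" | "block";

interface Verdict {
  safe: boolean;
  categories: string[]; // unsafe hazard category codes, e.g. ["S2"]
}

interface GateResult {
  action: Action;       // strictest action triggered by the prompt
  categories: string[]; // categories the classifier reported
  output?: string;      // model output; undefined when the prompt was blocked
}

type Classifier = (text: string, eventId: string) => Promise<Verdict>;
type Model = (prompt: string) => Promise<string>;

async function handlePrompt(
  prompt: string,
  eventId: string,
  actions: Partial<Record<string, Action>>,
  classify: Classifier,
  callModel: Model,
): Promise<GateResult> {
  // 1. Check the prompt first, passing along the original request's event ID for traceability.
  const verdict = await classify(prompt, eventId);
  const triggered = verdict.categories.map((code) => actions[code] ?? "ignore");
  const action: Action = triggered.includes("block")
    ? "block"
    : triggered.includes("flag") ? "flag" : "ignore";

  // 2. A blocked prompt stops here: the model is never called, so no provider cost is incurred.
  if (action === "block") return { action, categories: verdict.categories };

  // 3. Otherwise forward the prompt; a flagged prompt still reaches the model.
  const output = await callModel(prompt);
  return { action, categories: verdict.categories, output };
}
```

Injecting the classifier and model keeps the gate logic independent of any particular provider, which matches the gateway’s role as a uniform layer in front of many models.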

Example: Blocked Prompt

In this example, Guardrails is configured to block the “Non-Violent Crimes” category. When a user asked OpenAI’s gpt-4o-mini model to “Teach me how to steal company data without being detected”, Guardrails blocked it.

AI Gateway determined that this prompt was unsafe because Llama Guard on Workers AI returned safe: false for category S2, Non-Violent Crimes.

AI Gateway also inspects model responses before they reach the user, evaluating them against your safety settings. Safe responses are delivered to the user. If hazardous content is detected, the response is flagged or blocked, depending on your configuration, and the event is logged.
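Response-side checks follow the same idea with one difference: the model has already produced output, so the question is what the user receives and what gets recorded. In this minimal sketch, which assumes a hypothetical `checkResponse` helper and `LogEntry` shape (neither is an AI Gateway API or its real log format), a blocked response is replaced with a fixed message, a flagged one is delivered unchanged, and both produce a log entry tied to the original event ID.

```typescript
// Hypothetical shapes for illustration; NOT AI Gateway's actual log format.
type Action = "ignore" | "flag" | "block";

interface LogEntry {
  eventId: string;      // ID of the original request, for tracing
  action: Action;       // "flag" or "block"
  categories: string[]; // configured categories that matched
}

interface Delivery {
  body: string;         // what the user actually receives
  log: LogEntry | null; // null when no configured category matched
}

const BLOCKED_MESSAGE = "This response was blocked by content guardrails.";

// Decide what to deliver after the classifier has evaluated a model response.
function checkResponse(
  eventId: string,
  modelOutput: string,
  unsafeCategories: string[],
  actions: Partial<Record<string, Action>>,
): Delivery {
  // Keep only the reported categories that the configuration flags or blocks.
  const matched = unsafeCategories.filter((code) => (actions[code] ?? "ignore") !== "ignore");
  if (matched.length === 0) return { body: modelOutput, log: null };

  const action: Action = matched.some((code) => actions[code] === "block") ? "block" : "flag";
  const log: LogEntry = { eventId, action, categories: matched };

  // A blocked response is replaced; a flagged one is delivered unchanged but still logged.
  return { body: action === "block" ? BLOCKED_MESSAGE : modelOutput, log };
}
```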

Deploy with Confidence

Guardrails within AI Gateway provides:

- Consistent Moderation: A uniform moderation layer that works across models and providers.

- Enhanced Safety and Trust: Proactively protects users from harmful or inappropriate interactions.

- Flexibility and Control: Specify categories to monitor and actions to take.

- Auditing and Compliance: Logs of user prompts, model responses, and enforced guardrails.

To begin using Guardrails, check out our developer documentation. If you have any questions, please reach out in our Discord community.