The Imperative for Feedback in AI-Driven Healthcare

Advancements in artificial intelligence (AI) are rapidly transforming the healthcare landscape, offering the potential to improve patient care and streamline processes. However, as AI-enabled digital health technologies become more prevalent, it is critical to address patient safety and ensure the responsible development and deployment of these tools. One crucial aspect is the integration of transparent, mandatory feedback-collection mechanisms.

Because AI algorithms learn and adapt based on data, their performance must be continuously monitored and evaluated in real-world settings. Real-time feedback from users, including patients and healthcare providers, provides valuable insights into a technology’s effectiveness, usability, and potential adverse effects. This feedback loop is essential for identifying and mitigating risks, improving the user experience, and iteratively refining the technology to better meet clinical needs.

Transparent and mandatory feedback-collection mechanisms are essential within AI-enabled digital health technologies.

The Need for Regulatory Action

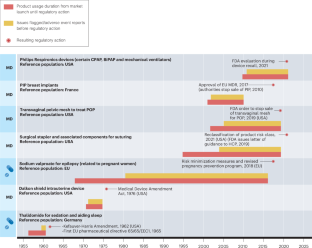

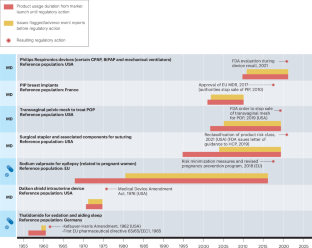

Historically, medical devices and medications have faced regulatory scrutiny after failures that resulted in patient harm. Such incidents underscore the importance of robust safety measures and post-market surveillance. Integrating user feedback into the design and operation of AI-driven tools helps give regulatory agencies and developers the data they need to evaluate and improve performance.

Building Feedback into the System

Open feedback data from users provides crucial information about how an AI tool performs once it is integrated into care. Collecting it enables continuous learning and adaptation of AI systems. By analyzing feedback data, developers can identify areas for improvement, address usability issues, and optimize the technology for better patient outcomes. This iterative process helps the AI tool evolve in response to real-world usage, becoming safer and more effective over time.
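To make the analysis step concrete, the following is a minimal Python sketch of how collected feedback might be summarized. It is an illustration under stated assumptions, not a description of any particular product or regulatory requirement: the `FeedbackRecord` fields, the category names, and the rule that every `adverse_effect` report is escalated for safety review are all hypothetical.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class FeedbackRecord:
    """One piece of user feedback (all fields are illustrative assumptions)."""
    user_role: str  # e.g. "patient" or "provider"
    category: str   # e.g. "usability", "effectiveness", "adverse_effect"
    comment: str


def summarize_feedback(records):
    """Count feedback per category and collect reports that need safety review."""
    counts = Counter(r.category for r in records)
    # Assumption: anything tagged "adverse_effect" is escalated for review.
    flagged = [r for r in records if r.category == "adverse_effect"]
    return counts, flagged


# Hypothetical sample data, invented purely for illustration.
records = [
    FeedbackRecord("patient", "usability", "Hard to find the results page."),
    FeedbackRecord("provider", "adverse_effect", "Suggested dose looked too high."),
    FeedbackRecord("provider", "usability", "Too many confirmation dialogs."),
]

counts, flagged = summarize_feedback(records)
for category, n in counts.most_common():
    print(f"{category}: {n}")
print(f"flagged for safety review: {len(flagged)}")
```

In a sketch like this, the category counts point developers toward the most frequently reported problem areas, while the flagged list separates potential safety issues so they can be reviewed individually rather than lost in aggregate statistics.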

Conclusion

As AI continues to permeate the healthcare environment, safeguarding patients and promoting responsible innovation are paramount. Embedding transparent and mandatory feedback mechanisms into AI-enabled digital health tools is essential for achieving these goals. Such practices not only allow for the identification and mitigation of risks but also foster a culture of continuous improvement, ultimately leading to safer, more effective, and patient-centered healthcare technologies.