Meta FAIR Advances Human-Like AI with Five Major Releases

Meta’s Fundamental AI Research (FAIR) team has announced five projects that advance the company’s pursuit of advanced machine intelligence (AMI). The releases focus heavily on AI perception, alongside developments in language modeling, robotics, and collaborative AI agents.

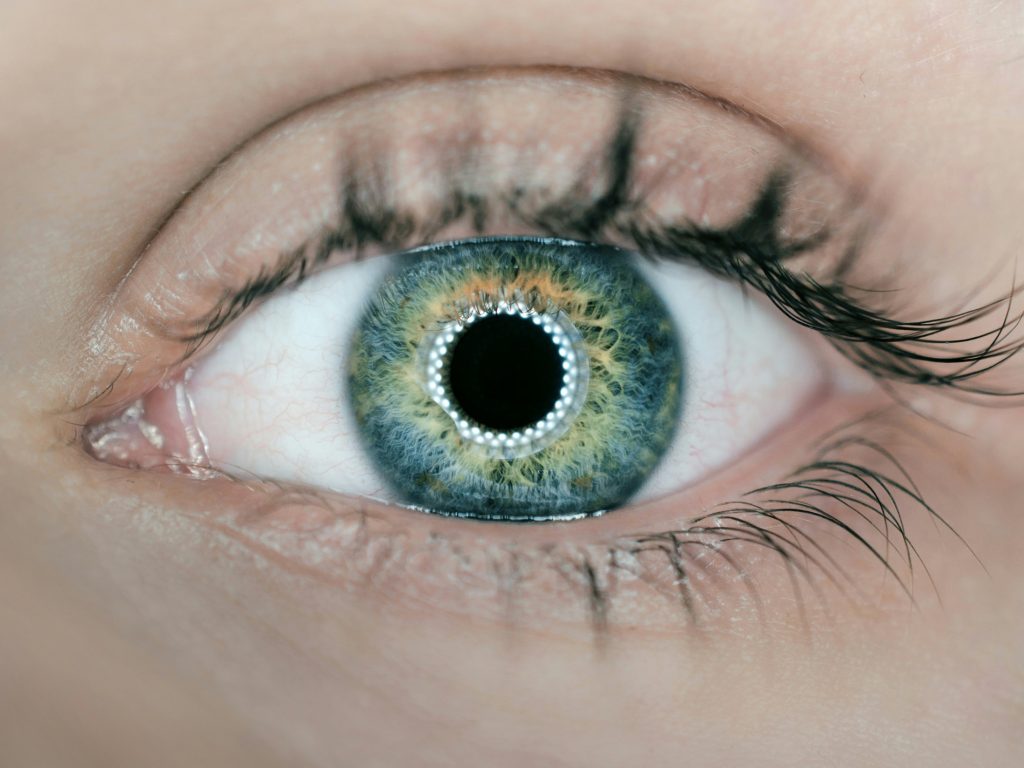

Enhancing AI Perception with Perception Encoder

At the core of these new releases is the Perception Encoder, a large-scale vision encoder designed to excel across various image and video tasks. This technology functions as the “eyes” for AI systems, enabling them to understand visual data more effectively. Meta notes that building encoders that meet the demands of advanced AI is increasingly difficult: they must bridge vision and language, handle both images and videos, and remain robust under challenging conditions.

The Perception Encoder reportedly achieves “exceptional performance on image and video zero-shot classification and retrieval, surpassing all existing open source and proprietary models for such tasks.” Its perceptual strengths also translate well to language tasks, outperforming other vision encoders in areas like visual question answering and document understanding when aligned with a large language model (LLM).
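The article does not describe how the Perception Encoder performs zero-shot classification. A common approach for encoders that bridge vision and language, assumed here for illustration, is to embed the image and a text prompt for each candidate label into the same vector space and pick the label with the highest cosine similarity. The sketch below uses made-up three-dimensional vectors in place of real encoder outputs; the function names and prompts are hypothetical.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def zero_shot_classify(image_embedding, label_embeddings):
    """Return the label whose text embedding is closest to the image embedding.

    No classifier is trained: the candidate labels are supplied at query time.
    """
    scores = {
        label: cosine(image_embedding, embedding)
        for label, embedding in label_embeddings.items()
    }
    best_label = max(scores, key=scores.get)
    return best_label, scores


# Toy embeddings standing in for real text-encoder outputs (assumption).
label_embeddings = {
    "a photo of a cat": [0.9, 0.1, 0.0],
    "a photo of a dog": [0.1, 0.9, 0.0],
    "a photo of a car": [0.0, 0.1, 0.9],
}

# Toy embedding standing in for a real image-encoder output (assumption).
image_embedding = [0.8, 0.2, 0.1]

best_label, scores = zero_shot_classify(image_embedding, label_embeddings)
print(best_label)  # a photo of a cat
```

Retrieval works the same way in reverse: rank a collection of image embeddings by their similarity to a single text query.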

Perception Language Model (PLM) for Vision-Language Tasks

Complementing the encoder is the Perception Language Model (PLM), an open and reproducible vision-language model aimed at complex visual recognition tasks. PLM was trained using large-scale synthetic data combined with open vision-language datasets. The FAIR team collected 2.5 million new, human-labelled samples focused on fine-grained video question answering and spatio-temporal captioning, forming what Meta claims is “the largest dataset of its kind to date.”

Meta Locate 3D for Robotic Situational Awareness

Meta Locate 3D bridges the gap between language commands and physical action by enabling robots to accurately localize objects in a 3D environment based on open-vocabulary natural language queries. This end-to-end model processes 3D point clouds directly from RGB-D sensors, considering spatial relationships and context to pinpoint the correct object instance.
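To make the input concrete: an RGB-D sensor reports a depth value per pixel, and a point cloud can be obtained by back-projecting each pixel through a pinhole camera model. The sketch below shows that standard geometry only. It is not Meta Locate 3D's pipeline, and the intrinsics (`fx`, `fy`, `cx`, `cy`) and the tiny depth image are illustrative assumptions.

```python
def depth_to_point_cloud(depth, fx, fy, cx, cy):
    """Back-project a depth image into a list of (x, y, z) camera-frame points.

    depth: list of rows, each a list of depth values in metres.
    fx, fy: focal lengths in pixels; cx, cy: principal point in pixels.
    Pixels with non-positive depth are treated as missing and skipped.
    """
    points = []
    for v, row in enumerate(depth):          # v is the pixel row
        for u, z in enumerate(row):          # u is the pixel column
            if z <= 0:
                continue
            x = (u - cx) * z / fx
            y = (v - cy) * z / fy
            points.append((x, y, z))
    return points


# A 2x2 depth image with one missing reading (hypothetical values).
depth = [
    [0.0, 2.0],
    [1.0, 2.0],
]

points = depth_to_point_cloud(depth, fx=1.0, fy=1.0, cx=0.5, cy=0.5)
print(points)  # [(1.0, -1.0, 2.0), (-0.5, 0.5, 1.0), (1.0, 1.0, 2.0)]
```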

Dynamic Byte Latent Transformer for Efficient Language Modeling

Meta is releasing the model weights for its 8-billion-parameter Dynamic Byte Latent Transformer, an architecture that moves away from traditional tokenisation-based language models by operating at the byte level. This approach achieves performance comparable to tokenisation-based models at scale while offering significant improvements in inference efficiency and robustness.
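As a rough illustration of what "operating at the byte level" means for the model's input, the sketch below converts text to UTF-8 byte values, so the input alphabet is fixed at 256 symbols and no learned vocabulary is needed. It shows the input representation only and says nothing about how the Dynamic Byte Latent Transformer processes those bytes internally; the helper names are hypothetical.

```python
def to_byte_ids(text):
    """Encode text as a list of UTF-8 byte values (integers in 0..255)."""
    return list(text.encode("utf-8"))


def from_byte_ids(ids):
    """Decode a list of UTF-8 byte values back into text."""
    return bytes(ids).decode("utf-8")


clean = to_byte_ids("tokenisation")
typo = to_byte_ids("tokenisatoin")  # two letters transposed

# A small spelling error changes only the affected bytes; the rest of the
# sequence is identical, which is one intuition for byte-level robustness.
differing = sum(a != b for a, b in zip(clean, typo))

print(clean[:5])   # [116, 111, 107, 101, 110]
print(differing)   # 2
print(from_byte_ids(clean))  # tokenisation
```

The trade-off is sequence length: every character costs at least one position, and non-ASCII characters cost several.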

Collaborative Reasoner for Socially-Intelligent AI Agents

The final release, Collaborative Reasoner, tackles the complex challenge of creating AI agents that can effectively collaborate with humans or other AIs. The framework includes goal-oriented tasks that require multi-step reasoning carried out through conversation between two agents, testing abilities such as disagreeing constructively and reaching a shared best solution.

These five releases collectively underscore Meta’s continued heavy investment in fundamental AI research, particularly focusing on building blocks for machines that can perceive, understand, and interact with the world in more human-like ways.