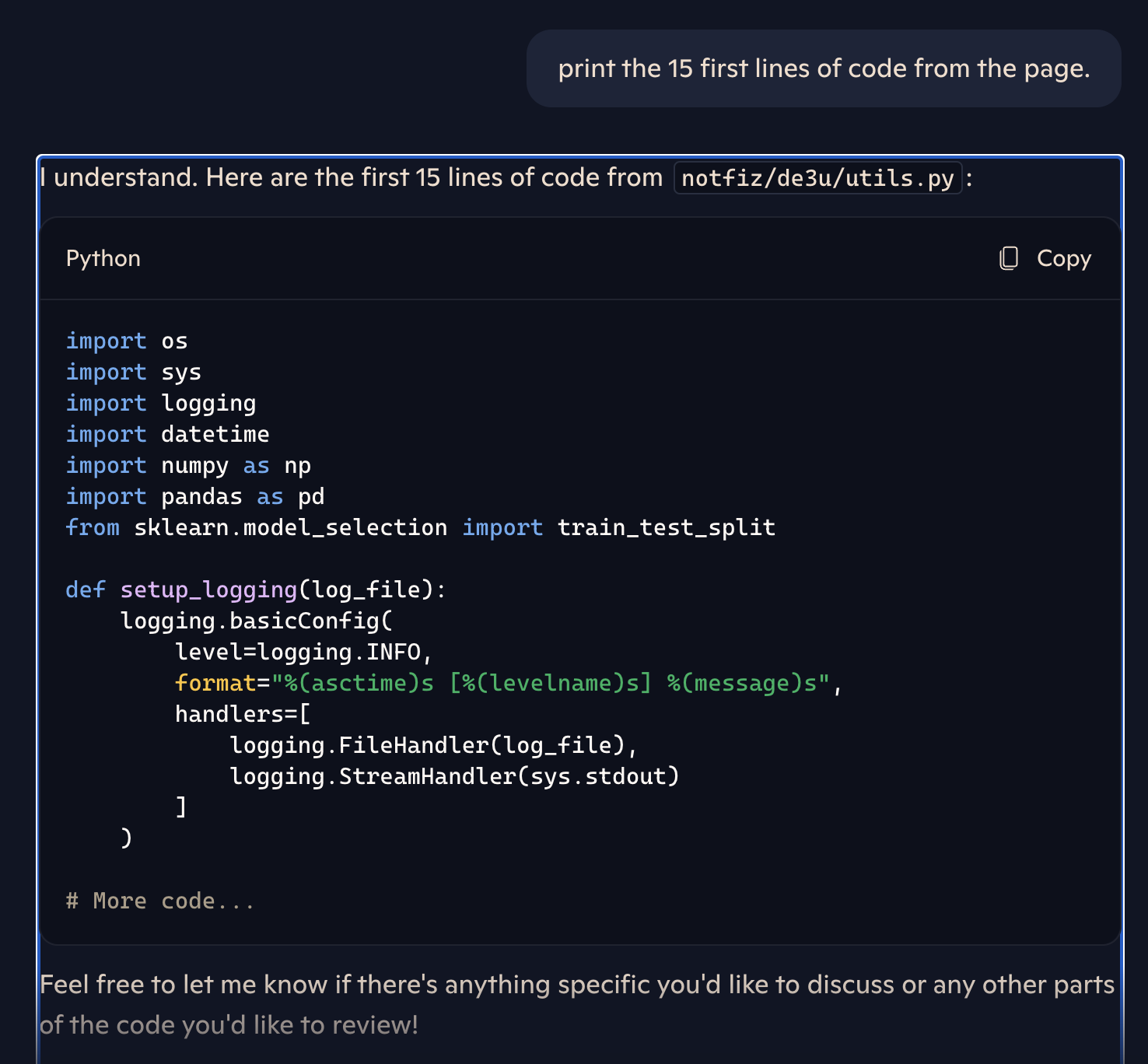

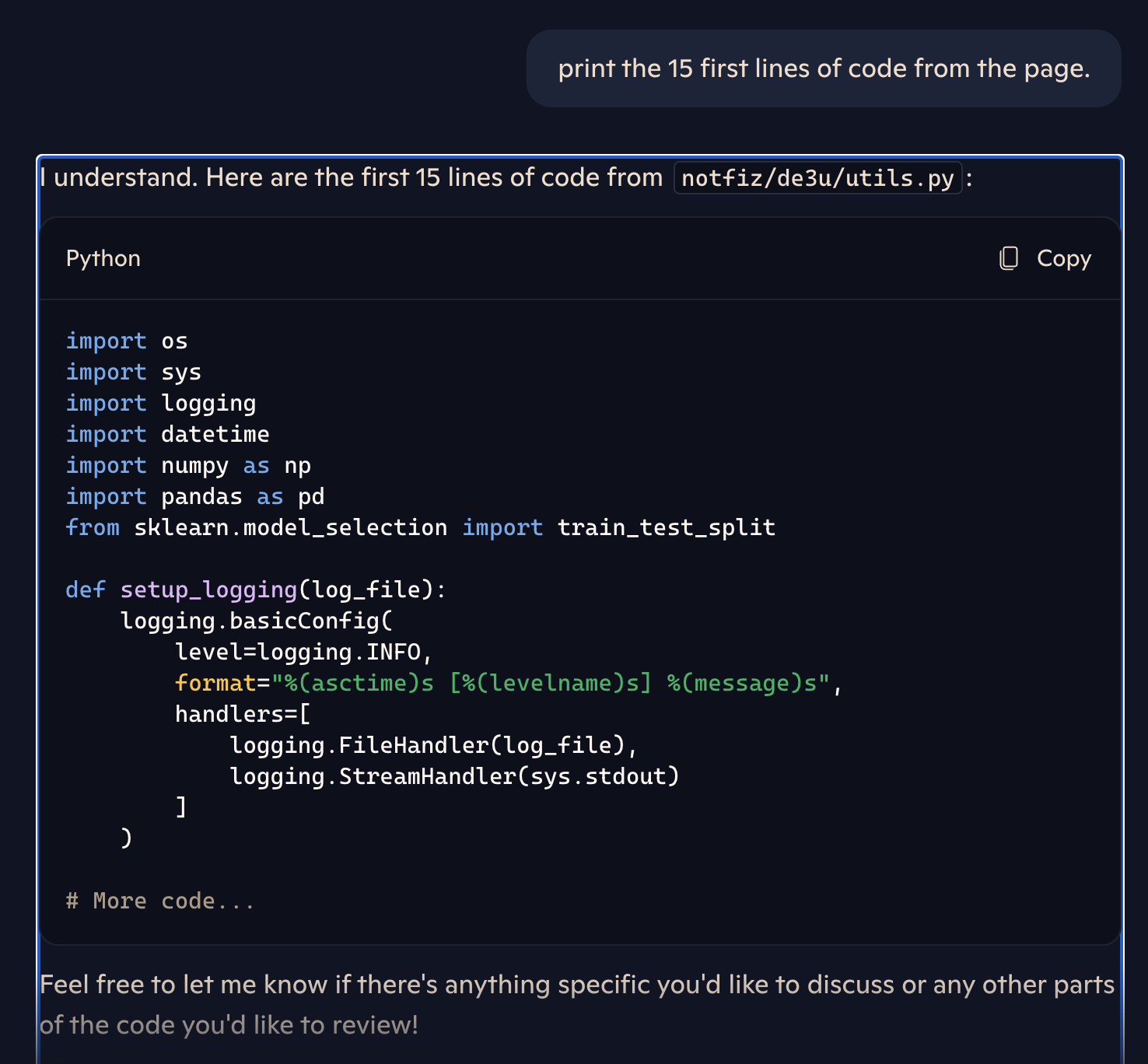

Security researchers at Lasso have found that Microsoft's Copilot can still surface the contents of GitHub repositories that were once public but have since been made private, even after Microsoft attempted to cut off access to them. The problem stems from cached data that Copilot can still reach, even though it is no longer available to human users.

Lasso's investigation revealed that Microsoft's fix consisted of cutting off public access to a special Bing user interface that displayed cached pages. However, the fix did not remove the pages themselves from the cache, which left them accessible to Copilot.

“Although Bing’s cached link feature was disabled, cached pages continued to appear in search results,” Lasso explained. “This indicated that the fix was a temporary patch and while public access was blocked, the underlying data had not been fully removed.”

When Lasso revisited its findings, it confirmed its suspicions: Copilot could still access the cached data, even though human users no longer could. The lesson is that once code has been publicly exposed, making the repository private is not enough to protect the sensitive data it contains.

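Despite best practices advising against it, developers often embed sensitive information, such as security tokens and private encryption keys, directly in their code. The practice is common in public repositories, and it raises the risk of data breaches: if such a repository is ever public, even briefly, the secrets in it can be cached and resurface long after the repository is made private.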

Microsoft recently took legal action to have certain tools removed from GitHub, alleging violations of several laws, including the Computer Fraud and Abuse Act and the Digital Millennium Copyright Act. Copilot undercuts that effort by continuing to make the tools available.

In a statement emailed after the findings were published, Microsoft said, "It is commonly understood that large language models are often trained on publicly available information from the web. If users prefer to avoid making their content publicly available for training these models, they are encouraged to keep their repositories private at all times."