Microsoft Introduces Energy-Efficient AI Model for Regular CPUs

Researchers at Microsoft have developed a new AI model that can operate on standard central processing units (CPUs) rather than requiring specialized graphics processing units (GPUs). This innovation has the potential to significantly reduce the energy demands associated with running AI applications.

The new model, called BitNet b1.58 2B4T, does this by abandoning the floating-point numbers traditionally used to store and process weights. Instead, it restricts each weight to one of three values: -1, 0, or 1. Such ternary weights carry about 1.58 bits of information each (log2 3 ≈ 1.58), hence the "b1.58" in the name, although the approach is often loosely described as a 1-bit architecture. Because multiplying an input by -1, 0, or 1 never requires an actual multiplication, the weight computations reduce to addition and subtraction, operations that CPUs handle efficiently.
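
To make the addition-and-subtraction point concrete, here is a minimal Python sketch of a matrix-vector product with weights restricted to -1, 0, and 1. It is an illustration only: the function name `ternary_matvec` and the sample values are hypothetical, and it is not how bitnet.cpp or the researchers' model is implemented.

```python
def ternary_matvec(weights, x):
    """Multiply a matrix of -1/0/1 weights by a vector without any multiplications.

    Hypothetical helper for illustration; not taken from bitnet.cpp.
    """
    out = []
    for row in weights:
        acc = 0.0
        for w, xi in zip(row, x):
            if w == 1:
                acc += xi      # a weight of +1 adds the input
            elif w == -1:
                acc -= xi      # a weight of -1 subtracts the input
            # a weight of 0 contributes nothing, so the input is skipped
        out.append(acc)
    return out


# Made-up example values: 2 output neurons, 3 inputs.
W = [[1, 0, -1],
     [-1, 1, 1]]
x = [0.5, 2.0, -1.5]

print(ternary_matvec(W, x))
```

The inner loop never multiplies a weight by an input: each weight only decides whether that input is added, subtracted, or ignored.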

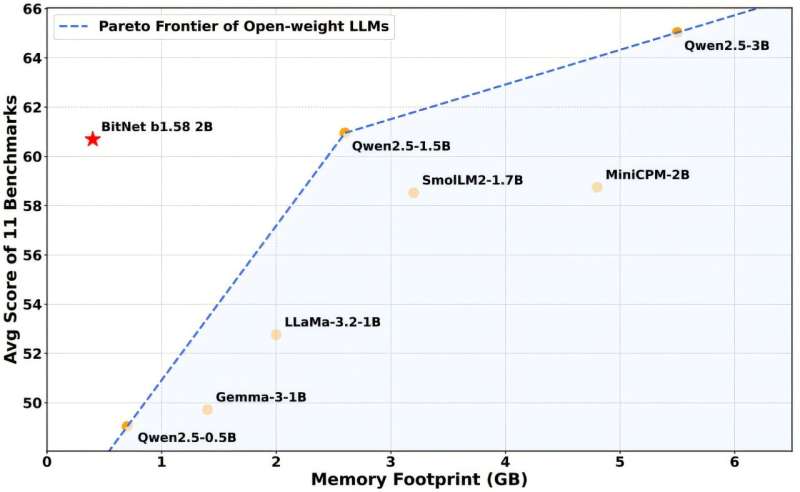

Testing has shown that BitNet b1.58 2B4T can match or even outperform some GPU-based models in its size class while using substantially less memory and energy. To run this new type of model, the researchers created a dedicated runtime environment called bitnet.cpp, optimized for the 1-bit architecture.
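
A back-of-the-envelope calculation suggests where the memory savings come from. The sketch below compares the storage needed for weights held as 16-bit floating-point numbers with the theoretical minimum for three-valued weights. The weight count of 2 billion is an assumption (suggested by the "2B" in the model's name, but not stated in this article), and the function name `weight_memory_gb` is hypothetical. The estimate covers weights only and ignores activations and any packing overhead, so actual figures will differ.

```python
import math


def weight_memory_gb(num_weights, bits_per_weight):
    """Return the storage needed for the weights alone, in gigabytes (10^9 bytes)."""
    return num_weights * bits_per_weight / 8 / 1e9


# Assumption: roughly 2 billion weights; the article does not give the count.
NUM_WEIGHTS = 2_000_000_000

fp16_gb = weight_memory_gb(NUM_WEIGHTS, 16)               # 16-bit floating point
ternary_gb = weight_memory_gb(NUM_WEIGHTS, math.log2(3))  # ~1.58 bits per weight

print(f"16-bit floats:   {fp16_gb:.2f} GB")
print(f"ternary weights: {ternary_gb:.2f} GB")
print(f"reduction:       {fp16_gb / ternary_gb:.1f}x")
```

Under these assumptions the weights shrink by roughly a factor of ten, which is the kind of reduction that would let a model fit in the memory of an ordinary laptop or phone.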

The implications could be significant. If the results hold up, models of this kind could let users run AI chatbots directly on their personal computers or mobile devices without relying on large data centers. That shift would not only reduce energy consumption but also enhance privacy, since processing would happen locally and would not require an internet connection.

The researchers have detailed their work in a paper published on the arXiv preprint server. Their findings suggest that this technology could make AI both more accessible and more sustainable.