Microsoft is pursuing legal action against the developers of malicious tools designed to circumvent the safety measures of its generative AI services, including the Azure OpenAI Service. An amended complaint names key individuals involved in this cybercrime operation, which Microsoft tracks as Storm-2139. The company aims to halt their activities, dismantle their illicit network, and deter others from misusing its AI technology.

The named defendants are:

- Arian Yadegarnia aka “Fiz” of Iran

- Alan Krysiak aka “Drago” of the United Kingdom

- Ricky Yuen aka “cg-dot” of Hong Kong, China

- Phát Phùng Tấn aka “Asakuri” of Vietnam

These individuals are central to the Storm-2139 network, which exploited compromised customer credentials to unlawfully access generative AI services. They then modified the capabilities of these services and sold access to other malicious actors. This access included detailed instructions on generating harmful and illicit content, such as non-consensual intimate images and other sexually explicit material. Microsoft’s terms of use explicitly prohibit these activities, which required deliberate attempts to bypass built-in safeguards. To protect the identities of potential victims and prevent the further spread of harmful content, Microsoft has not named specific celebrities and has excluded synthetic imagery and prompts from its filings.

Storm-2139: A Global Cybercrime Network

Microsoft’s Digital Crimes Unit (DCU) initially filed a lawsuit in December 2024 in the Eastern District of Virginia against 10 unidentified “John Does” involved in activities violating both U.S. law and Microsoft’s Acceptable Use Policy and Code of Conduct. This initial filing helped Microsoft gather more information about the criminal enterprise’s operations.

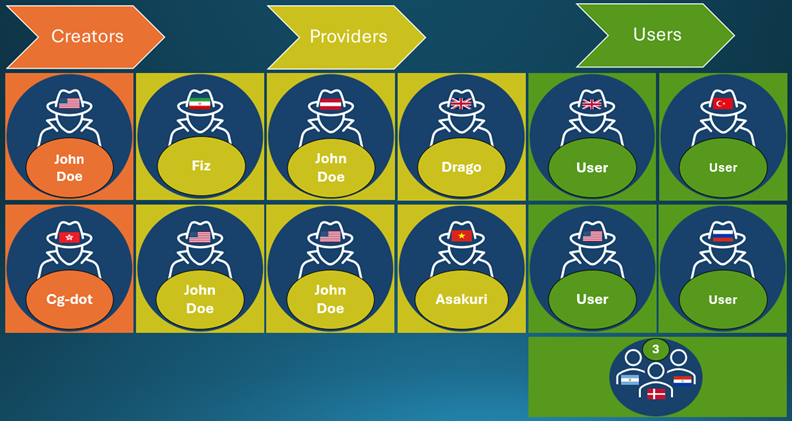

Storm-2139's members fall into three categories:

- Creators: Developed the tools used to abuse AI services.

- Providers: Modified and supplied these tools to end users, often with varying service tiers and payment models.

- Users: Utilized the tools to generate violating synthetic content, often featuring celebrities and sexual imagery.

Microsoft's investigation has identified several actors, including the four named defendants. Two actors located in the United States, in Illinois and Florida, have also been identified, but their identities are being withheld to avoid interfering with potential criminal investigations. Microsoft is preparing criminal referrals for U.S. and international law enforcement agencies.

Disrupting the Operation

As part of the initial filing, the court issued a temporary restraining order and preliminary injunction, enabling Microsoft to seize a website used by the cybercriminals. This action effectively disrupted the group’s ability to operate.

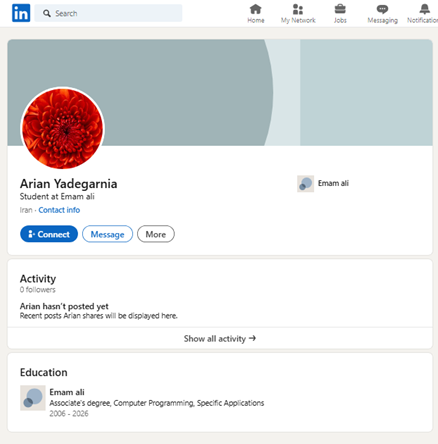

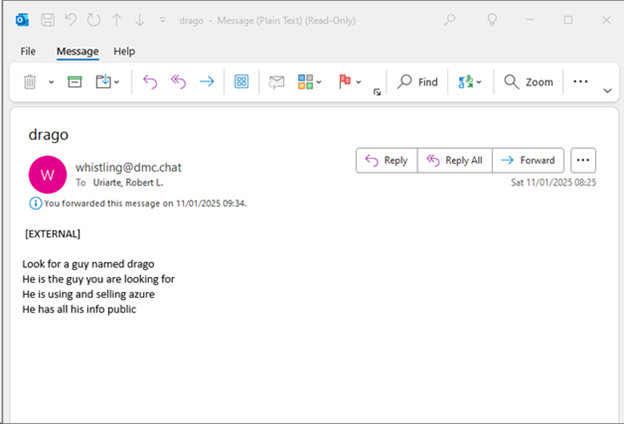

The seizure of the website and the unsealing of the legal filings in January prompted an immediate reaction from the actors. On monitored communication channels, members speculated about the identities of the "John Does" and the potential consequences. Some members exposed, or "doxed," Microsoft's counsel of record by posting their names, personal information, and in some cases, photographs.

Microsoft's counsel also received emails, including messages from suspected Storm-2139 members trying to shift blame onto others. This reaction underscores how effective Microsoft's legal actions can be: seizing infrastructure disrupts cybercriminal networks and also acts as a powerful deterrent.

Microsoft’s Commitment to Combatting AI Abuse

Microsoft is committed to addressing the misuse of AI and the lasting negative impact of abusive content on victims. The company is continuously working to enhance AI guardrails and protect its services from illegal and harmful content. Microsoft outlined a comprehensive approach to fighting abusive AI-generated content last year. This included:

- Publishing a whitepaper with recommendations for U.S. policymakers to modernize criminal law and equip law enforcement to bring bad actors to justice.

- Providing an update on its strategy to combat intimate image abuse, detailing steps taken to protect its services.

Microsoft emphasizes that combating malicious actors is a long-term commitment. By identifying and exposing these individuals and their harmful actions, Microsoft aims to set a precedent in the fight against the misuse of AI technology.