A recent paper from researchers at Cohere, Stanford, MIT, and Ai2 accuses LM Arena, the organization behind the popular crowdsourced AI benchmark Chatbot Arena, of allowing a handful of major AI companies to gain an unfair advantage in its leaderboard rankings. The study alleges that companies such as Meta, OpenAI, Google, and Amazon were permitted to privately test multiple variants of their AI models on the platform and then publish scores only for the highest-scoring variants.

The Controversy

Chatbot Arena, created in 2023 as an academic research project at UC Berkeley, has become a closely watched benchmark for AI companies. It works by pitting the answers of two different AI models against each other in a “battle,” with users voting for the better response. Those votes feed into each model’s score and its position on the leaderboard. The researchers analyzed more than 2.8 million Chatbot Arena “battles” between November 2024 and March 2025 and report evidence that certain major AI companies were able to collect more data from the platform because their models appeared in more battles.
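To make the voting mechanism concrete, here is a minimal sketch of how pairwise votes can be turned into ratings using a classic Elo-style update. This is a simplification for illustration only: the function names, the K-factor of 32, and the starting rating of 1000 are assumptions, and Chatbot Arena’s published leaderboard reportedly fits a Bradley-Terry model to all votes rather than applying this exact online update.

```python
from collections import defaultdict

K = 32.0              # hypothetical K-factor: how far a single vote moves a rating
BASE_RATING = 1000.0  # hypothetical starting rating for every model


def expected_score(r_a: float, r_b: float) -> float:
    """Probability that model A beats model B under the Elo logistic model."""
    return 1.0 / (1.0 + 10 ** ((r_b - r_a) / 400.0))


def update(ratings: dict, model_a: str, model_b: str, winner: str) -> None:
    """Apply one battle result in place. `winner` is 'a', 'b', or 'tie'."""
    r_a, r_b = ratings[model_a], ratings[model_b]
    e_a = expected_score(r_a, r_b)
    s_a = {"a": 1.0, "b": 0.0, "tie": 0.5}[winner]  # actual score for model A
    ratings[model_a] = r_a + K * (s_a - e_a)
    ratings[model_b] = r_b + K * ((1.0 - s_a) - (1.0 - e_a))


def rate(battles):
    """Replay a list of (model_a, model_b, winner) votes and return ratings."""
    ratings = defaultdict(lambda: BASE_RATING)
    for model_a, model_b, winner in battles:
        update(ratings, model_a, model_b, winner)
    return dict(ratings)


if __name__ == "__main__":
    votes = [("model-x", "model-y", "a"), ("model-y", "model-z", "tie")]
    print(rate(votes))
```

Each vote nudges both models’ ratings toward the observed outcome, so a rating is only as reliable as the number of battles behind it.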

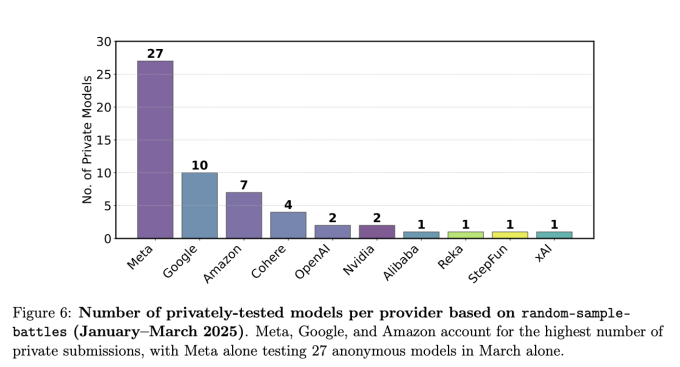

The authors argue that this preferential access gave these companies a significant advantage. For instance, Meta was allegedly able to privately test 27 model variants on Chatbot Arena between January and March in the run-up to its Llama 4 release, and then publicly revealed the score of only a single model, one that ranked near the top of the leaderboard. Sara Hooker, Cohere’s VP of AI research and a co-author of the study, described this practice as “gamification.”
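The statistical concern behind publishing only the best of many private variants can be illustrated with a small simulation. This is a sketch under simplifying assumptions, not the paper’s method: every variant is assumed to have the same true skill, and each measured leaderboard score is that skill plus Gaussian noise. The true skill of 1200, the noise level of 15 points, and the trial count are hypothetical; only the figure of 27 variants comes from the Meta example above.

```python
import random
import statistics

TRUE_SKILL = 1200.0  # hypothetical true rating shared by every variant
NOISE_SD = 15.0      # hypothetical noise in a single measured leaderboard score
N_VARIANTS = 27      # number of privately tested variants in the Meta example
TRIALS = 2_000       # number of simulated release cycles


def measured_score(rng: random.Random) -> float:
    """One noisy leaderboard measurement of a variant whose true skill is TRUE_SKILL."""
    return rng.gauss(TRUE_SKILL, NOISE_SD)


def published_score(rng: random.Random, n_variants: int) -> float:
    """Test n_variants privately and publish only the best measured score."""
    return max(measured_score(rng) for _ in range(n_variants))


def average_published(n_variants: int, seed: int = 0) -> float:
    """Average published score over TRIALS simulated release cycles."""
    rng = random.Random(seed)
    return statistics.fmean([published_score(rng, n_variants) for _ in range(TRIALS)])


if __name__ == "__main__":
    print(f"one variant submitted:   {average_published(1):.1f}")
    print(f"best of {N_VARIANTS} published: {average_published(N_VARIANTS):.1f}")
```

Under these assumptions, the published best-of-27 score lands well above the true skill even though no variant is actually better, and the gap widens as more variants are tested. This is the kind of inflation that limits on private tests and disclosure of all their scores are meant to prevent.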

Response from LM Arena

LM Arena co-founder and UC Berkeley professor Ion Stoica disputed the findings, saying the study was “full of inaccuracies” and relied on “questionable analysis.” The organization reaffirmed its commitment to “fair, community-driven evaluations” and invited all model providers to submit more models for testing. It also argued that one provider choosing to submit more tests than another does not mean the second provider is being treated unfairly.

Implications and Recommendations

The researchers recommend several changes to make Chatbot Arena fairer, including a clear limit on the number of private tests a provider can run and public disclosure of the scores from those tests. They also suggest adjusting the sampling rate so that all models appear in the same number of “battles.” LM Arena has rejected some of these suggestions but has said it plans to implement a new sampling algorithm.

The controversy comes shortly after Meta was caught optimizing one of its Llama 4 models for “conversationality” to achieve a high score on Chatbot Arena, without ever releasing that optimized model. Together, that incident and the new study add to the scrutiny of private benchmark organizations and their potential susceptibility to corporate influence.