Nvidia Faces Pressure Amidst Rising AI Costs and Intensified Competition

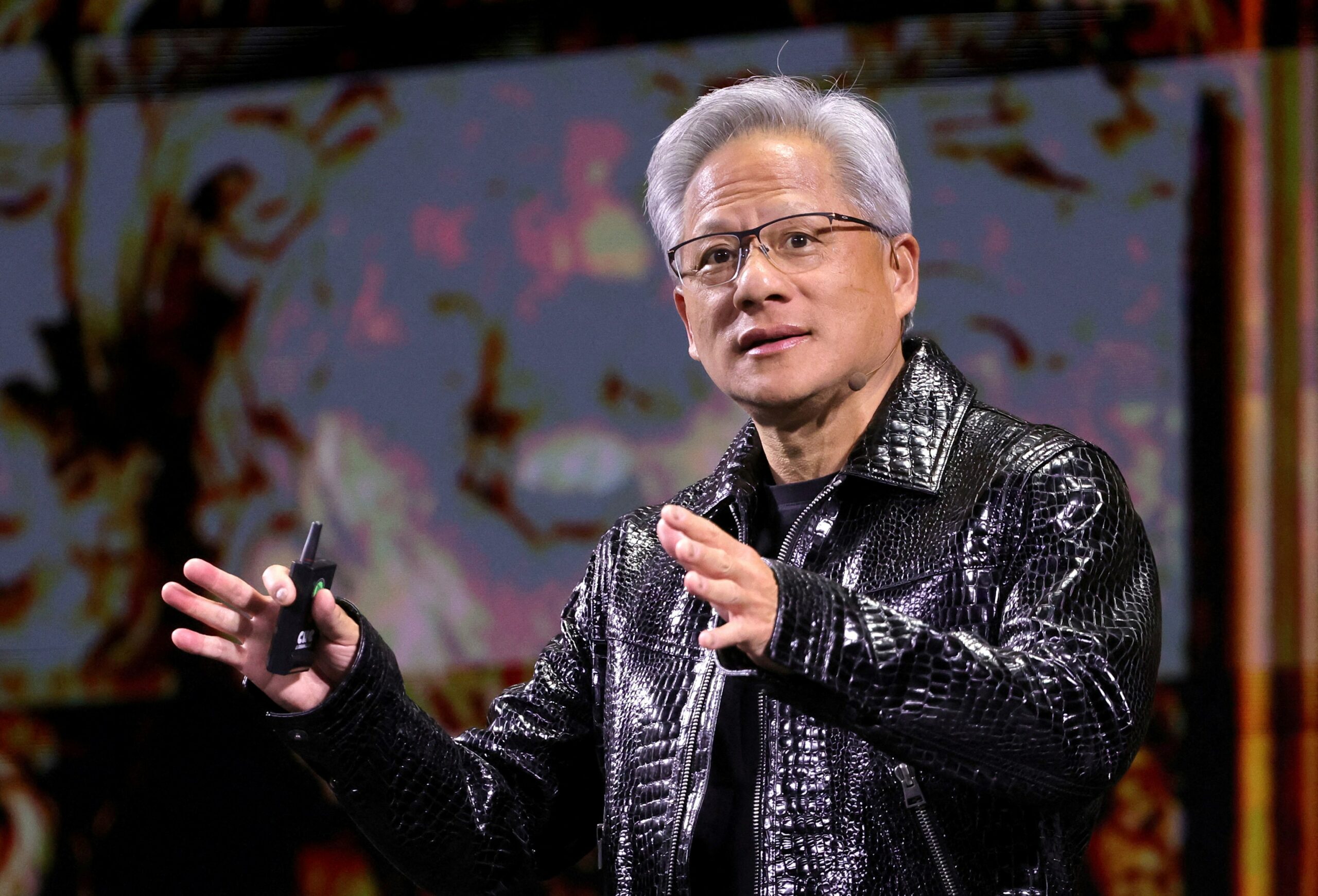

When Nvidia CEO Jensen Huang took the stage at the company’s annual software developer conference, the focus was clear: defending a nearly $3 trillion chip company’s market dominance. The pressure is mounting as Nvidia’s biggest customers scrutinize the soaring costs of artificial intelligence.

Nvidia’s conference comes on the heels of a competitive move by China’s DeepSeek, which unveiled a chatbot it claims requires less computing power than its rivals. Concerns over the cost of computing power have put pressure on Nvidia (NVDA.O), whose revenue comes largely from selling chips that cost tens of thousands of dollars apiece. Despite this, Nvidia’s revenue more than quadrupled over the past three years, reaching $130.5 billion.

At the conference, Nvidia is expected to unveil details of its Vera Rubin chip system, named after the American astronomer who advanced the concept of dark matter. Mass production of the system is slated for later this year. The announcement follows the delayed market entry of Rubin’s predecessor, Blackwell, a chip named after mathematician David Blackwell. Production setbacks with that chip weighed on Nvidia’s margins.

Nvidia CEO Jensen Huang delivers a keynote address at CES 2025 in Las Vegas, Nevada.

The Shift from Training to Inference

Technological shifts in the AI market pose a challenge to Nvidia’s core business. The industry, previously focused on ‘training,’ which involves feeding AI models vast datasets, is increasingly driven by ‘inference,’ where models use what they have learned to generate responses for users.

While Nvidia holds more than 90% of the training market, competition is growing in inference. How much share those competitors capture will depend on how inference computing develops.

The Rise of Inference and Competitive Threats

Inference computing covers a wide spectrum, from smartphone applications to data centers that generate complex financial analyses. Numerous startups in Silicon Valley and beyond, along with Nvidia’s traditional competitors like Advanced Micro Devices (AMD.O), are vying to offer chips that deliver the same results at a lower cost, especially for electricity. Nvidia’s chips consume so much power that some AI companies are considering nuclear reactors to run them.

“They have a hammer, and they’re just making bigger hammers,” said Bob Beachler, VP at Untether AI, one of many startups challenging Nvidia in inference markets. “They own the (training) market. And so every new chip they come out with has a lot of training baggage.”

Nvidia’s Strategy: Reasoning and Expanding Markets

Nvidia argues that a new form of AI, known as “reasoning,” plays to its strengths. Reasoning chatbots generate several lines of text and then evaluate their own work on the problem. Nvidia says its chips have an advantage in these computing-intensive processes.

“The market for inference is going to be many times bigger than the training market,” Jay Goldberg, CEO of D2D Advisory, stated. “As inference becomes more important, their percentage share will be lower, but the total market size and the pool of revenues could be much, much larger.”

Beyond chatbots, Nvidia is exploring other computing markets. The company plans to use new AI methods to improve chatbots and make robots more useful.

A key focus will be quantum computing. After Huang said in January that quantum computing was decades away, remarks that weighed on companies invested in the technology, Microsoft (MSFT.O) and Google (GOOGL.O) countered that it was closer to practical application. Nvidia will dedicate a full day of its conference to the quantum industry and its own plans.

Nvidia is also developing a central processor chip, a project first reported by Reuters.

“It could eat into what’s left of the Intel market,” said Maribel Lopez, an independent technology industry analyst.