OmniParser V2: Revolutionizing GUI Automation

GUI automation relies on agents that can understand and interact with user interfaces. However, general-purpose large language models (LLMs) often struggle in this role. Two major hurdles stand out: reliably identifying interactable icons, and correctly interpreting the semantics of on-screen elements so that each one is associated with the intended action.

OmniParser addresses these challenges by ‘tokenizing’ UI screenshots: it converts raw pixel data into a structured representation of on-screen elements that an LLM can understand. The LLM can then predict its next action from these parsed, interactable elements. OmniParser V2 builds on the original with substantial improvements.
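To make the idea of ‘tokenizing’ a screenshot more concrete, here is a minimal Python sketch of the kind of structure such parsing could produce and how an agent might use it. It is not OmniParser's actual output format or API: `UIElement`, `serialize_elements`, and `click_point` are hypothetical names, and the sketch assumes the parser yields, for each element, a normalized bounding box, a functional caption, and an interactable flag.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class UIElement:
    """One parsed on-screen element (hypothetical schema, not OmniParser's real output)."""
    element_id: int
    bbox: Tuple[float, float, float, float]  # (x1, y1, x2, y2), normalized to [0, 1]
    caption: str  # functional description, e.g. from an icon caption model
    interactable: bool


def serialize_elements(elements: List[UIElement]) -> str:
    """Render parsed elements as plain-text lines an LLM can read in its prompt."""
    lines = []
    for el in elements:
        kind = "interactable" if el.interactable else "text"
        lines.append(f"[{el.element_id}] ({kind}) {el.caption}")
    return "\n".join(lines)


def click_point(elements: List[UIElement], chosen_id: int,
                width: int, height: int) -> Tuple[int, int]:
    """Map the element ID chosen by the LLM back to a pixel coordinate (bbox center)."""
    el = next(e for e in elements if e.element_id == chosen_id)
    if not el.interactable:
        raise ValueError(f"element {chosen_id} is not interactable")
    x1, y1, x2, y2 = el.bbox
    return round((x1 + x2) / 2 * width), round((y1 + y2) / 2 * height)


# Example: a tiny, made-up screen with two buttons and one text label.
elements = [
    UIElement(0, (0.00, 0.00, 0.10, 0.05), "File menu", True),
    UIElement(1, (0.45, 0.48, 0.55, 0.52), "Save button", True),
    UIElement(2, (0.10, 0.90, 0.30, 0.95), "Status: all changes saved", False),
]
print(serialize_elements(elements))
# Suppose the LLM answers "1" (the Save button) on a 1920x1080 screen.
print(click_point(elements, 1, 1920, 1080))  # → (960, 540)
```

In this sketch the LLM only has to name an element ID from a short, captioned list instead of predicting raw pixel coordinates; the stored bounding box then supplies the click location.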

OmniParser V2 detects even the smallest interactable elements more accurately and runs inference much faster, making it a highly efficient tool for GUI automation. These gains come from training on a larger dataset for interactive element detection and functional icon captioning. In addition, reducing the image size processed by the icon caption model cuts latency by 60% compared with its predecessor.

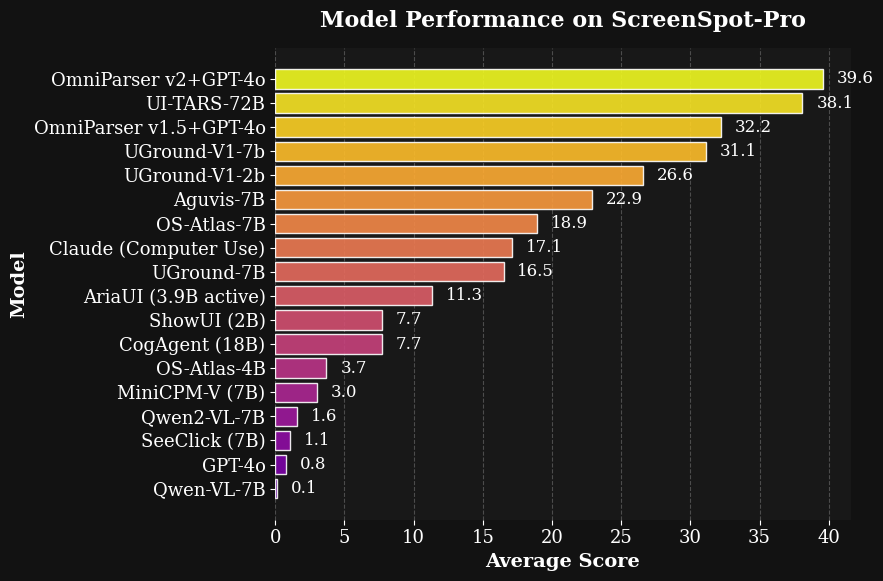

Notably, OmniParser paired with GPT-4o achieves a state-of-the-art average accuracy of 39.6% on ScreenSpot Pro, a recently released grounding benchmark featuring high-resolution screens and tiny target icons. GPT-4o on its own scores only 0.8% on the same benchmark.

To enable rapid experimentation with different agent settings, the team created OmniTool, a Dockerized Windows system equipped with the essential tools agents need. Out of the box, OmniTool lets OmniParser work with a range of leading LLMs: OpenAI (4o/o1/o3-mini), DeepSeek (R1), Qwen (2.5VL), and Anthropic (Sonnet). Together, they cover screen understanding, grounding, action planning, and execution.
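The four stages named above can be pictured as a simple loop. The following Python sketch is an illustration under stated assumptions, not OmniTool's implementation: `Action`, `Planner`, and `run_agent` are hypothetical names, and each LLM backend is assumed to be wrapped behind a single `next_action` method so that models can be swapped without changing the loop.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional, Protocol


@dataclass(frozen=True)
class Action:
    """One planned step (hypothetical schema): what to do and which element to target."""
    kind: str                          # e.g. "click", "type", or "done"
    element_id: Optional[int] = None   # ID of a parsed screen element, if any
    text: str = ""                     # text to type, for "type" actions


class Planner(Protocol):
    """Hypothetical wrapper interface: any LLM backend exposing one method."""
    def next_action(self, goal: str, screen: str) -> Action: ...


def run_agent(goal: str,
              parse_screen: Callable[[], str],
              planner: Planner,
              execute: Callable[[Action], None],
              max_steps: int = 10) -> List[Action]:
    """Repeat parse -> plan -> execute until the planner returns a "done" action."""
    history: List[Action] = []
    for _ in range(max_steps):
        screen = parse_screen()                     # screen understanding and grounding
        action = planner.next_action(goal, screen)  # action planning by the LLM
        history.append(action)
        if action.kind == "done":
            break
        execute(action)                             # execution via the caller-supplied executor
    return history


# Example: a scripted stand-in for a real LLM, so the loop can run offline.
class ScriptedPlanner:
    def __init__(self, actions: List[Action]) -> None:
        self._actions = list(actions)

    def next_action(self, goal: str, screen: str) -> Action:
        return self._actions.pop(0)


executed: List[Action] = []
plan = ScriptedPlanner([Action("click", 1), Action("done")])
history = run_agent("Save the document", lambda: "[1] (interactable) Save button",
                    plan, executed.append)
print([a.kind for a in history])  # → ['click', 'done']
```

Keeping the model behind a `Protocol` is what makes switching between backends a one-line change in this sketch, and the `max_steps` cap guards against a planner that never signals completion.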

Addressing Risks and Promoting Responsible AI

In alignment with Microsoft’s AI principles and Responsible AI practices, risk mitigation is a priority. The icon caption model is trained on Responsible AI data to reduce its potential to infer sensitive attributes (e.g., race, religion) of individuals who appear in icon images. Users are also encouraged to apply OmniParser only to screenshots that do not contain harmful content.

For OmniTool, the team conducted a threat model analysis using the Microsoft Threat Modeling Tool. The project’s GitHub repository provides a sandboxed Docker container, safety guidance, and examples. The guidance recommends keeping a human in the loop to further minimize risk.
