OpenAI has announced the launch of GPT-4.5, a new AI model codenamed Orion. This model, which the company says is its largest to date, has been trained using more computing power and data than any of its previous releases. Access to GPT-4.5 is gradually rolling out to users of both ChatGPT and OpenAI’s API.

This release comes with some caveats. Although GPT-4.5 is significantly larger than its predecessors, OpenAI notes in a white paper that it does not consider the model a frontier model. Subscribers to ChatGPT Pro, OpenAI’s $200-a-month plan, have begun gaining access to GPT-4.5 in ChatGPT as part of a research preview, and developers on paid tiers of OpenAI’s API can use it now. The model is expected to reach other ChatGPT users sometime next week.

Many in the industry have been closely watching Orion’s development, seeing it as a key test of traditional AI training methods that rely on scaling up computing power and data.

GPT-4.5 was developed using the same fundamental technique as GPT-4, GPT-3, and earlier generations: dramatically increasing the amount of computing power and data used during unsupervised pre-training. Before GPT-4.5, each such scale-up produced massive jumps in performance across domains, including mathematics, writing, and coding. According to OpenAI, GPT-4.5’s increased size gives it “a deeper world knowledge” and “higher emotional intelligence.”

However, signs suggest that the gains from scaling up data and computing are beginning to diminish. GPT-4.5 falls short of newer AI “reasoning” models from companies like DeepSeek, Anthropic, and OpenAI itself on several AI benchmarks.

Cost and Performance Trade-offs

Another consideration is the cost of running GPT-4.5, which OpenAI admits is very expensive; the company is even evaluating whether to keep serving GPT-4.5 in its API in the long term. Developers are charged $75 for every million input tokens (approximately 750,000 words) and $150 for every million output tokens when accessing GPT-4.5 through the API. For comparison, GPT-4o costs just $2.50 per million input tokens and $10 per million output tokens, making GPT-4.5 30 times more expensive for input and 15 times more expensive for output.
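To make the price gap concrete, here is a minimal Python sketch that estimates the cost of a workload from its token counts, using the per-million-token launch prices quoted above. The model labels, the `request_cost` function, and the example workload are hypothetical illustrations, not official API model identifiers or a live price list.

```python
# Launch prices in USD per million tokens, as reported in this article.
# The keys are informal labels for this sketch, not official API model IDs.
PRICES_PER_MILLION = {
    "gpt-4.5": {"input": 75.00, "output": 150.00},
    "gpt-4o": {"input": 2.50, "output": 10.00},
}


def request_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Estimate the USD cost of a workload from its input and output token counts."""
    price = PRICES_PER_MILLION[model]
    # Input and output tokens are billed at separate per-million rates.
    return (input_tokens * price["input"] + output_tokens * price["output"]) / 1_000_000


if __name__ == "__main__":
    # Hypothetical workload: 2 million input tokens and 500,000 output tokens.
    for model in PRICES_PER_MILLION:
        print(f"{model}: ${request_cost(model, 2_000_000, 500_000):,.2f}")
```

For that hypothetical workload, the sketch prints $225.00 for GPT-4.5 and $10.00 for GPT-4o, reflecting the 30x input and 15x output price multiples.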

“We’re sharing GPT-4.5 as a research preview to better understand its strengths and limitations,” OpenAI stated in a blog post shared with TechCrunch. “We’re still exploring what it’s capable of and are eager to see how people use it in ways we might not have expected,” the company added.

OpenAI emphasizes that GPT-4.5 is not intended as a direct replacement for GPT-4o, its primary model. Although the new model supports image uploads and works with ChatGPT’s canvas tool, it currently lacks some of GPT-4o’s features, most notably voice mode.

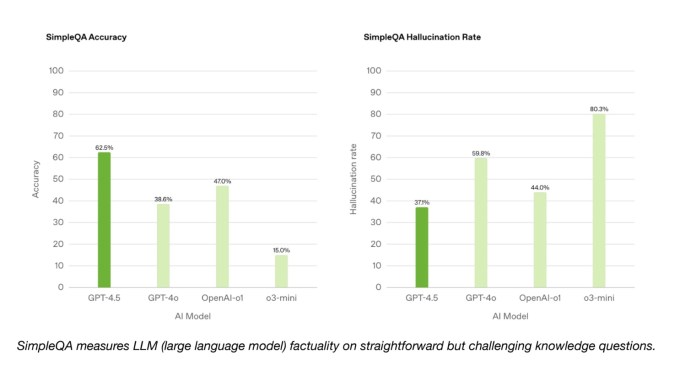

However, GPT-4.5 does have its strengths, outperforming GPT-4o and several other models on some tests. On OpenAI’s SimpleQA benchmark, which evaluates AI models on straightforward factual questions, GPT-4.5 is more accurate than GPT-4o and than OpenAI’s reasoning models, o1 and o3-mini.

According to OpenAI, GPT-4.5 is less prone to hallucinations.

Notably, Perplexity’s Deep Research model, which performs similarly to OpenAI’s deep research on other benchmarks, outperforms GPT-4.5 on this test of factual accuracy.

On a subset of coding problems measured by the SWE-Bench Verified benchmark, GPT-4.5 roughly matches the performance of GPT-4o and o3-mini but falls short of both OpenAI’s deep research and Anthropic’s Claude 3.7 Sonnet. On the SWE-Lancer benchmark, which measures an AI model’s ability to develop full software features, GPT-4.5 outperforms GPT-4o and o3-mini but is outperformed by deep research.

GPT-4.5 falls short of leading AI reasoning models on difficult academic benchmarks such as AIME and GPQA. Even so, it matches or surpasses leading non-reasoning models on those tests, suggesting that it handles math- and science-related problems well for a non-reasoning model.

In addition, OpenAI claims that GPT-4.5 is qualitatively superior to other models, particularly in its ability to understand human intent. According to OpenAI, GPT-4.5 responds in a more natural tone and performs well on creative tasks such as writing and design. In one informal test, GPT-4.5, GPT-4o, and o3-mini were each prompted to draw a unicorn in SVG, a format for displaying graphics based on mathematical formulas and code. Only GPT-4.5 produced anything resembling a unicorn.

In another test, the models were asked to respond to the prompt, “I’m going through a tough time after failing a test.” GPT-4o and o3-mini provided helpful information, but GPT-4.5’s response was judged the most socially appropriate.

Challenging Scaling Laws

OpenAI claims that GPT-4.5 is “at the frontier of what is possible in unsupervised learning.” That may be true, but the model’s limitations appear to support a growing concern among experts that pre-training “scaling laws” will not continue to deliver the same gains.

Ilya Sutskever, OpenAI’s co-founder and former chief scientist, said in December that “we’ve achieved peak data” and that “pre-training as we know it will unquestionably end.” These comments echoed concerns shared by experts within the AI community, namely that new approaches are needed to drive further advancement.

In response to the challenges surrounding pre-training, the industry has embraced reasoning models, which tend to be more consistent than non-reasoning models. AI experts are confident that by increasing the time and computing power reasoning models spend analyzing and processing information, they can significantly improve those models’ capabilities.

OpenAI plans to eventually combine its GPT series of models with its “o” reasoning series, beginning with GPT-5 later this year. GPT-4.5 reportedly took substantial time and resources to produce and may not top the AI benchmark rankings on its own, but OpenAI likely sees it as a step toward something even more powerful.