The Paradox of AI Efficiency and Power Consumption

The adoption of more efficient AI technology mirrors a historical trend: when energy-efficient lightbulbs emerged, electricity consumption was expected to drop. Instead, efficiency and lower costs spurred wider adoption, resulting in new applications and a surge in power consumption and infrastructure needs. Today, AI startups are achieving similar breakthroughs in efficiency, prompting some to anticipate a decline in infrastructure needs, but history suggests otherwise. As AI becomes more affordable and efficient, its adoption is expected to surge, leading to new use cases and generating unprecedented demand for computing power and infrastructure.

Rapidly increasing demand for AI is poised to fundamentally reshape power infrastructure planning, pushing utilities to accelerate grid modernization and expand power generation capabilities. This transformation is only beginning, creating opportunities across the ecosystem, including data center developers, power producers, and suppliers of electrical and cooling components. For investors, the Global X Artificial Intelligence and Technology ETF (AIQ), the Global X Data Center and Digital Infrastructure ETF (DTCR), and the Global X U.S. Electrification ETF (ZAP) represent potential investment opportunities to capitalize on this transformation.

Key Takeaways

- The AI boom could cause U.S. data centers to consume up to 12% of U.S. electricity by 2028.

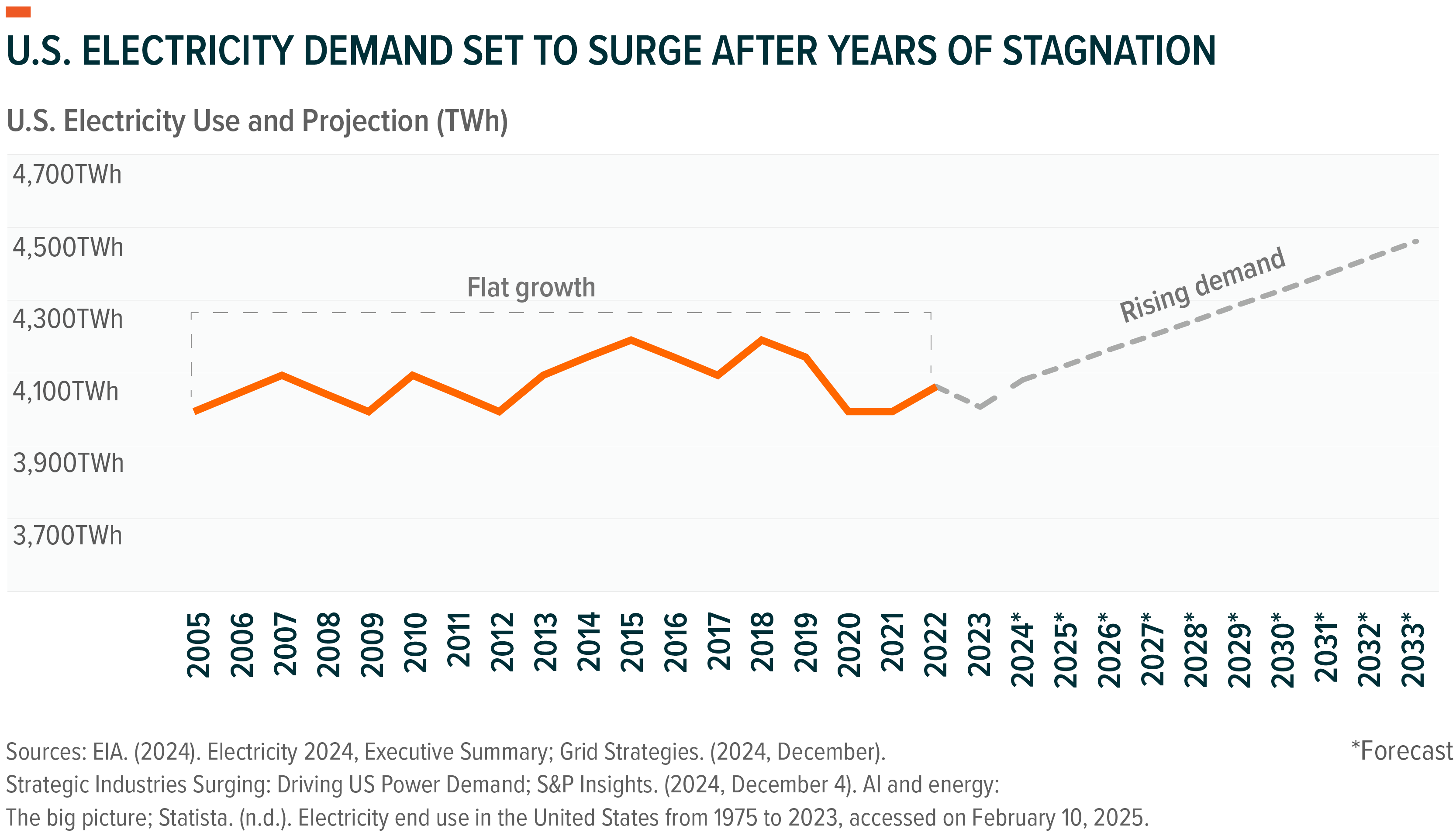

- After two decades of flat electricity growth, utilities could face a 47% increase in demand by 2040, emphasizing the need for grid upgrades and power generation.

- Decarbonizing the grid while modernizing power infrastructure demands significant investments, driving innovation in areas like nuclear power and energy storage systems.

The Power Costs of the Automation Age

The global economy, led by the U.S. technology industry, is rapidly transitioning from the Information Age to the Automation Age. In this new era, machines, software, and systems no longer simply process data but act on it autonomously. The Automation Age promises a surge in efficiency and productivity, powered by smart, agile, accessible AI, but this progress comes with an inevitable power cost as the technology industry trains, tests, and deploys AI and manufactures AI-related semiconductors.

Training Large-Scale AI Models

Training foundational AI models illustrates how energy-intensive AI can be. OpenAI used approximately 50 GWh of electricity to train GPT-4, enough to power 6,000 U.S. homes for a year and fifty times more electricity than was used to train GPT-3. Since GPT-4’s public launch in March 2023, infrastructure demands have only grown as companies deploy ever-larger AI-GPU clusters to train next-generation models.
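As a rough sanity check, the sketch below works out what the training figures quoted above imply per household and for GPT-3. It only rearranges the numbers in the text; the function names are illustrative, and reading "fifty times more" as a simple 50x multiple is an assumption.

```python
# Back-of-envelope check using only the figures quoted in the text.
GPT4_TRAINING_GWH = 50.0    # approximate electricity used to train GPT-4
HOMES_POWERED = 6_000       # U.S. homes that energy could power for a year
GPT4_TO_GPT3_RATIO = 50.0   # assumption: "fifty times more" read as 50x

KWH_PER_GWH = 1_000_000


def implied_kwh_per_home(total_gwh: float, homes: int) -> float:
    """Annual household consumption implied by the homes comparison."""
    return total_gwh * KWH_PER_GWH / homes


def implied_gpt3_gwh(gpt4_gwh: float, ratio: float) -> float:
    """GPT-3 training energy implied by the quoted multiple."""
    return gpt4_gwh / ratio


per_home = implied_kwh_per_home(GPT4_TRAINING_GWH, HOMES_POWERED)
gpt3 = implied_gpt3_gwh(GPT4_TRAINING_GWH, GPT4_TO_GPT3_RATIO)
print(f"Implied annual use per home: {per_home:,.0f} kWh")
print(f"Implied GPT-3 training energy: {gpt3:.1f} GWh")
```

Under these assumptions, the comparison implies roughly 8,300 kWh per home per year and about 1 GWh for GPT-3.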

This trend is particularly visible in large tech companies. Meta Platforms plans to invest at least $60 billion in capital expenditure in 2025 and anticipates having 1.3 million GPUs by the end of that year. xAI plans to spend $35–40 billion to grow its Colossus supercomputer to 1 million GPUs, and Microsoft plans to spend $80 billion on AI infrastructure in fiscal year 2025.

Individual GPUs are also becoming more power-hungry. Nvidia’s Blackwell (GB200) chip, although significantly more efficient per unit of work, is designed for nearly seven times the power draw of the A100 chips used to train GPT-3. By 2030, U.S. data centers could house millions of these advanced GPUs, which will also require significant energy for cooling.

Using AI Applications

Even the simple act of putting an AI model to work is energy intensive. A single ChatGPT query can consume 10 times as much energy as a Google search, enough electricity to power a light bulb for 20 minutes. More complex tasks like generating videos or high-quality AI images can require hundreds of times more energy. One minute of interaction with an AI voice assistant could consume up to 20 times the energy of a traditional phone call.

ChatGPT’s 180 million monthly users highlight current AI power demands, but the real surge is expected from agentic AI models interacting with each other. By 2030, the agentic AI market is projected to reach $47 billion, with billions of AI agents working autonomously on human-directed tasks. Anticipating this surge, hyperscalers are committing over $300 billion in capital expenditures for 2025, primarily for AI infrastructure.

Manufacturing AI Chips

Producing semiconductors requires significant energy. For example, Taiwan Semiconductor Manufacturing Company (TSMC) uses 8% of Taiwan’s electricity to run its chip fabrication facilities, a figure that could jump to 24% by 2030. As the United States increases chip production, starting with high-value chips, energy demand could rapidly increase. The first phase of TSMC’s fab facility in Phoenix, Arizona, requires 200 MW of peak connected load, enough to power roughly 30,000 homes. By 2030, its power needs could grow nearly sixfold. Seventy-five semiconductor facilities are either planned or under construction in the United States, and by 2030 the country could produce 20% of the world’s most advanced chips, further amplifying power needs.
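To put the Phoenix figures in perspective, the sketch below derives the per-home load implied by the comparison and the peak load implied by sixfold growth. It uses only the numbers quoted above; the function names are illustrative, and treating "nearly sixfold" as exactly 6x is an assumption that gives an upper bound.

```python
# Back-of-envelope check using only the figures quoted in the text.
PHASE_ONE_PEAK_MW = 200.0   # peak connected load of the first phase
HOMES_EQUIVALENT = 30_000   # homes the text says that load could power
GROWTH_MULTIPLE = 6.0       # assumption: "nearly sixfold" treated as 6x

KW_PER_MW = 1_000


def implied_kw_per_home(peak_mw: float, homes: int) -> float:
    """Per-home load implied by the homes comparison."""
    return peak_mw * KW_PER_MW / homes


def projected_peak_mw(current_mw: float, multiple: float) -> float:
    """Peak load after scaling the current load by a growth multiple."""
    return current_mw * multiple


print(f"Implied load per home: "
      f"{implied_kw_per_home(PHASE_ONE_PEAK_MW, HOMES_EQUIVALENT):.1f} kW")
print(f"Upper-bound 2030 peak load: "
      f"{projected_peak_mw(PHASE_ONE_PEAK_MW, GROWTH_MULTIPLE):,.0f} MW")
```

On these assumptions, the site's power needs would approach 1,200 MW by 2030.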

Data Center Energy Demand and Infrastructure Challenges

U.S. data center electricity consumption is set to surge from 176 TWh in 2023 to approximately 580 TWh by 2028. Meeting this demand alone will require U.S. power producers to add roughly 50 GW of new production capacity by 2030, at a cost of almost $60 billion in new power generation investment. The challenge is compounded by rising power needs from semiconductor manufacturing and EV adoption.
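The relationship between these energy (TWh) and capacity (GW) figures can be sketched with a simple conversion. This is a rough illustration rather than a planning model: it assumes the added consumption is spread evenly across all 8,760 hours of a year, ignores reserve margins and capacity factors, and compares a 2028 consumption estimate with a 2030 capacity estimate. The function names are illustrative.

```python
# Rough conversion between annual energy (TWh) and average power (GW),
# using only the figures quoted in the text.
HOURS_PER_YEAR = 8_760      # assumption: non-leap year, constant load
GWH_PER_TWH = 1_000

CONSUMPTION_2023_TWH = 176.0
CONSUMPTION_2028_TWH = 580.0
NEW_CAPACITY_GW = 50.0
INVESTMENT_USD_BN = 60.0


def average_gw(twh_per_year: float) -> float:
    """Average continuous power needed to deliver an annual energy total."""
    return twh_per_year * GWH_PER_TWH / HOURS_PER_YEAR


def annual_growth_rate(start: float, end: float, years: int) -> float:
    """Compound annual growth rate between two values."""
    return (end / start) ** (1 / years) - 1


def cost_per_kw(investment_usd_bn: float, capacity_gw: float) -> float:
    """Implied investment per kW of new capacity, in U.S. dollars."""
    return investment_usd_bn * 1e9 / (capacity_gw * 1e6)


added_twh = CONSUMPTION_2028_TWH - CONSUMPTION_2023_TWH
growth = annual_growth_rate(CONSUMPTION_2023_TWH, CONSUMPTION_2028_TWH, 5)
print(f"Added consumption: {added_twh:.0f} TWh per year")
print(f"Average additional load: {average_gw(added_twh):.1f} GW")
print(f"Implied annual growth: {growth:.1%}")
print(f"Implied cost: ${cost_per_kw(INVESTMENT_USD_BN, NEW_CAPACITY_GW):,.0f} per kW")
```

Under these simplifications, the added 404 TWh corresponds to an average load of roughly 46 GW, which is in the same range as the 50 GW of new capacity cited above.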

Complicating matters, much of the U.S. grid dates back to the 1960s and faces growing stress. The average transformer is over 40 years old, and many power lines are operating beyond their capacity. This results in frequent maintenance issues and power outages, highlighting the urgent need for a robust infrastructure overhaul to meet growing demand and ensure reliability.

Historically, power companies have expanded generation and transmission capacity at a measured pace, responding to gradual catalysts like population growth over decades. Data centers operate on a different timeline, often going from groundbreaking to full operation in under two years. Data center growth is also highly regionalized: in 2023, just 15 states accounted for 80% of U.S. data center energy consumption, with Virginia alone comprising nearly 26%. Increased demand will likely intensify localized energy bottlenecks, which makes targeted investments in substations, power redundancy, and fiber connectivity essential.

Another challenge is reconciling the decarbonization of the grid with the need to rapidly scale power production infrastructure. In the United States, nearly 100 GW of coal-fired power plant capacity was phased out over the last 15 years, and another 68 GW will be phased out this decade. Major data center regions may face significant power shortfalls by 2030.

Investments in Energy Innovation Are Accelerating

Newly announced data centers primarily plan to utilize natural gas turbines for reliable, on-site power generation. While those systems can deliver consistent electricity, their emissions conflict with tech companies’ carbon neutrality commitments. Solar and wind power deployment is accelerating, but these intermittent sources can’t yet match data centers’ need for uninterrupted power, though advancing energy storage technologies are beginning to bridge this gap.

In our view, the data center energy landscape of the future involves nuclear energy, driven by small modular reactors (SMRs). We see SMRs as the most transformative development in energy, since they are compact, scalable, and much faster to build and deploy than traditional nuclear plants. They provide a steady, low-carbon power supply that aligns with corporate sustainability goals while boosting grid reliability. Innovation in SMR form factors, along with permitting and regulatory support to drive the industry forward, is critical to the evolution of the AI power story.

Tech companies are racing to secure nuclear power through partnerships with major utilities, signaling the urgency to scale energy infrastructure alongside growing AI demands.

Conclusion: Efficiency Leads to Increased Consumption

We believe that cheaper AI will fuel greater adoption, driving demand for AI infrastructure and amplifying power needs. Between now and 2030, industry estimates suggest that nearly $1 trillion of capital investment is needed to modernize the U.S. power grid and meet emerging power needs. Utilities and competitive power producers are scaling investments, and private capital is expected to follow, cumulatively creating opportunities for companies that deliver power and supply transformers, power management systems, and grid modernization solutions. We see this intersection of AI applications, AI infrastructure, and electrification as a positive theme for investors throughout the decade.