The future is inherently uncertain, much like a horizon obscured by haze. We make educated guesses about what’s to come. Recently, a team of AI researchers and forecasters with experience at institutions like OpenAI and the Center for AI Policy published the AI 2027 scenario, offering a detailed forecast for the next 2-3 years. This near-term prediction outlines a quarter-by-quarter progression of anticipated AI capabilities, including multimodal models achieving advanced reasoning and autonomy.

The forecast is noteworthy for its specificity and the credibility of its contributors, who have insight into current research pipelines. Its most striking prediction is that artificial general intelligence (AGI) will be achieved in 2027, followed by artificial superintelligence (ASI) months later. In the scenario, AGI would match or exceed human capabilities across virtually all cognitive tasks, while ASI would dramatically surpass human intelligence.

Expert Opinions and Implications

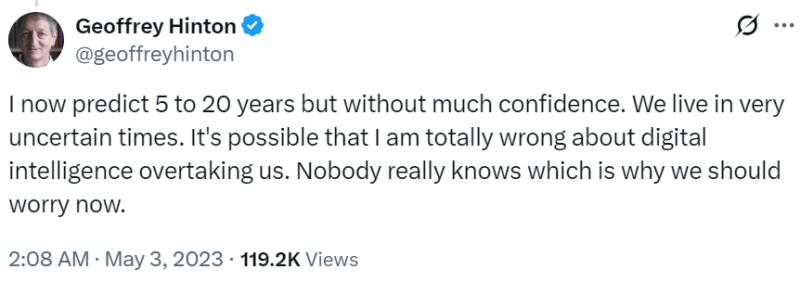

Not everyone agrees with these predictions. Ali Farhadi, CEO of the Allen Institute for Artificial Intelligence, expressed skepticism, stating that the forecast “doesn’t seem to be grounded in scientific evidence.” Others, like Anthropic co-founder Jack Clark, view it as “the best treatment yet of what ‘living in an exponential’ might look like.” Anthropic CEO Dario Amodei has voiced a similar timeline, suggesting that AI capable of surpassing humans in almost everything will arrive in the next two to three years.

The potential arrival of AGI and ASI has significant implications. If these systems arrive, they could lead to substantial job losses as organizations automate roles. Industries like customer service, content creation, and data analysis may face dramatic upheaval. The philosophical implications are also profound: if machines can think, or appear to think, what does that mean for our understanding of self?

Preparing for the Future

Whether or not the AI 2027 predictions are correct, they are plausible and warrant preparation. For businesses, this means investing in AI safety research and organizational resilience. Governments need to develop regulatory frameworks addressing immediate and long-term risks. Individuals must focus on developing uniquely human skills like creativity and emotional intelligence while working effectively with AI tools.

The time for abstract debate is over; concrete preparation for near-term transformation is urgently needed. Our future will be shaped by the choices we make and the values we uphold, starting today.