Zoom Researchers Unveil Resource-Efficient AI Prompting Method

Engineers at Zoom Communications have developed a new method for prompting large language models (LLMs) that could significantly reduce the computational resources they need for reasoning tasks. The approach, dubbed “Chain of Draft” (CoD), builds on the widely used “Chain of Thought” (CoT) technique and, in the team's tests, sharply cut the number of tokens models generated.

CoT prompts a model to work through a problem in explicit sequential steps, much as a person might reason aloud. However, the Zoom team observed that CoT often produces far more detail than a solution needs. According to the researchers, humans frequently skip or combine steps based on what they already know, which streamlines the process.

CoD mimics this human efficiency. The engineers achieved this by instructing the model, in the prompt, to keep each reasoning step to a maximum of five words. This constraint pushes the model to write down only concise, essential steps.
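As a rough illustration of that constraint, the sketch below builds CoT-style and CoD-style chat messages and checks a response against the five-word budget. The prompt wording, the `####` answer separator, and all function names are assumptions made for this example, not the researchers' published prompts or code; the paper and repository contain the exact text.

```python
# Illustrative sketch only: the prompt wording, separator, and helper names
# are assumptions for this example, not the published Chain of Draft prompts.

ANSWER_SEPARATOR = "####"  # assumed marker between draft steps and final answer

COT_SYSTEM_PROMPT = (
    "Think step by step to answer the question. "
    f"Give the final answer after the separator {ANSWER_SEPARATOR}."
)

COD_SYSTEM_PROMPT = (
    "Think step by step, but keep only a minimal draft for each step, "
    "with five words at most. "
    f"Give the final answer after the separator {ANSWER_SEPARATOR}."
)


def build_messages(question: str, concise: bool = True) -> list[dict[str, str]]:
    """Return chat-style messages using the CoD prompt (or CoT if concise=False)."""
    system = COD_SYSTEM_PROMPT if concise else COT_SYSTEM_PROMPT
    return [
        {"role": "system", "content": system},
        {"role": "user", "content": question},
    ]


def split_response(response: str) -> tuple[list[str], str]:
    """Split a response into draft steps (one per line) and the final answer."""
    draft, _, answer = response.partition(ANSWER_SEPARATOR)
    steps = [line.strip() for line in draft.splitlines() if line.strip()]
    return steps, answer.strip()


def steps_over_budget(steps: list[str], max_words: int = 5) -> list[str]:
    """Return the steps whose whitespace-separated word count exceeds the budget."""
    return [step for step in steps if len(step.split()) > max_words]


# Hand-written example response, not real model output.
example = "20 - x = 12\nx = 8\n#### 8"
steps, answer = split_response(example)
```

The messages returned by `build_messages` would be passed to whichever chat API an application already uses. The budget check is only a diagnostic for inspecting responses: the length limit itself is imposed through the prompt, not by post-processing.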

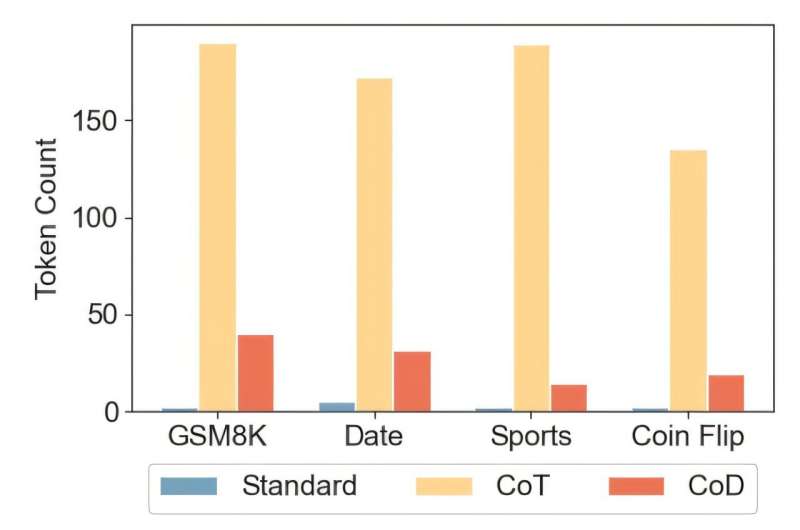

To evaluate CoD, the researchers applied the new approach to existing AI models, including Claude 3.5 Sonnet, by changing the prompt rather than the models themselves. They found that CoD drastically reduced the number of tokens needed to complete tasks. For instance, on a set of sports-related questions, average token usage dropped from 189.4 with CoT to 14.3 with CoD, while accuracy rose from 93.2% to 97.35%.

With CoD, LLMs answered questions using far fewer tokens, in some cases only about 7.6% of the tokens that CoT prompting required, while matching or improving on its accuracy.
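The 7.6% figure is consistent with the sports-question numbers quoted above, as a quick check shows. The variable names are illustrative; the two token counts are the ones given in this article.

```python
# Token counts quoted in the article for the sports-related question task.
cot_tokens = 189.4  # Chain of Thought
cod_tokens = 14.3   # Chain of Draft

ratio = cod_tokens / cot_tokens  # fraction of CoT's tokens that CoD used
reduction = 1 - ratio            # fraction of tokens saved

print(f"CoD used {ratio:.1%} of CoT's tokens ({reduction:.1%} fewer)")
# prints: CoD used 7.6% of CoT's tokens (92.4% fewer)
```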

The practical implications of CoD are substantial. By switching to CoD in applications such as math, coding, and other logical tasks, organizations could see a dramatic reduction in computational resource consumption. Because fewer generated tokens mean less computation per request, this would translate into shorter processing times and lower costs.
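To give a sense of scale, the sketch below estimates output-token cost for the two prompting styles. The price and request volume are hypothetical placeholders, not figures from the researchers or any provider, and the function name is made up for this example. It also counts generated tokens only, so it ignores the input side of each request.

```python
# Back-of-the-envelope estimate. The price and request volume are
# hypothetical assumptions, not figures from the paper or any provider.

def estimate_output_cost(
    tokens_per_response: float,
    requests: int,
    usd_per_million_tokens: float,
) -> float:
    """Estimated cost in USD of the tokens generated across all requests."""
    return tokens_per_response * requests * usd_per_million_tokens / 1_000_000


HYPOTHETICAL_PRICE = 15.0        # assumed USD per million output tokens
HYPOTHETICAL_REQUESTS = 1_000_000  # assumed number of requests

# Average tokens per response quoted in the article (sports-related questions).
cot_cost = estimate_output_cost(189.4, HYPOTHETICAL_REQUESTS, HYPOTHETICAL_PRICE)
cod_cost = estimate_output_cost(14.3, HYPOTHETICAL_REQUESTS, HYPOTHETICAL_PRICE)

print(f"CoT: ${cot_cost:,.2f}  CoD: ${cod_cost:,.2f}  saved: ${cot_cost - cod_cost:,.2f}")
```

Real savings depend on the task, the model, and the provider's pricing, so the numbers here only show how the token reduction carries through to cost.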

The research team says that moving existing CoT-based AI applications to CoD would take minimal effort. The code and relevant data for CoD are available on GitHub.

More information: Silei Xu et al, Chain of Draft: Thinking Faster by Writing Less, arXiv (2025). DOI: 10.48550/arxiv.2502.18600

Code and data: github.com/sileix/chain-of-draft